If a robot kills a person, who is to blame?

If you are looking for signs of a coming apocalypse for which robots will be to blame, look to the demilitarized zone between North and South Korea. Here, along the most heavily fortified border in the world, soldiers are not the only ones standing watch.

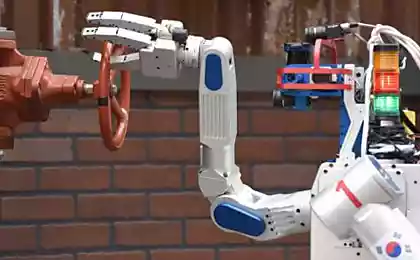

Meet the SGR-A1, a sentry robot developed by Samsung in South Korea. The 1.2-meter device looks like little more than a pair of black metal shoe boxes, but its soulless eyes, equipped with infrared sensors, can detect an enemy at a distance of four kilometers. Its machine gun is at the ready.

Currently, the robot notifies the people sitting in its control room of a suspected attacker, and it needs a person's approval before it opens fire. But the robot can also work in automatic mode and shoot first at its own discretion. Resistance, my fellow humans, is futile.

The Pentagon and militaries all over the world are developing increasingly autonomous weapons that go far beyond the remote-controlled drones Washington has used in Pakistan, Afghanistan and other countries. Technological advances allow these new types of weapons to select a target and fire on it by their own choice, without a person's approval. Some predict that these robots will one day fight side by side with human soldiers.

Not surprisingly, news of robots that kill makes some people nervous. In April this year, an international coalition of designers, researchers and human rights activists campaigning against the creation of killer robots appealed to United Nations diplomats in Geneva. The campaigners want a ban on the development and use of "lethal autonomous weapons", while acknowledging that such weapons, although technologically possible, have never been used.

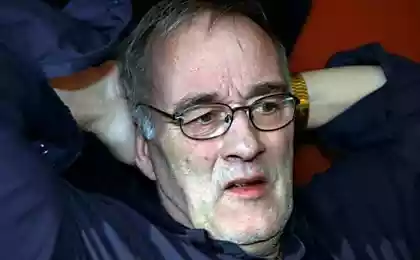

"It makes sense to ask whether the decline of the value of human life that will allow robots to kill people?" - Says Peter Asaro, senior lecturer in the School of Media Research in New York, and a representative of the campaign against the killer robots.

This is just one of the questions that philosophers, jurists and scientists have wrestled with since time immemorial, and it is being asked again as the world prepares for an onslaught of new robots which, we are told, will mow lawns, care for the elderly, teach children with autism and even drive our cars. These robots cannot kill, but they will force governments and courts to consider a number of legal and ethical issues. Whom should I sue if I am hit by a driverless car? What if a medical robot gives a patient the wrong medication? What if a robot vacuum cleaner accidentally sucks up my hair while I am dozing on the floor (as actually happened to a woman in South Korea not long ago)? Can a robot commit a war crime?

It is this last question that worries Professor Asaro the most. He and other members of the coalition, which includes Human Rights Watch, argue that if an autonomous computer system programmed to distinguish enemy soldiers from civilians actually pulls the trigger without "human approval", it will be very difficult to keep the situation under control if something goes wrong.

If a robot malfunctions and kills an entire village, you cannot put an inanimate object before the International Criminal Court or court-martial it. Some experts say that, under the law, you cannot blame even the robot's developers if they did not foresee that the robot could go haywire.

In criminal law, the courts must find what is known as mens rea, Latin for "guilty mind". But they are unlikely to find that a robot, at the current level of development of artificial intelligence, has a will at all. Robot designers may incur civil liability if it is found that the robot went out of control because of a software failure. However, they may not be held responsible for the actions of killer robots.

These killer robots could look like anything, from autonomous drones to rovers with guns. A 2012 directive from the US Department of Defense states that autonomous systems "should be designed so that commanders and operators can exercise appropriate levels of human control over the use of force." In an email response to a question from The Globe and Mail, Canada's Department of National Defence said that it is "not currently developing autonomous lethal weapons", but that its research agency is actively engaged in a "research program in the field of unmanned systems".

At least one robotics company, Waterloo, Ont.-based Clearpath Robotics Inc., has spoken out against killer robots, even though it has built robots for the Canadian and US militaries. Ryan Gariepy, Clearpath's co-founder and chief technology officer, said that demining and surveillance are good applications for autonomous military robots, but killing is not, especially if such machines are on only one side of the battle: "How can robots decide who should suffer the death penalty? Do enemy soldiers thereby forfeit the right to life?"

Inevitably, the discussion of killer robots revolves around the "Three Laws of Robotics" from the stories of science fiction writer Isaac Asimov. The first law prohibits robots from harming people; the second tells robots to obey people unless that conflicts with the first law; the third tells robots to protect their own existence, provided that this does not violate the first two laws. But most experts say these laws would do little good in the real world: even Asimov exposes their shortcomings in his stories.

Nevertheless, Professor Ronald Arkin of the Georgia Institute of Technology, a well-known American robot designer who works on Pentagon projects, argues that killer robots or other automated weapons systems could be programmed to follow the laws of war, and to follow them better than people do. A robot, he says, will never shoot out of fear or to defend its own life. It will have access to information and data that no human soldier could process as quickly, which makes a mistake in the "heat of battle" less likely. It would never intentionally kill civilians in retaliation for the death of a comrade. And it really could watch over soldiers so that they do not commit crimes.

Professor Arkin also argues that autonomous technology is, in fact, already on the battlefield. According to him, US Patriot missile batteries select targets automatically and give a person up to nine seconds to cancel the target and stop the missile. The US Navy's Phalanx system protects ships by automatically shooting down missiles.

But even he calls for a moratorium on the development of more autonomous weapons until it can be shown that they can reduce civilian casualties. "Nobody wants the Terminator to appear. Who would want a system that can be sent on a mission and will decide for itself who should be killed?" says Professor Arkin. "These systems must be designed very carefully and cautiously, and I think there are ways to do that."

In recent years, heavyweights of the technology world have grown increasingly concerned about artificial intelligence. Physicist Stephen Hawking has warned that the emergence of AI "could spell the end of the human race." Elon Musk, founder of Tesla Motors, has called AI an "existential threat" that is no less dangerous than nuclear weapons. Bill Gates, founder of Microsoft, is also concerned.

People who actually work on creating artificial intelligence say there is nothing to worry about. Yoshua Bengio, a leading AI researcher at the University of Montreal, notes that the fiction of Star Wars and Star Trek greatly overestimates the abilities of robots and artificial intelligence. One day, he says, we might build a machine that could rival the human brain. But for now, AI is not that advanced.

> "There is still a great deal to do before robots become what you see in the movies," says Professor Bengio. "We are still very far from achieving the level of understanding of the world that we expect from, say, a five-year-old child, or even an adult. Reaching that goal may take a decade or more. We cannot even replicate the intelligence of a mouse."

Without a doubt, both the hardware and the software for robots keep moving forward.

Robots developed by Boston Dynamics, which Google recently bought, can run over rough terrain like dogs and crawl like rats through disaster zones.

A robot dog named Spot can stay on its feet after being kicked, and its metal legs look eerily realistic.

But if you fear that a killer-robot revolution is inevitable, Atlas, a six-foot humanoid robot weighing 330 pounds, will help you calm down. Also created by Boston Dynamics, it can walk up stairs and over rocks. It looks like a Cyberman from the series "Doctor Who", as if designed by Apple. However, its battery charge lasts just one hour.

It seems, says Ian Kerr, who holds the Canada Research Chair in Ethics, Law and Technology at the University of Ottawa, that people are gradually handing control over to machines with artificial intelligence: computers that will eventually do certain things better than we do. Although it will be some time before we see cars that need no driver, there are already cars that can park themselves. IBM's Watson, from International Business Machines Corp., which won the quiz show Jeopardy! in 2011, is currently used to analyze millions of pages of medical research to recommend treatments for cancer patients.

> "The human is just a point ... I sometimes think about something like this, and at that point I am like Abraham on the mountain, who hears the voice of God but still has to decide for himself what to do," says Professor Kerr. "It is natural that a hospital will want its doctors to rely on machines, because machines have greater capabilities ... And then we reach the point where humanity will be relieved of certain kinds of decisions, such as driving or waging war."

Source: geektimes.ru/company/robohunter/blog/253026/