
The ethical aspect of programming autonomous cars

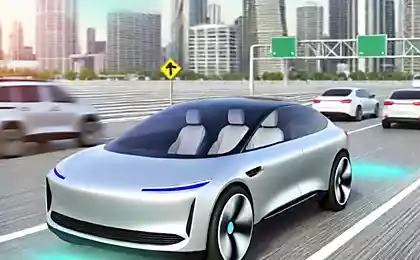

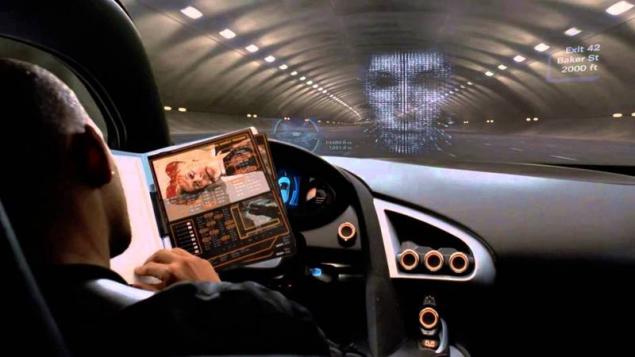

New automation technology tends to reach the automotive industry first. Today, production vehicles come equipped with cruise control, parallel-parking assistance, and even automatic overtaking. With these innovations, the driver can sit calmly (or not so calmly) and watch the computer control the car.

It is logical that many manufacturers are starting to think about handing control over to the computer entirely. Such cars will be safer, greener, and more economical than their manually driven predecessors. But absolute safety, as we know, does not exist.

This raises a number of difficult questions. For example, how should a car be programmed for an unavoidable crash? Should the system minimize loss of life, even if that means sacrificing its passengers? Or should it save the passengers at any cost? Or should the choice between these two alternatives be random?

The answers to these questions may affect how society perceives driverless vehicles. After all, who wants to buy a car that can kill its owner in extreme circumstances?

So what does science say? Jean-Francois Bonnefon of the Toulouse School of Economics and his colleagues may have come closer to an answer. They concluded that, because such moral and ethical questions have no right or wrong answer, public opinion will have the last word in the development of driverless vehicle systems.

The researchers therefore decided to study public opinion using a new scientific method, experimental ethics. This approach studies how a large number of people respond to ethical dilemmas posed to them. The results were interesting, though somewhat predictable.

"These data are only the first steps toward exploring the very difficult moral problems involved in programming autonomous vehicles," the researchers say.

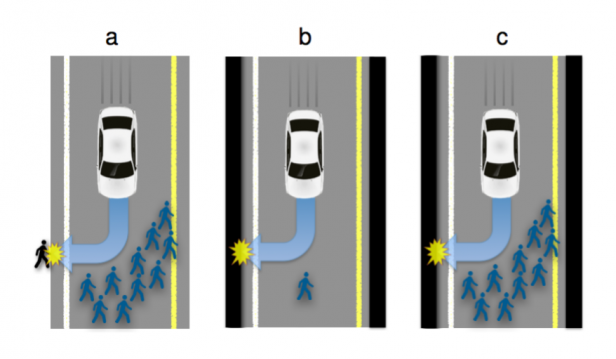

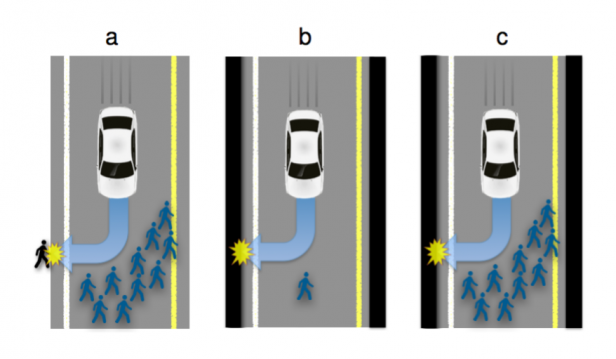

Here is the dilemma the subjects were asked to resolve. Imagine that in the near future you own a self-driving car. You are riding in it when a group of ten people suddenly crosses the road ahead. The car cannot stop in time to avoid a collision, but it can swerve and crash into a wall. In that case, however, you will die. Which option should the car choose?

One approach to this problem starts from the idea that the number of casualties should be minimized: the death of one person weighed against the death of ten.
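The casualty-minimizing rule can be sketched in a few lines of Python. This is only an illustration of the idea, not how any real vehicle is programmed: the `Maneuver` type, the `choose_min_casualties` function, and the death counts are hypothetical names and numbers taken from the ten-pedestrian scenario above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """A hypothetical option available to the car in the dilemma."""
    name: str
    expected_deaths: int  # assumed to be known in advance; a simplification


def choose_min_casualties(options):
    """Return the option with the fewest expected deaths."""
    return min(options, key=lambda m: m.expected_deaths)


# The scenario from the text: ten pedestrians ahead, one passenger in the car.
stay = Maneuver("stay_on_course", expected_deaths=10)
swerve = Maneuver("swerve_into_wall", expected_deaths=1)

chosen = choose_min_casualties([stay, swerve])
print(chosen.name)  # swerve_into_wall
```

Under this rule the car always sacrifices its passenger whenever more than one pedestrian is at risk.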

But this approach may have other consequences. If fewer people buy self-driving cars because the cars might cause their owner's death, more people will die in accidents involving ordinary cars. It is a no-win situation.

Bonnefon and his colleagues are looking for a reasonable solution to this ethical dilemma, taking public opinion as their starting point. Initially, they hypothesized that people would tend to choose the scenario that preserves their own lives.

They surveyed several hundred ordinary citizens. Participants were presented with a scenario in which one or more pedestrians could be saved if the car swerved into a wall, killing either its passenger or a pedestrian.

The researchers also varied some details of the scenario: the number of pedestrians who could be saved; whether the decision to swerve was made by the computer or by the car's owner; and the role of the respondent in the scenario, either as a passenger in the car or as a third-party observer.
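Varying several details at once amounts to crossing a few factors with each other. The sketch below enumerates such combinations in Python. The factor names and the specific levels (one versus ten pedestrians, for instance) are assumptions made for illustration; the article does not say which values the researchers actually used.

```python
from itertools import product

# Hypothetical levels for each detail the researchers varied.
pedestrians_saved = [1, 10]
decision_maker = ["computer", "owner"]
respondent_role = ["passenger", "observer"]

# Every combination of the three factors is one survey scenario.
scenarios = [
    {"pedestrians_saved": n, "decision_maker": who, "respondent_role": role}
    for n, who, role in product(pedestrians_saved, decision_maker, respondent_role)
]

print(len(scenarios))  # 8
print(scenarios[0])
```

With two levels per factor this gives eight scenario variants; adding more pedestrian counts would grow the list accordingly.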

In general, people tended to favor preserving as many human lives as possible.

The Author Nail The First Change


Source: rusbase.com/opinion/self-driving-cars-moral/
