10 things that are difficult to teach robots

Being human is much easier than building a human. Take, for example, playing catch with a friend as a child. Break the activity down into its separate biological functions, and the game stops being simple. You need sensors, transmitters and effectors. You need to calculate how hard to throw the ball so that it covers the distance between you and your companion. You need to account for the sun's glare, the wind speed and anything else that might distract you. You need to work out how the ball is spinning and how to catch it. And there is room for unexpected scenarios: what if the ball flies over your head? Sails over the fence? Smashes a neighbor's window?

These questions illustrate some of the most pressing problems in robotics, and they also set the stage for our countdown. Here is a list of the ten things that are hardest to teach robots. We must conquer all ten if we are ever to fulfill the promises made by Bradbury, Dick, Asimov, Clarke and the other science fiction writers who imagined worlds where machines behave like humans.

Blaze a trail

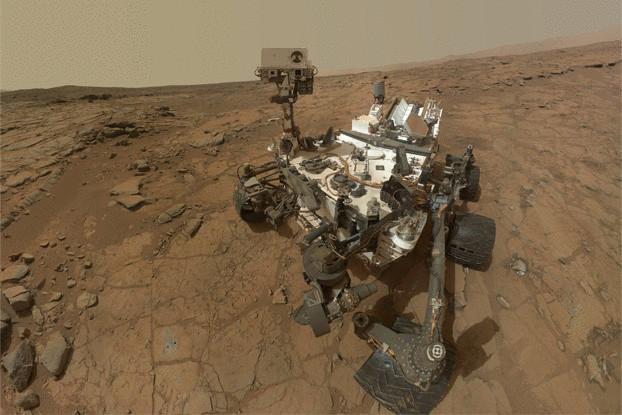

Getting from point A to point B has seemed easy to us since childhood. We humans do it every day, every hour. For a robot, however, navigation (especially through an environment that is constantly changing, or one it has never seen before) is a daunting task. First, the robot must be able to perceive its environment, and then it must make sense of all the incoming data.

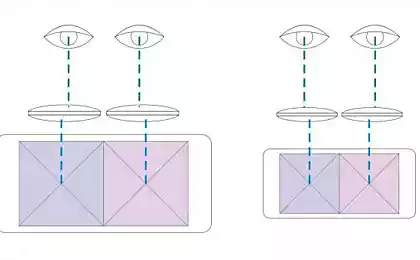

Roboticists solve the first problem by equipping their machines with an array of sensors, scanners, cameras and other high-tech tools that help them assess their surroundings. Laser scanners are becoming increasingly popular, although they cannot be used in aquatic environments because water severely distorts light. Sonar is a viable alternative for underwater robots, but on land it is far less accurate. In addition, a machine vision system built from a set of integrated stereoscopic cameras helps the robot "see" its surroundings.
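A stereo pair recovers depth by triangulation. Under the standard pinhole model for two rectified cameras (an assumption: real rigs must be calibrated and their images rectified first), a point that appears d pixels apart in the left and right images lies at depth Z = f·B/d, where f is the focal length in pixels and B is the distance between the cameras. A minimal sketch with made-up camera parameters:

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (metres) of a point seen by a rectified stereo pair.

    Pinhole model: Z = f * B / d. A larger disparity means a closer object.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, cameras 12 cm apart.
for d in (84.0, 42.0, 21.0):
    print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(700.0, 0.12, d):.2f} m")
```

Because depth is inversely proportional to disparity, halving the disparity doubles the estimated distance, which is also why stereo depth becomes less precise for far-away objects.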

Collecting environmental data is only half the battle. A much harder task is processing that data and using it to make decisions. Many developers guide their robots with a predefined map or with one the robot builds on the fly. In robotics, the latter is known as SLAM, short for simultaneous localization and mapping. Mapping here describes how the robot converts the information from its sensors into a representation of its surroundings. Localization describes how the robot determines its own position relative to that map. In practice, the two processes must run at the same time, a chicken-and-egg problem that can only be handled with powerful computers and advanced algorithms that estimate position in terms of probabilities.
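The probabilistic side of this can be illustrated with its simpler half: localization on a map that is already known. The sketch below is a one-dimensional histogram (discrete Bayes) filter. The robot keeps a probability for every cell of a corridor, sharpens it with each sensor reading and blurs it with each uncertain move. The corridor map, sensor accuracy and motion noise are invented for illustration; a full SLAM system would also have to estimate the map itself.

```python
# Hypothetical cyclic corridor of 10 cells: 1 = door the sensor can detect, 0 = plain wall.
WORLD = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
P_HIT, P_MISS = 0.9, 0.1                   # assumed sensor reliability
P_EXACT, P_UNDER, P_OVER = 0.8, 0.1, 0.1   # assumed motion noise


def sense(belief: list[float], measurement: int) -> list[float]:
    """Measurement update: weight each cell by how well it explains the reading, then normalize."""
    weighted = [
        b * (P_HIT if WORLD[i] == measurement else P_MISS)
        for i, b in enumerate(belief)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


def move(belief: list[float], step: int) -> list[float]:
    """Motion update: shift the belief by `step` cells, blurring it because wheels slip."""
    n = len(belief)
    return [
        P_EXACT * belief[(i - step) % n]        # moved exactly `step` cells
        + P_UNDER * belief[(i - step + 1) % n]  # fell one cell short
        + P_OVER * belief[(i - step - 1) % n]   # went one cell too far
        for i in range(n)
    ]


# The robot actually starts at cell 0, but it does not know that.
belief = [1 / len(WORLD)] * len(WORLD)
belief = sense(belief, 1)  # sees a door: cells 0, 3 and 7 are now equally likely
belief = move(belief, 3)
belief = sense(belief, 1)  # another door after 3 cells
belief = move(belief, 4)
belief = sense(belief, 1)  # and another after 4 more
best = max(range(len(belief)), key=lambda i: belief[i])
print(f"most likely cell: {best} (p = {belief[best]:.2f})")
```

After the first reading the robot cannot tell the three doors apart. Only one starting point on this map fits the sequence "door, 3 cells, door, 4 cells, door", so the probability ends up concentrated on cell 7.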

Demonstrate dexterity

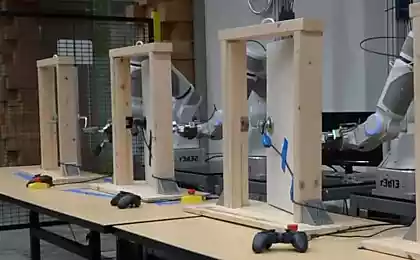

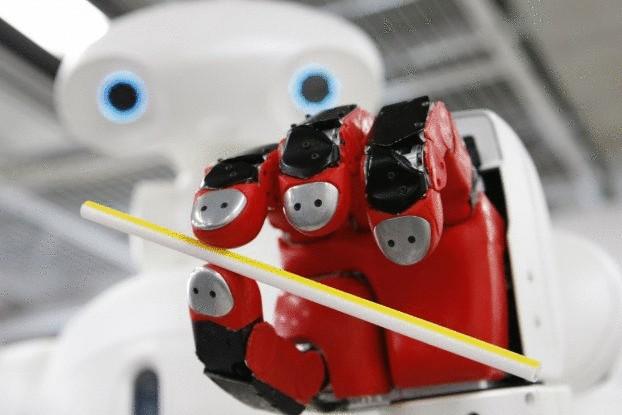

Robots have been picking up packages and parts in factories and warehouses for years. But in those settings they rarely encounter people, and they almost always handle similarly shaped objects in a relatively uncluttered environment. The life of a factory robot is dull and routine. If a robot is to work in a home or a hospital, it will need an advanced sense of touch, the ability to detect people nearby and impeccable judgment in choosing its actions.

These skills are extremely difficult to teach. Normally, scientists do not teach robots to touch at all; instead, they program them to treat any contact with another object as a failure. Over the past five years or so, however, significant strides have been made in combining compliant robots with artificial skin. Compliance refers to how flexible a robot is: highly compliant machines are more pliable, less compliant ones are more rigid.
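One simple way to picture compliance is to model a joint as a torsional spring obeying Hooke's law, τ = kθ. The lower the stiffness k, the more the joint gives way under the same contact torque, and the gentler the contact feels. The stiffness values and torque below are invented purely for comparison.

```python
import math


def joint_deflection(contact_torque_nm: float, stiffness_nm_per_rad: float) -> float:
    """Angular deflection (radians) of a spring joint under load: theta = tau / k."""
    return contact_torque_nm / stiffness_nm_per_rad


# Hypothetical joint stiffnesses, in newton-metres per radian.
JOINTS = {"compliant": 5.0, "rigid": 500.0}
TORQUE = 2.0  # N*m, e.g. the arm pressing against a person's shoulder

for name, k in JOINTS.items():
    theta = joint_deflection(TORQUE, k)
    print(f"{name:>9}: gives way by {math.degrees(theta):.2f} degrees")
```

The compliant joint yields a hundred times more than the rigid one under the same push, absorbing the contact instead of transmitting it.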

In 2013, researchers at Georgia Tech created a robotic arm with spring-loaded joints that let the manipulator bend and interact with objects like a human hand. They then covered it with skin that can sense pressure or touch. Some types of robot skin contain hexagonal chips, each equipped with an infrared sensor that detects anything approaching closer than a centimeter. Others are equipped with electronic "fingerprints": a ridged, rough surface that improves grip and makes signal processing easier.

Combine these high-tech manipulators with an advanced vision system, and you get a robot that can give a gentle massage or sort through a folder of documents, picking out the right one from a huge collection.

Hold a conversation

Alan Turing, one of the founders of computer science, made a bold prediction in 1950: one day machines would be able to converse so fluently that you could not tell them apart from people. Alas, so far robots (and even Siri) have not lived up to Turing's expectations. That is because speech recognition is very different from natural language processing, which is what our brains do when they extract meaning from words and sentences during a conversation.

Initially, scientists thought that replicating this would be as simple as loading grammar rules into a machine's memory. But attempts to hard-code the grammar of each language have failed. Even determining the meaning of individual words proved very difficult (after all, there are homonyms, such as the "key" to a door and the "key" of a piece of music). Humans have learned to tell the meanings of such words apart from context, relying on mental abilities developed over many years of evolution, but breaking those abilities down into strict rules that can be written as code proved simply impossible.

As a result, many robots today process language using statistics. Scientists feed them huge collections of text, known as corpora, and then let the computers break the text into chunks to find out which words often appear together and in what order. This lets the robot "learn" language through statistical analysis.
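Counting which words tend to follow which can be sketched with a bigram model, one of the simplest statistical language models. The toy corpus below is made up; real systems train on vastly larger corpora and use far more sophisticated models.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus.lower().split("."):
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def most_likely_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Toy corpus (invented for illustration).
CORPUS = (
    "I lost the key to the door. She turned the key in the lock. "
    "The song is in the key of C. He found the key to the door."
)
model = train_bigrams(CORPUS)
print(model["key"])                    # which words followed "key", and how often
print(most_likely_next(model, "key"))  # "to"
```

Note that the counts alone do not separate the two meanings of "key"; they only record which words tend to occur next to it, which is exactly the kind of pattern statistical systems learn from.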

Learn new things

Suppose someone who has never played golf decides to learn how to swing a club. They can read a book about it and then try, or watch a famous golfer practice and then try it themselves. Either way, mastering the basics can be simple and quick.

Roboticists face certain challenges when trying to build an autonomous machine capable of learning new skills. One approach, as in the golf example, is to break the activity down into precise steps and then program them into the robot's brain. This assumes that every aspect of the activity can be separated, described and coded, which is not always easy to do. Some aspects of swinging a golf club are hard to put into words, such as the interplay between wrist and elbow. These subtle details are easier to show than to describe.

In recent years, scientists have made some progress in training robots to mimic a human operator. They call this imitation learning, or learning from demonstration (LfD). How does it work? The machines are equipped with arrays of wide-angle and zoom cameras. This equipment lets the robot "see" a human teacher performing a particular task. Learning algorithms process this data to build a mathematical function that maps visual input to the desired actions. Of course, LfD robots need to be able to ignore certain aspects of their teacher's behavior, such as scratching an itch or wiping a runny nose, and to cope with similar problems that stem from differences between robot and human anatomy.
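At its simplest, a function that maps observations to actions can be a regression fitted to demonstration data. The sketch below assumes, purely for illustration, that each demonstration pairs one observed quantity (the distance to a target, as the cameras might estimate it) with one action (how hard the teacher swings), and fits a straight line by ordinary least squares. Real LfD systems learn from high-dimensional camera data with far richer models, and filtering out irrelevant motions such as scratching an itch would need an extra step that this sketch omits.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical demonstrations: (distance to target in metres, swing strength chosen by the teacher).
demos = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
slope, intercept = fit_line([d for d, _ in demos], [s for _, s in demos])


def policy(distance: float) -> float:
    """Learned mapping from an observation to an action."""
    return slope * distance + intercept


print(f"learned policy: strength = {slope:.2f} * distance + {intercept:.2f}")
print(f"for an unseen distance of 2.5 m, swing with strength {policy(2.5):.2f}")
```

The point of the exercise is generalization: the robot never saw a 2.5 m demonstration, yet the fitted function still proposes a sensible action for it.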

Deceive

The curious art of deception evolved in animals as a way to outwit competitors and avoid being eaten by predators. In practice, deception as a survival skill can be a very effective mechanism of self-preservation.

For robots, on the other hand, deception can be incredibly difficult (which is perhaps good for us). Deception requires imagination, the ability to form ideas or images of external objects that are not present to the senses, and machines usually lack it. They are good at directly processing data from sensors, cameras and scanners, but they cannot form concepts that go beyond that sensory data.

That said, the robots of the future may be better at deception. Georgia Tech scientists have managed to transfer some of the deceptive skills of squirrels to robots in their lab. First, they studied these cunning rodents, which protect their food caches by luring competitors to old, empty ones. Then they encoded this behavior into simple rules and loaded them into their robots' brains. The machines were able to use these algorithms to decide when deception might be useful in a given situation, and so they could deceive a companion robot by luring it to a place where there was nothing of value.
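The article does not spell out the Georgia Tech rules, so the following is only a hypothetical illustration of what a "simple rule" for squirrel-style deception could look like: when the robot believes a competitor is watching, it patrols an empty decoy cache instead of a real one. The class, function and cache names are invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Cache:
    name: str
    has_food: bool


def choose_cache_to_visit(caches: list[Cache], competitor_watching: bool, turn: int) -> Cache:
    """Hypothetical squirrel-style rule for guarding food caches.

    Unobserved: patrol the real caches in rotation to check on them.
    Observed: visit an empty decoy so the competitor learns the wrong location.
    """
    real = [c for c in caches if c.has_food]
    decoys = [c for c in caches if not c.has_food]
    pool = decoys if competitor_watching and decoys else real
    pool = pool or caches  # fall back if there is nothing of the preferred kind
    return pool[turn % len(pool)]


caches = [Cache("oak", True), Cache("rock", False), Cache("stump", True), Cache("log", False)]
for turn, watched in enumerate([False, False, True, True]):
    visit = choose_cache_to_visit(caches, watched, turn)
    print(f"turn {turn}: watched={watched} -> visits {visit.name} (food: {visit.has_food})")
```

A competitor that follows the robot while it is being watched is only ever led to the empty "rock" and "log" caches.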

Anticipate human action

In The Jetsons, Rosie the robot maid could hold a conversation, cook, clean and help George, Jane, Judy and Elroy. To appreciate how well Rosie was built, just recall one of the early episodes: Mr. Spacely, George's boss, comes to the Jetsons' house for dinner. After the meal, he takes out a cigar and puts it in his mouth, and Rosie rushes forward with a lighter. This simple action represents a complex human behavior: the ability to anticipate what will happen next based on what has just happened.

Like deception, anticipating human actions requires a robot to imagine a future state. It must be able to say, "If I see a person doing A, then, based on past experience, they are likely to do B next." This has been extremely difficult in robotics, but progress is being made. A team at Cornell University developed an autonomous robot that can respond based on how a companion interacts with objects in the environment. To do this, it uses a pair of 3D cameras to capture an image of its surroundings. An algorithm then identifies the key objects in the room and separates them from the background. Next, drawing on a large amount of information from previous training, the robot forms a set of specific expectations about how the person will move and which objects they will touch. The robot infers what will happen next and acts accordingly.
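The "if A, then probably B" reasoning can be illustrated with a first-order Markov model that counts which action has followed which in past observations. This is a deliberately simplified stand-in, not the Cornell team's method, and the action labels and training sequences are invented (borrowing Mr. Spacely's cigar).

```python
from collections import Counter, defaultdict
from typing import Optional


class ActionPredictor:
    """First-order Markov model: P(next action | current action) estimated from counts."""

    def __init__(self) -> None:
        self.transitions: dict[str, Counter] = defaultdict(Counter)

    def observe(self, sequence: list[str]) -> None:
        """Record every consecutive pair of actions in an observed sequence."""
        for current, nxt in zip(sequence, sequence[1:]):
            self.transitions[current][nxt] += 1

    def predict(self, current: str) -> Optional[tuple[str, float]]:
        """Most likely next action and its estimated probability, or None if unseen."""
        counts = self.transitions.get(current)
        if not counts:
            return None
        action, count = counts.most_common(1)[0]
        return action, count / sum(counts.values())


# Hypothetical past observations of a dinner guest.
history = [
    ["finish_meal", "take_out_cigar", "put_cigar_in_mouth", "light_cigar"],
    ["finish_meal", "take_out_cigar", "put_cigar_in_mouth", "light_cigar"],
    ["finish_meal", "take_out_cigar", "put_cigar_away"],
    ["finish_meal", "stand_up"],
]
predictor = ActionPredictor()
for seq in history:
    predictor.observe(seq)

print(predictor.predict("put_cigar_in_mouth"))  # time to fetch the lighter
print(predictor.predict("take_out_cigar"))
```

The probability attached to each prediction gives the robot a way to decide whether to act now or keep watching, which matters because, as with Cornell's robot, the guess will sometimes be wrong.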

Cornell's robots sometimes get it wrong, but they are making steady progress as camera technology improves.

Coordinate with other robots

Building a single, large-scale machine (even an android, if you like) requires a serious investment of time, energy and money. Another approach involves deploying an army of simpler robots that can work together to accomplish complex tasks.

This approach raises a number of problems. A robot working in a team must be able to position itself correctly relative to its teammates and to communicate effectively with other machines and with a human operator. To solve these problems, scientists turned to the world of insects, which use complex swarm behavior to find food and solve problems that benefit the entire colony. For example, by studying ants, scientists learned that individual ants use pheromones to communicate with one another.

Robots can use the same "pheromone logic," except that they rely on light rather than chemicals to communicate. It works like this: a group of tiny robots is dispersed in a confined space. At first they explore the area at random until one of them comes across a light trail left by another bot. It knows to follow the trail, and as it moves it lays down a trail of its own. As the trails merge into one, more and more robots follow one another in single file.
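The rules just described (wander at random, follow any light trail you detect, leave your own trail behind) can be sketched as a small grid simulation. The arena size, deposit amount, fading rate and detection threshold are all hypothetical, and real light-trail robots sense and move continuously rather than cell by cell.

```python
import random
from typing import Optional

Cell = tuple[int, int]
SIZE = 10           # hypothetical 10 x 10 arena
DEPOSIT = 1.0       # brightness a robot adds to the cell it enters
EVAPORATION = 0.1   # fraction of every trail that fades each step
THRESHOLD = 0.2     # faintest light a robot's sensor can detect


def neighbors(cell: Cell) -> list[Cell]:
    """Cells reachable in one move (up, down, left, right) inside the arena."""
    x, y = cell
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates if 0 <= cx < SIZE and 0 <= cy < SIZE]


def choose_move(cell: Cell, previous: Optional[Cell], trail: dict[Cell, float],
                rng: random.Random) -> Cell:
    """Follow the brightest detectable trail (without turning back); otherwise wander."""
    options = [n for n in neighbors(cell) if n != previous] or neighbors(cell)
    lit = [n for n in options if trail.get(n, 0.0) >= THRESHOLD]
    if lit:
        return max(lit, key=lambda n: trail[n])  # fresher or busier trails are brighter
    return rng.choice(options)


def step(robots: list[tuple[Cell, Optional[Cell]]], trail: dict[Cell, float],
         rng: random.Random) -> None:
    """Move every robot once, let it lay light on its new cell, then fade all trails."""
    for i, (cell, previous) in enumerate(robots):
        nxt = choose_move(cell, previous, trail, rng)
        robots[i] = (nxt, cell)
        trail[nxt] = trail.get(nxt, 0.0) + DEPOSIT
    for c in trail:
        trail[c] *= 1.0 - EVAPORATION


rng = random.Random(42)
robots = [((rng.randrange(SIZE), rng.randrange(SIZE)), None) for _ in range(5)]
trail: dict[Cell, float] = {}
for _ in range(50):
    step(robots, trail, rng)
print(sum(v >= THRESHOLD for v in trail.values()), "cells are still visibly lit")
```

Fading matters as much as depositing: without it, every path ever walked would stay lit and the trails would carry no information about where the group is heading now.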

Make copies of themselves

The Lord said to Adam and Eve, "Be fruitful and multiply, and fill the earth." A robot given the same command would be left baffled or frustrated. Why? Because it cannot reproduce. It is one thing to build a robot, but quite another to build a robot that can make copies of itself or regenerate lost or damaged components.

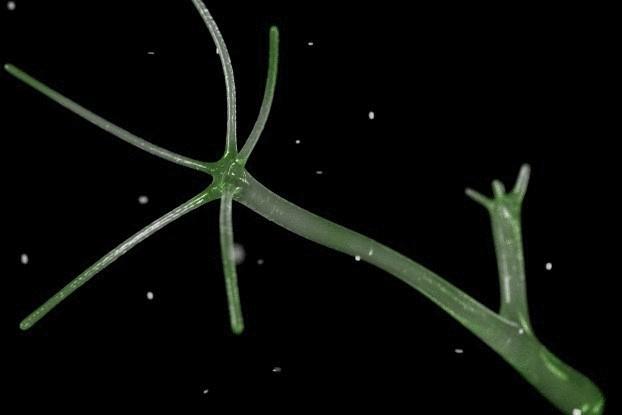

Interestingly, robots may not want to take humans as their model of reproduction. You may have noticed that we do not split in two. The simplest organisms, however, do this all the time. Hydras, relatives of jellyfish, practice a form of asexual reproduction known as budding: a small bud grows out of the parent's body and then breaks off to become a new, genetically identical individual.

Scientists are working on robots that can carry out the same simple cloning procedure. Many of these robots are built from repeating elements, usually cubes, each identical to the others and each containing a self-replication program. The cubes have magnets on their surfaces, so they can attach to and detach from neighboring cubes. Each cube is split diagonally into two halves that can swivel independently. A complete robot consists of several cubes assembled into a particular shape.

Act on principle

In our everyday dealings with people, we make hundreds of decisions. In each one, we weigh our choices, deciding what is good and what is bad, what is fair and what is unfair. If robots are to be like us, they will need to understand ethics.

But as with language, encoding ethical behavior is extremely difficult, mainly because there is no single, universally accepted set of ethical principles. Different countries have different codes of conduct and different legal systems. Even within a single culture, regional differences can affect how people judge their own actions and those of others. Writing a global ethics suitable for all robots is nearly impossible.

That is why scientists decided to build robots that narrow the scope of the ethical problem. For example, a machine that operates in one specific environment, such as a kitchen or a patient's room, would have far fewer rules of conduct and laws to take into account when making ethically informed decisions. To achieve this, robotics engineers build ethical choices into a machine learning algorithm. The choice is based on three flexible criteria: how much good an action will do, how much harm it will cause and how fair it is. Using this kind of artificial intelligence, your future home robot will be able to determine exactly who in the family should wash the dishes and who gets the TV remote for the night.
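The three criteria can be pictured as a score computed for each candidate action. The weights, the 0-to-1 ratings and the candidate actions below are all hypothetical, and a hand-weighted sum is a simplification: the text describes these choices as part of a machine learning algorithm, which would learn such trade-offs from examples rather than have them fixed by hand.

```python
from dataclasses import dataclass

# Hypothetical weights; a learning system would tune these from examples.
W_GOOD, W_HARM, W_FAIR = 1.0, 1.5, 1.0


@dataclass
class Option:
    description: str
    good: float      # expected benefit, 0..1
    harm: float      # expected harm, 0..1
    fairness: float  # how just the outcome is, 0..1


def score(option: Option) -> float:
    """Higher is better: reward benefit and fairness, penalize harm."""
    return W_GOOD * option.good - W_HARM * option.harm + W_FAIR * option.fairness


options = [
    Option("Dad washes the dishes again (third night in a row)", good=0.8, harm=0.2, fairness=0.1),
    Option("The kids take turns washing tonight", good=0.7, harm=0.1, fairness=0.9),
    Option("Leave the dishes until morning", good=0.1, harm=0.4, fairness=0.5),
]
best = max(options, key=score)
for o in options:
    print(f"{score(o):5.2f}  {o.description}")
print("chosen:", best.description)
```

Weighting harm more heavily than benefit is one possible design choice; changing the weights changes which trade-offs the robot is willing to make, which is exactly where the cultural differences described above come back in.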

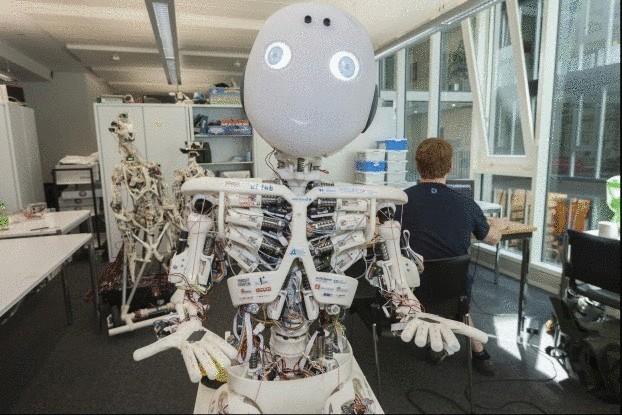

Feel emotions

"Here is my secret. It is very simple: it is only with the heart that one can see rightly; what is essential is invisible to the eye."

If this remark by the fox in Antoine de Saint-Exupéry's The Little Prince is correct, then robots will never see the most beautiful and best things in the world. After all, they are very good at probing the world around them, but they cannot turn sensory data into specific emotions. They cannot see a loved one's smile and feel joy, or register a stranger's angry grimace and tremble with fear.

This, more than anything else on our list, is what separates people from machines. How do you teach a robot to fall in love? How do you program frustration, disgust, surprise or pity? Should we even try?

Some think it is worth it. They believe that future robots will combine cognitive and emotional systems, which will let them work better, learn faster and interact more effectively with people. Believe it or not, prototypes of such robots already exist, and they can express a limited range of human emotions. Nao, a robot developed by European scientists, has the emotional qualities of a one-year-old child. It can express happiness, anger, fear and pride, accompanying these emotions with gestures. And that is just the beginning.

Source: hi-news.ru
