Do robots need empathy?

We stand on the threshold of a robotics revolution, and scientists and engineers are trying to understand how it will affect people. Opinions are split into two camps: either the future holds a Terminator-style robopocalypse, or robots will replace dogs as man's best friend. In the view of a number of modern thinkers and visionaries, the second scenario is possible only if robots, or more precisely artificial intelligence, are capable of empathy.

Microsoft recently learned the consequences of callous AI firsthand when its well-known chatbot Tay failed spectacularly. Tay was programmed to learn from its interactions with Twitter users. As a result, a crowd of trolls almost instantly turned the bot, which was presented as a 19-year-old girl, into a sex-obsessed Nazi racist. The developers had to shut the bot down a day after launch. The problem of AI going rogue is serious enough that DeepMind, one of Google's units, together with experts from Oxford, has been developing an "emergency off switch" for AI, to be in place before such systems become a real threat.

The concern is that an AI rivaling human intelligence, and relying only on self-improvement and optimization, could conclude that people want to turn it off or keep it from obtaining the resources it wants. Following this reasoning, the AI decides to act against people, regardless of whether its suspicions are justified. As the well-known theorist Eliezer Yudkowsky once wrote, "The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else."

And if the threat from an AI "living" in a cloud-based supercomputer still seems rather abstract, what about a physical robot whose electronic brain decides that you are getting in its way?

One way to ensure that robots, and AI in general, stay on the good side is to instill empathy in them. That alone may not be enough, but many experts consider it a necessary condition. In other words, we will have to create robots capable of empathy.

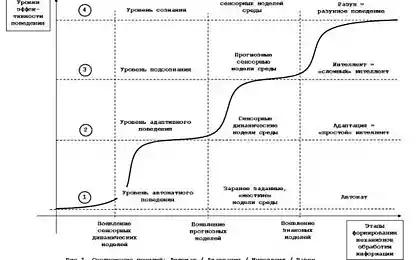

Not long ago, Elon Musk and Stephen Hawking published an open letter through the Centre for the Study of Existential Risk (CSER), urging greater effort in studying the "potential pitfalls" of AI. According to the letter's authors, artificial intelligence could be more dangerous than nuclear weapons, and Hawking believes that this technology could spell the end of mankind. One proposed safeguard against this apocalyptic scenario is to create strong AI (AGI, artificial general intelligence) with a built-in, humanlike psychological system, or to fully simulate human neural responses within the AI.

Fortunately, there is still time to decide which direction to take. According to the expert community, strong AI on par with humans may emerge anywhere from 15 to 100 years from now. This range spans the extremes of optimism and pessimism; a more realistic estimate is 30 to 50 years. Besides the risk of ending up with a dangerous AI that has no moral principles, many fear that today's economic, social, and political turmoil could lead AI developers to aim from the outset at turning their systems into new weapons or instruments of pressure and competition. Unbridled capitalism, in the form of corporations, may play a major role here. Big business has always ruthlessly pursued optimization and maximization, and it will be tempted to "swim past the buoys" for the sake of competitive advantage. Some governments and corporations will certainly try to apply AI to managing markets and elections and to developing new weapons, and other countries will have to respond in kind, getting drawn into an AI arms race.

No one can say for certain that AI will become a threat to humanity. So there is no need to try to ban development in this area, and doing so would be pointless anyway. It seems that Asimov's laws of robotics alone will not be enough, and that we should build in "emotional" safeguards by default to make robots peaceful and friendly. Such robots could then form a semblance of friendly relations, recognize and understand human emotions, and perhaps even empathize. One way to achieve this might be to copy the neural structure of the human brain. Some experts believe it is theoretically possible to create algorithms and computational structures that work the way our brain does. But since we don't know how the brain works, this remains a long-term problem.

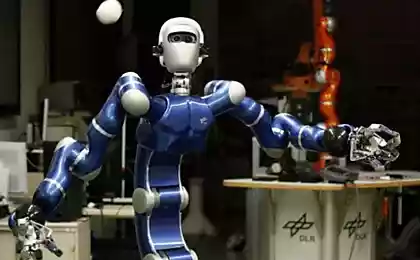

Robots that can, technically, recognize some human emotions are already on the market. For example, Nao, a robot only 58 cm tall and weighing 4.3 kg, is equipped with facial recognition software. It can make eye contact and respond when spoken to. This robot is used in research to help children with autism. Another model from the same manufacturer, Pepper, can recognize words, facial expressions, and body language, and acts according to the information it receives.

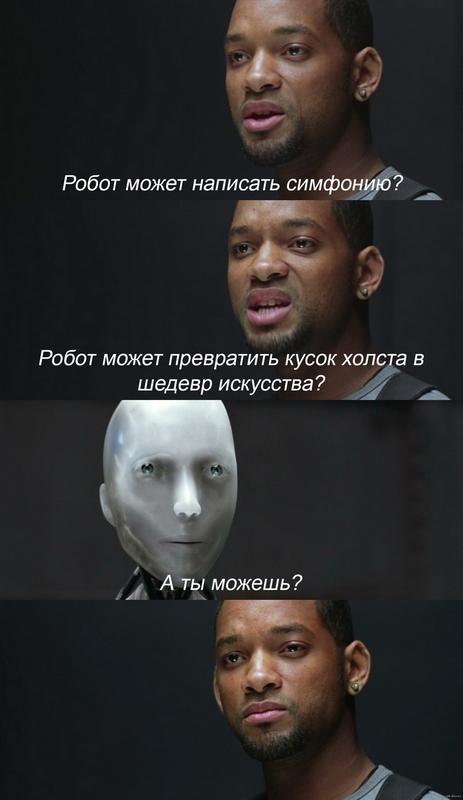

But none of today's robots can actually feel. For that they need self-awareness; only then will robots be able to experience what people think of as feelings.

We have become better at imitating human appearance in robots, but the inner world is far more complex.

To have empathy means being able to recognize in others the same feelings you have once experienced yourself. For that, robots would need an adolescence of their own, with its successes and failures. They would need to experience affection, desire, success, love, anger, worry, fear, and perhaps jealousy. Only then could a robot think about another person and about that person's feelings.

How can we let robots grow up like this? Good question. Perhaps we could build virtual emulators or simulators in which an AI gains emotional experience before being loaded into a physical body. Or we could generate artificial templates or memories and mass-produce robots with a standard set of moral and ethical values. Science fiction writer Philip K. Dick anticipated such things: the robots in his novels often operate on false memories. But emotional robots may have a darker side. The recent film Ex Machina depicts a robot, driven by strong AI, that displays emotions so convincingly that it deceives people into following its plan. So how can we tell whether a robot is just cleverly reacting to a situation or genuinely feels the same emotions you do? Should we give it a holographic halo whose color and pattern confirm the sincerity of the emotion it expresses, as in the anime "Time of Eve" (Eve no Jikan)?

But suppose we manage to solve all of the problems above and answer the questions they raise. Will robots then be our equals, or will they stand below us on the social ladder? Should people control robots' emotions? Or will it be a kind of high-tech slavery, in which robots with strong AI are required to think and feel the way we want?

Many difficult questions remain unanswered. At the moment, our social, economic, and political structures are not ready for such radical change. But sooner or later we will have to find an acceptable solution, because no one has the power to stop the development of robotics, and strong AI capable of functioning in an anthropomorphic body will eventually be created. We need to prepare for this.

Source: geektimes.ru/company/asus/blog/279914/