Artificial intelligence is not a threat to us

Creating AI will only improve our lives, experts say

The average person is afraid of robots and artificial intelligence. One could list at length the works of science fiction in which mechanical servants and AI were created and firmly occupied an important niche in our lives, but for some reason got out of control and turned against their creators.

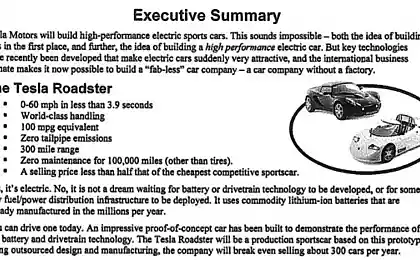

In recent years, this primal fear has been voiced openly by prominent and influential figures in science and information technology. In particular, Tesla Motors CEO Elon Musk said that AI is "potentially more dangerous than nuclear weapons", and British physicist Stephen Hawking called AI "the greatest mistake of mankind".

Oren Etzioni, head of the Allen Institute for Artificial Intelligence, has studied artificial intelligence for the past 20 years, and he is completely calm about it. Other experts in the field have expressed similar views.

According to him, the well-known gloomy AI scenarios are wrong for one simple reason: they confuse intelligence with autonomy, and assume that a computer will start using its own will to set its own goals, and will use its access to databases and computing power to defeat people.

Etzioni believes that these two things are far apart. A calculator in a person's hands does not start making calculations of its own; it always remains a tool that simplifies calculations which would otherwise take too long to do by hand.

Similarly, artificial intelligence is a tool for kinds of work that are either too complex or too expensive for us: analyzing large amounts of data, medical research, and so on. AI requires human intervention and oversight.

Frightening autonomous programs do exist: cyber-weapons and computer viruses. But they have no intelligence. Even the most "intelligent" software has a very narrow niche of applications, and a program that can beat a human at an intellectual game, as IBM's Watson did, has zero autonomy.

Watson feels no urge to play other television quiz shows; it has no consciousness. As John Searle put it, Watson did not even realize that it had won.

Arguments against AI always rely on hypotheticals. For example, Hawking says that the development of full artificial intelligence could spell the end of the human race.

The problem with these sentiments is that the emergence of full artificial intelligence in the next twenty-five years is less likely than the destruction of mankind by an asteroid striking Earth.

We have feared artificial servants ever since the story of Frankenstein's monster, and, if you believe Isaac Asimov, we are beginning to experience the condition he called the Frankenstein complex.

Instead of fearing that technology might turn against us, we should focus on how AI can improve our lives.

For example, a paper in the Journal of the Association for Information Science and Technology states that the global output of scientific data doubles every two years. Even a well-intentioned specialist can no longer keep up with everything. Search engines return terabytes of data that a person could not get through in a lifetime.

That is why scientists are working on AI that will answer, for example, the question of how a particular drug affects the body of middle-aged women, or at least narrow down the number of articles one has to read to find the answer. We need software that monitors scientific publications and flags the important ones, not on the basis of keywords but on the basis of understanding the information.

Etzioni notes that we are at a very early stage in the development of artificial intelligence: current systems cannot even read elementary-school textbooks, programs cannot be trusted to look after a ten-year-old child, and they cannot even understand the sentence "I threw the ball into the box, and it broke."

This work involves overcoming many difficulties, and the skeptics overlook the fact that for many years to come AI will be weaker than a child. Cyber-weapons are outside the scope of this discussion, because they have nothing to do with artificial intelligence.

Gary Marcus, professor of psychology and neuroscience at New York University and head of Geometric Intelligence, echoes Etzioni. He also says that artificial intelligence is still too weak to justify such far-reaching conclusions, but he adds a note on the cost of bugs.

A program does not need a mind bent on malice to cause billions of dollars in damage and kill people: even an ordinary trading robot can do harm because of errors built into it.

An error in the software controlling a driverless car can lead to accidents and even deaths, but this does not mean we should immediately abandon research in this area: such programs will be able to save hundreds of thousands of lives a year.

Still, the alarmists are right about something. Over the past decade, computers have surpassed humans at more and more new tasks, and it is impossible to predict what level of restrictions would be needed to minimize the risks of their activity. The problem is not machines taking over the world; the problem is the errors built into AI.

According to Rob Smith, head of Pecabu, in his article "What artificial intelligence is not", the term "artificial intelligence" will soon become another meaningless buzzword, attached to everything just for the sake of using it. This has already happened to "cloud" and "big data".

And that is not the only problem. Society sees AI as some kind of absolute miracle of technology, powerful and expensive.

It should be remembered that AI is not the canonical red light of HAL 9000 or the evil Skynet. Artificial intelligence has no consciousness. It is just a computer program "smart" enough to perform tasks that would normally require human analysis. It is not a cold-blooded killing machine.

Unlike a human, AI is not a living being, even if a program can perform tasks usually solved by people. Programs have no feelings, desires or aspirations other than those we build into them or those they can form on their own from the input data.

Like a person, artificial intelligence can be given a task, but its nature is defined by the reasons for which it was created. The role of a "smart" program is determined solely by its creators, and it is unlikely that anyone will start writing code aimed at subjugating humanity or at giving a program a sense of self.

Only in science fiction does artificial intelligence want to multiply and replicate itself. Granted, one can create a program with harmful goals, but the question is whether such a program is AI at all.

Finally, computer intelligence is not a single entity but a community of specialized programs. Smith points out that in the near future AI will most likely be built as a network of routines providing computer vision, language communication, machine learning, movement, and so on. An artificial intelligence program is not a "he", "she" or "it", but a "they".

We are decades away from the singularity that Elon Musk fears so much. Today IBM, Google, Apple and other companies are developing a new generation of applications that can only partially replace the human element in many repetitive, time-consuming and dangerous jobs. We do not need to cultivate fear of these programs; they will only improve our lives. The only real danger lies in the people who create artificial intelligence. How do you feel about artificial intelligence?

Source: geektimes.ru/post/242993/
