Why people, not robots, should drive cars

Recently my colleague Ilya Hel wrote a great article about why people should not drive cars and should hand that job over to the car itself. He cited statistical studies showing that people are very often distracted from the road and busy with their own things: texting on their phones, checking e-mail, chatting on social networks, taking selfies and even recording video. The problem really exists, and it must be solved somehow.

No one will dispute that all these factors increase the share of accidents and, as a result, the number of deaths on the road. Is there a way out? Is the best solution to take the wheel away from people and hand the task of driving to the car's computer? Will that reduce the number of accidents? And, in general, how do we do all this without destroying the world along the way?

In this article I will try to explain my thoughts on the matter and offer arguments that, I hope, will rule out, or at least seriously complicate, the choice in favor of self-driving cars in the near future.

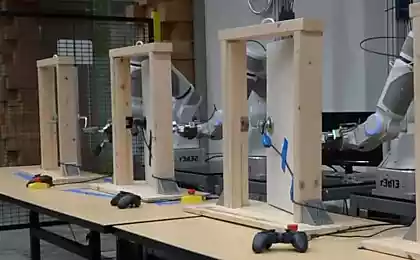

The reason

The transition to self-driving cars is happening gradually, by equipping vehicles with various systems that make everyday driving easier: cruise control systems that work the gas and brake pedals for you, easing the load on your legs during long out-of-town trips; sensors that keep a safe distance between your car and other road users; and, finally, Parktronic parking assistance, which helps drivers get their cars into a parking space. Using this last "option" as an example, I'll try to explain why I personally would not trust driverless cars to monitor the situation on the road. At least not in the near future.

Let's look at two recent incidents. The first involves a car from the Swedish group Volvo, which has been working for quite a long time on a concept car that could make parking easier for its owner. Let's just say there is still work to be done.

At a self-parking demonstration held in the Dominican Republic, an "enraged" XC60 crossover nearly sent its operator to the hospital. Fortunately, the operator escaped with a mild shock.

The second case involved a Ford F-150 whose owner let the vehicle perform a parallel parking maneuver on its own. Unfortunately, the attempt ended in failure: the brand-new pickup truck crashed into a parked car.

At first glance, the two accidents look quite similar. In the Volvo case, where the car ended up "parking" on its operator, the cause was that someone forgot to put the car into "City Safety" mode, which strictly limits speed, and as a result the car abruptly lurched forward.

In the Ford case, the driver simply did not press the brake pedal in time. The point is that with the automatic parking system the driver keeps control of the gas and brake pedals; at that moment the automation is responsible only for the steering wheel. Pressing the accelerator too hard and failing to react to what is happening behind the car can lead to the result we saw above.

What conclusions can be drawn from this? Were the electronics at fault in these accidents? No. Do the electronics bear responsibility for what happened? No. The only one at fault and responsible in these cases is a human. The human factor is the only reason we cannot build truly safe self-driving cars.

Consequences

Now imagine that instead of a parked car there had been people behind the vehicle. Better yet, imagine that your brand-new self-driving car, equipped with "the latest technology", all sorts of parking sensors, boundary-detection systems, speed-mode selection and very complex software responsible for running all that equipment, was put together by people who are not immune to mistakes, who "just forgot" or simply "did not have time" to get everything right by the deadline.

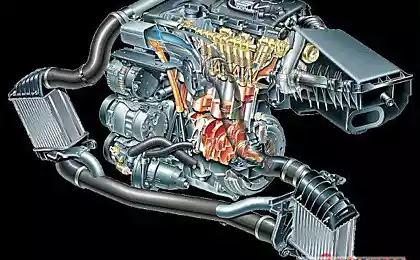

Imagine that it is no longer just a matter of parking. Imagine that the reach of the human factor now extends not only to the steering wheel but to the whole car. Imagine that one small software bug that went unnoticed in production can trigger a chain of events ending in a serious accident. Imagine that your car is driven not by you but by a basic set of rules and behaviors for the road, programmed with the required safe-distance values and a range of solutions for various traffic situations. Have you pictured it? Then I can say your imagination is in fine shape. Perfect software does not exist and will not exist as long as it is created by humans. Even DARPA, which wants to fix this problem, will most likely fail.

Is there a solution?

How can we realize the vision of a world where self-driving cars move along the roads? We need vehicles equipped with the various sensors I described above, and a computer system that manages all these sensors and is connected to a central database, which uses traffic cameras and satellite navigation to monitor the situation on the roads, make decisions and send signals for the cars to carry out. If such a system is created by humans, then, once again, human error cannot be ruled out.

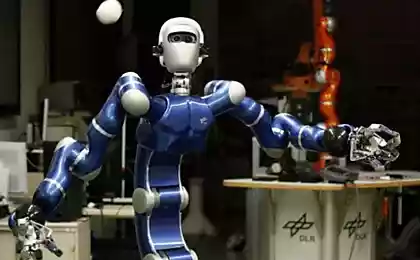

How can the human error factor be completely eliminated from such a complex, multi-tiered system of driverless transport? And is it possible in principle? At the moment, it cannot be done. Why? Because creating the perfect self-driving car would require an equally perfect, fully autonomous robot created by an artificial intelligence that is itself free of human error. Such a perfect creation would draw only on the absolute root of all knowledge about the world and would possess impeccable logic and precision. Every car in the chain would be part of this intelligence, and decisions on how to avoid an accident would be made by the car, not by people.

But let's imagine that an accident is unavoidable and the AI faces a choice. Remember the movie "I, Robot" and Detective Spooner's story of how he lost his arm and gained a cybernetic one? Many will say it is "a tale for children." Perhaps. But the choice made in it was clearly rational.

Imagine that your fate and the fate of others will be in the hands of machines that make their choice based not on moral principles but on the percentage chance of survival. Seen from that angle, I fully understand Elon Musk, who has repeatedly spoken out against AI.

"Playing with AI is like playing with demons," said Musk.

The main road safety problem lies in us. And if we want to solve it, we need to start now. Why? Because otherwise the roads will soon be traveled not by people but by robots. Robots with impeccable logic that has nothing to do with human qualities, principles and morality. And what about people? People, in that case, will not be needed. Exactly.

Source: hi-news.ru
