
Drones, or when will we give robots a sense of morality?

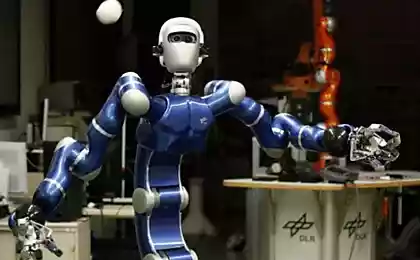

2015 is the year of the X-47B, a new unmanned aircraft developed by Northrop Grumman that will be tested on a US warship. The tests must show whether it can do everything existing aircraft can: take off and land safely, remain on standby, and clear the deck for the next aircraft in just 90 seconds. It is not surprising that a military drone has drawn so much attention, since combat aviation around the world has been developing rapidly in recent years.

Among existing autonomous vehicles, this super-drone is probably the most advanced, perhaps outshining even Google's self-driving car. These two examples of artificial intelligence (AI) prompted a group of influential scientists to sign an open letter on the subject.

The letter, signed by scientists such as Professor Stephen Hawking and entrepreneurs such as Elon Musk, warns that AI is advancing toward human-like intelligence so quickly that we do not fully understand its potential. AI systems must do what we want them to do. But how will people prosper when many jobs, including those in banking and finance, are automated? Does the future promise endless leisure, or simply unemployment?

Many other questions also need answers today. For example, who should be held responsible if a self-driving car causes an accident? Legal clarity is essential for consumer confidence, on which the future of the industry depends.

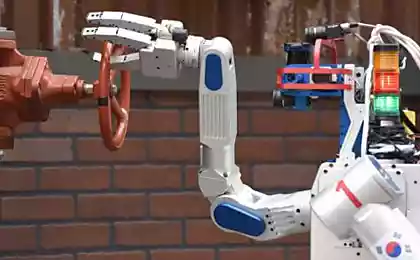

If we are going to give robots morals, what kind of morality should we build into them? And what about sabotage? The idea of a moral machine is fascinating because, in an era when machines can already do many of the things humans do (drive a car, fly an airplane, run, recognize images, speak, and even translate), some competencies, such as moral reasoning, still elude them.

Indeed, as automation increases, this gap could itself become immoral. For example, if drones become our emissaries on the battlefield, they may have to make decisions that humans cannot foresee or encode in a program. Some of these may be moral decisions, such as choosing to save two lives.

P.S. And remember: by changing our consciousness, together we change the world!

Source: mirnt.ru/aviation/bespilotnyy-samolet