
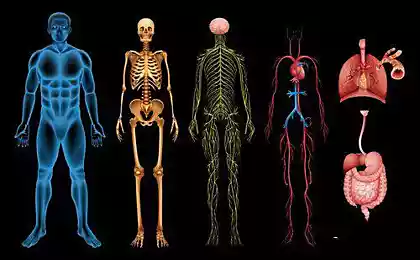

Legs, wings... the main thing is the tail! The human body from the point of view of Intel RealSense

A programmer's work is interesting for its diversity. Depending on the task, you may delve into modeling climate processes, the biology of cell division, stellar physics... But there is another path as well: even the most ordinary facial expression opens up an abyss of nuances in front of you. Developers who encounter Intel RealSense technology for the first time are invariably surprised by how complex it is to recognize and track the position of hands or a face, because our brains do this almost without our participation. Which features of our anatomy must be taken into account when designing natural interfaces, and what progress have the RealSense creators made along this path?

At the end of the post: an invitation to the Intel RealSense Meet Up in Nizhny Novgorod on April 24. Nizhny Novgorod, don't miss it!

Look at your hands and try bending different fingers. Notice that when they bend, they depend on one another. It is therefore sufficient to track only two joints to achieve realistic bending of four fingers (all except the thumb). Only the index finger can bend without the other fingers bending along with it, so the tracking algorithm needs separate joints for the index finger. For the other fingers things are simpler: if you bend the middle finger, the ring finger and little finger bend too; if you bend the ring finger, the middle finger and little finger bend with it; if you bend the little finger, the middle and ring fingers bend as well.
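To make the coupling concrete, here is a minimal sketch (not the RealSense SDK's internal model) in which two tracked quantities stand in for the "two joints": an independent bend for the index finger and one shared bend for the middle, ring and little fingers. The names `FingerBends`, `estimate_coupled` and `expand_bends` are hypothetical, bends are normalized to 0.0 (straight) through 1.0 (fully bent), and averaging whichever coupled fingers happen to be observed is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FingerBends:
    """Bend of each non-thumb finger: 0.0 = straight, 1.0 = fully bent."""
    index: float
    middle: float
    ring: float
    little: float


def _clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def estimate_coupled(middle: Optional[float] = None,
                     ring: Optional[float] = None,
                     little: Optional[float] = None) -> float:
    """Estimate one shared bend for the middle, ring and little fingers.

    Because these three bend together, any observed one is enough;
    observed values are averaged (an assumption of this sketch).
    """
    observed = [v for v in (middle, ring, little) if v is not None]
    if not observed:
        raise ValueError("none of the coupled fingers was observed")
    return _clamp01(sum(observed) / len(observed))


def expand_bends(index_bend: float, coupled_bend: float) -> FingerBends:
    """Turn the two tracked quantities into bends for all four fingers."""
    c = _clamp01(coupled_bend)
    return FingerBends(index=_clamp01(index_bend), middle=c, ring=c, little=c)


print(expand_bends(0.1, estimate_coupled(ring=0.7)))
# FingerBends(index=0.1, middle=0.7, ring=0.7, little=0.7)
```

Storing two numbers instead of four is the whole point: the index finger keeps its own degree of freedom, while the other three are driven by a single value.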

Let us continue studying our hands. The angle through which a particular phalanx can bend depends on the length of the phalanx (rather than on the joint). The top phalanx of the middle finger can bend through a smaller angle than the middle phalanx of the same finger, and the middle phalanx bends through a smaller angle than the lower phalanx. Note also that tracking a child's hand is much harder than tracking an adult's, because it is difficult to obtain data for small hands and to interpret it accurately.
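One way a tracker could use such limits is to clamp noisy angle estimates to an anatomically plausible range. The sketch below assumes per-phalanx limits in degrees; the numbers in `PHALANX_LIMITS_DEG` are hypothetical and chosen only to respect the ordering described above (top < middle < lower), not measured values or SDK constants.

```python
from typing import Dict

# Hypothetical flexion limits in degrees, keyed by phalanx. Illustrative only:
# they follow the ordering in the text (top < middle < lower) and are not
# measured data or values taken from the RealSense SDK.
PHALANX_LIMITS_DEG: Dict[str, float] = {
    "distal": 70.0,     # top phalanx
    "middle": 90.0,     # middle phalanx
    "proximal": 100.0,  # lower phalanx
}


def clamp_to_anatomy(raw_angles: Dict[str, float]) -> Dict[str, float]:
    """Clamp noisy per-phalanx angle estimates to [0, limit].

    Raises KeyError for a phalanx name with no defined limit.
    """
    return {
        name: max(0.0, min(PHALANX_LIMITS_DEG[name], angle))
        for name, angle in raw_angles.items()
    }


print(clamp_to_anatomy({"distal": 85.0, "middle": 40.0, "proximal": -3.0}))
# {'distal': 70.0, 'middle': 40.0, 'proximal': 0.0}
```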

Currently, RealSense technology can simultaneously track 22 joints in each of two hands (by the way, they do not have to be a right and a left hand, and they may belong to different people). In any case, the computer knows which hand is in front of it. An important step forward was eliminating the calibration phase, although in some difficult cases (again, for example, if a child is facing the camera) the system asks for an initial calibration. Beyond that, the hand is simply tracked by its key points, and the hand model can be filled in on its own if the hand has moved out of the field of view or is poorly lit. Where conditions allow, the hand is separated from the background behind it, even if that background changes from time to time.
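The data an application receives can be pictured as up to two hands, each with 22 joints. The sketch below is a hypothetical model, not the SDK's API: it assumes a layout of wrist + palm center + four points on each of the five fingers (2 + 5 × 4 = 22) with invented joint names, and uses a simple "hold the last known position" rule as a stand-in for the SDK's own way of completing a hand that is partly out of view or poorly lit.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Point3 = Tuple[float, float, float]
MAX_HANDS = 2  # up to two hands are tracked at once

# Hypothetical joint names: wrist + palm center + 4 points on each of 5 fingers = 22.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")
JOINT_NAMES: List[str] = ["wrist", "palm_center"] + [
    f"{finger}_{part}" for finger in FINGERS for part in ("base", "jt1", "jt2", "tip")
]


@dataclass
class HandFrame:
    hand_id: int                          # stable id across frames
    side: str                             # "left" or "right"; two hands may share a side
    joints: Dict[str, Optional[Point3]]   # None = joint not observed this frame


def fill_missing(current: HandFrame, previous: Optional[HandFrame]) -> HandFrame:
    """Fill unobserved joints with their last known position (hold-last-value)."""
    if previous is None:
        return current
    filled = {
        name: pos if pos is not None else previous.joints.get(name)
        for name, pos in current.joints.items()
    }
    return HandFrame(current.hand_id, current.side, filled)


def update(history: Dict[int, HandFrame], hands: List[HandFrame]) -> List[HandFrame]:
    """Process one camera frame of up to MAX_HANDS hands, updating per-hand history."""
    if len(hands) > MAX_HANDS:
        raise ValueError(f"at most {MAX_HANDS} hands are tracked")
    result = [fill_missing(h, history.get(h.hand_id)) for h in hands]
    for h in result:
        history[h.hand_id] = h
    return result
```

Keying the history by `hand_id` rather than by `side` reflects the point above: two tracked hands need not be a left and a right one.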

The accuracy with which the position of some parts of the hand is determined relative to others makes very interesting ways of conveying information possible. For instance, you can use a relative measure of how open the palm is, from a fully open hand to a tightly closed fist, on a scale from 0 to 100. You must admit this is somewhat similar to sign language. By the way, implementing classical sign language opens up another important and much-needed area of application for RealSense: the rehabilitation of people with disabilities. It is hard to imagine a more humane use of computer technology...
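A relative openness value like this can be derived from joint positions. The following is a minimal sketch, not the SDK's own calculation: it assumes openness is the mean palm-to-fingertip distance mapped linearly between two per-user reference distances (`fist_dist` and `open_dist`, both hypothetical parameters). In this sketch 0 means a closed fist and 100 a fully open palm; that direction is a convention chosen here.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def openness(palm_center: Point3, fingertips: Sequence[Point3],
             fist_dist: float, open_dist: float) -> int:
    """Map the mean palm-to-fingertip distance onto a 0-100 openness scale.

    fist_dist / open_dist: hypothetical per-user reference distances (same
    units as the points) for a closed fist and a fully open palm.
    Convention in this sketch: 0 = fist, 100 = open palm.
    """
    if open_dist <= fist_dist:
        raise ValueError("open_dist must exceed fist_dist")
    if not fingertips:
        raise ValueError("at least one fingertip is required")
    mean = sum(math.dist(palm_center, tip) for tip in fingertips) / len(fingertips)
    t = (mean - fist_dist) / (open_dist - fist_dist)
    return round(100 * max(0.0, min(1.0, t)))  # clamp, then scale to 0..100


palm = (0.0, 0.0, 0.0)
tips = [(0.05, 0.0, 0.0)] * 5          # every fingertip 5 cm from the palm
print(openness(palm, tips, 0.03, 0.08))  # 40
```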

We now turn to gesture recognition. Intel RealSense currently supports 10 ready-made gestures, shown in the picture. Recognition can be static (fixed poses) or active (poses in motion). Of course, nothing prevents switching from one mode to the other: for example, a static open hand becomes active when you wave it. Gesture recognition is an order of magnitude more complex than simple motion tracking, because here it is necessary not only to calculate the positions of points but also to compare them with certain movement patterns. So training cannot be avoided, and both sides must be trained: the computer must learn to recognize your movements, and you must learn to make the right ones.
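Comparing a pose with stored patterns can be illustrated with nearest-template matching. This is a swapped-in, deliberately simple technique, not the SDK's recognizer: the feature vector (per-finger bends in the order thumb, index, middle, ring, little), the three `TEMPLATES` and the `max_dist` threshold are all hypothetical, and they are not the SDK's 10 built-in gestures. `learn_template` shows the "training" side in its crudest form: averaging a user's recorded samples.

```python
import math
from typing import Dict, List, Optional, Sequence

# Hypothetical templates: per-finger bends (thumb, index, middle, ring, little),
# 0.0 = straight, 1.0 = fully bent.
TEMPLATES: Dict[str, List[float]] = {
    "open_palm": [0.0, 0.0, 0.0, 0.0, 0.0],
    "fist":      [1.0, 1.0, 1.0, 1.0, 1.0],
    "v_sign":    [1.0, 0.0, 0.0, 1.0, 1.0],
}


def learn_template(samples: Sequence[Sequence[float]]) -> List[float]:
    """'Train' a template as the component-wise mean of recorded samples."""
    if not samples:
        raise ValueError("need at least one sample")
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def classify_static(features: Sequence[float],
                    templates: Dict[str, Sequence[float]],
                    max_dist: float) -> Optional[str]:
    """Return the nearest template's name, or None if none is within max_dist."""
    best_name, best_d = None, math.inf
    for name, tmpl in templates.items():
        d = math.dist(features, tmpl)  # Euclidean distance between poses
        if d < best_d:
            best_name, best_d = name, d
    return best_name if best_d <= max_dist else None


print(classify_static([0.9, 0.1, 0.05, 0.95, 1.0], TEMPLATES, 0.5))  # v_sign
print(classify_static([0.5] * 5, TEMPLATES, 0.5))                    # None
```

Returning `None` for poses far from every template matters in practice: an ambiguous hand should produce no gesture rather than the least-bad guess.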

The clearer your gesture, the clearer it will be to the machine. At first you may feel some psychological discomfort: in real "human" life we almost never hold gestures still; we produce them continuously, one after another. RealSense needs an initial phase, a final phase and a sustained action between them (the duration of the gesture, by the way, can also be used as a parameter). Depending on the situation, a dynamic motion is defined by displacement or by time.
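The initial phase, sustained phase and final phase can be sketched as a segmentation pass over a stream of per-frame pose labels. This is a hypothetical illustration, not the SDK's gesture engine: frames are assumed to be `(time_s, pose_label, x)` tuples with a one-dimensional hand position `x`, and the thresholds `min_duration` and `min_displacement` are invented parameters. A gesture is accepted by time or by (net) displacement, mirroring the two criteria above, and its duration is kept as a parameter.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass
class GestureEvent:
    label: str
    start: float          # seconds, initial phase
    end: float            # seconds, last frame before the final phase
    displacement: float   # net movement along x (1-D simplification)

    @property
    def duration(self) -> float:
        return self.end - self.start


def segment(frames: Iterable[Tuple[float, Optional[str], float]],
            min_duration: float = 0.3,
            min_displacement: Optional[float] = None) -> List[GestureEvent]:
    """Split (time, pose_label, x) frames into gestures.

    A gesture starts when a label appears, is sustained while the label is held,
    and ends when the label changes or disappears (None). It is kept if it lasted
    at least min_duration or, when given, moved at least min_displacement.
    """
    events: List[GestureEvent] = []
    cur_label: Optional[str] = None
    start_t = start_x = last_t = last_x = 0.0

    def close() -> None:
        ev = GestureEvent(cur_label, start_t, last_t, abs(last_x - start_x))
        long_enough = ev.duration >= min_duration
        far_enough = min_displacement is not None and ev.displacement >= min_displacement
        if long_enough or far_enough:
            events.append(ev)

    for t, label, x in frames:
        if label != cur_label:
            if cur_label is not None:
                close()  # final phase of the previous gesture
            cur_label, start_t, start_x = label, t, x
        last_t, last_x = t, x
    if cur_label is not None:
        close()
    return events
```

For example, a fist held from 0.0 s to 0.5 s and then released yields one event with `duration == 0.5`, while a 50 ms flicker of another pose is discarded as too short.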

As you can see, natural interfaces are full of room for misunderstanding. For instance, gestures that we would call "similar" will most likely be interpreted by the computer as the same gesture, and designers should avoid such situations. Further, the application must constantly make sure that the person in the frame has not moved out of it and, where appropriate, issue warnings. The RealSense camera, with its own characteristics, adds many more nuances... But solving simple problems is no fun, is it? Here we tackle complicated ones.
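Both design concerns can be checked in code. The sketch below is hypothetical and not part of the SDK: `too_similar` is a design-time check that flags pairs of gesture templates closer than an assumed `min_separation` (reusing the idea of feature-vector templates), and `edge_warning` reports which image borders a tracked point in pixel coordinates is approaching, with an assumed warning margin of 10% of the frame size. Edges are reported in image terms because whether "left" means the user's left depends on whether the preview is mirrored.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def too_similar(templates: Dict[str, Sequence[float]],
                min_separation: float) -> List[Tuple[str, str]]:
    """Return template pairs closer than min_separation (likely to be confused)."""
    return [
        (a, b)
        for a, b in combinations(sorted(templates), 2)
        if math.dist(templates[a], templates[b]) < min_separation
    ]


def edge_warning(x: float, y: float, width: int, height: int,
                 margin: float = 0.1) -> List[str]:
    """List the image edges a tracked point is approaching.

    margin: fraction of the frame size treated as a warning zone (assumed value).
    Returns ["outside"] if the point has already left the frame.
    """
    if not (0 <= x < width and 0 <= y < height):
        return ["outside"]
    edges = []
    if x < width * margin:
        edges.append("left")
    if x >= width * (1 - margin):
        edges.append("right")
    if y < height * margin:
        edges.append("top")
    if y >= height * (1 - margin):
        edges.append("bottom")
    return edges


print(edge_warning(630, 470, 640, 480))  # ['right', 'bottom']
```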

Next time, if we get the chance, we'll talk about face detection. In the meantime, we take this opportunity to invite all Nizhny Novgorod programmers interested in Intel RealSense technology to an informal meeting with specialists, which will take place on Friday, April 24, at st. Magistrate, Building 3.

Articles from the Intel Developer Zone (IDZ) used in writing this post:

Working with Hands / Gestures
Tips and Tricks for developing Apps with the Intel RealSense SDK 2014 R1
Tips and recommendations for using Unity with the Intel RealSense SDK 2014

Source: habrahabr.ru/company/intel/blog/256167/
