CERN plans to increase its computing capacity to 150,000 cores

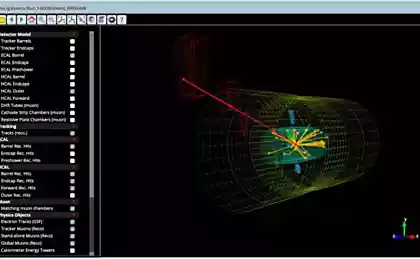

Paris, 1989: work begins on one of the greatest and most expensive creations of modern times, the Large Hadron Collider. That is certainly a feat, but the installation, which forms a ring almost 27 kilometers long and runs more than 90 meters underground on the Franco-Swiss border, is useless without huge computing power and an equally huge data store.

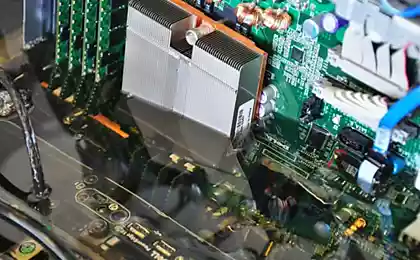

The IT team of the European Organization for Nuclear Research (CERN) delivers this computing power through four clouds built on OpenStack, open-source cloud software that is quickly becoming the industry standard for building clouds. CERN currently has four OpenStack clouds located in two data centers: one in Meyrin, Switzerland, and the other in Budapest, Hungary.

The largest cloud, in Meyrin, contains about 70,000 cores on 3,000 servers; the other three clouds contain 45,000 cores in total. In addition, CERN's Budapest site will be connected to the headquarters in Geneva by two 100 Gbit/s links.
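As a quick sanity check on these figures, the sketch below adds up the core counts quoted above and compares them with the 150,000-core target from the headline. The constant and function names are illustrative, and the per-server average is only a rough estimate derived from the rounded numbers in the text.

```python
# Figures quoted in the article (rounded); all names here are illustrative.
MEYRIN_CORES = 70_000        # largest cloud, in Meyrin
MEYRIN_SERVERS = 3_000
OTHER_CLOUDS_CORES = 45_000  # the other three clouds combined
TARGET_CORES = 150_000       # planned capacity from the headline


def capacity_summary():
    """Return a few derived numbers for the quoted capacity figures."""
    total = MEYRIN_CORES + OTHER_CLOUDS_CORES
    return {
        "total_cores": total,
        "cores_per_server_meyrin": MEYRIN_CORES / MEYRIN_SERVERS,
        "cores_to_add": TARGET_CORES - total,
        "growth_percent": 100 * (TARGET_CORES - total) / total,
    }


if __name__ == "__main__":
    for key, value in capacity_summary().items():
        print(f"{key}: {value:,.1f}")
```

On these numbers the four clouds hold 115,000 cores today, so reaching the target means adding roughly 35,000 more.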

CERN began building its cloud environment back in 2011 with Cactus, an early release of the open-source OpenStack software. The clouds went into production on the OpenStack Grizzly release in July 2013. Today all four clouds run the ninth release of the OpenStack platform, named Icehouse. CERN is currently preparing to bring approximately 2,000 additional servers online, which will significantly increase the computing power of the cloud.

Raising the energy of particle collisions at the LHC from 8 TeV to 13-14 TeV means the collider will generate more data than it does now. Over the entire period of the experiments more than 100 petabytes of data have been collected, 27 of them this year alone. In the first quarter of 2015 this figure is planned to rise to as much as 400 petabytes per year, and the cloud has to be ready for it.

Architecturally, the CERN cloud is a single system spread across two data centers. Each data center, in Switzerland and in Hungary, has clusters, compute nodes and controllers for those clusters. The cluster controllers report to the main controller in Switzerland, which in turn distributes the data flow between two load balancers.
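To make the hierarchy easier to picture, here is a minimal sketch of a main controller that forwards placement requests to per-cluster controllers in two sites. It is a toy model, not OpenStack code: the class names, the cluster names and the "most free cores" placement rule are all assumptions made for illustration, and the load balancers are left out.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    """A cluster with its own controller (hypothetical model)."""
    name: str
    site: str
    free_cores: int

    def schedule(self, cores: int) -> bool:
        """Reserve cores in this cluster if enough are free."""
        if cores > self.free_cores:
            return False
        self.free_cores -= cores
        return True


@dataclass
class MainController:
    """Top-level controller that delegates to the cluster controllers."""
    clusters: List[Cluster]

    def place(self, cores: int) -> Optional[str]:
        """Send the request to the cluster with the most free cores."""
        candidates = [c for c in self.clusters if c.free_cores >= cores]
        if not candidates:
            return None  # no cluster can fit the request
        best = max(candidates, key=lambda c: c.free_cores)
        best.schedule(cores)
        return best.name


if __name__ == "__main__":
    main = MainController([
        Cluster("meyrin-1", "Meyrin", free_cores=64),
        Cluster("budapest-1", "Budapest", free_cores=32),
    ])
    for request in (16, 40, 16, 100):
        print(request, "->", main.place(request))
```

The point of the split is that callers only talk to the main controller, while each cluster keeps track of its own resources.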

An OpenStack cloud is never built from the components of the OpenStack suite alone, and CERN's cloud is no exception. Other open-source components are used alongside it:

- Git: a version control system.

- Ceph: a distributed object store that runs on commodity servers.

- Elasticsearch: a distributed real-time search and analytics engine.

- Kibana: a visualization engine for Elasticsearch.

- Puppet: a configuration management utility.

- Foreman: a tool for provisioning and monitoring server resources.

- Hadoop: a distributed computing framework used to analyze large amounts of data on the servers of a cluster.

- Rundeck: a job scheduler.

- RDO: a set of packages for deploying OpenStack clouds on Red Hat Linux distributions.

- Jenkins: a continuous integration tool.

For configuration management the choice was between Chef and Puppet. Both tools are mature and integrate well with other software. However, Puppet's strict declarative approach was considered better suited to this kind of work.
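The idea behind a declarative approach can be sketched in a few lines: you describe the state you want, and a tool works out which actions, if any, are needed to reach it. The example below is plain Python, not Puppet's language or API; the `converge` function and the package names are hypothetical and only illustrate the principle.

```python
def converge(desired: dict, actual: dict) -> list:
    """Bring `actual` in line with `desired`; return the actions taken."""
    actions = []
    for package, state in desired.items():
        current = actual.get(package, "absent")
        if current == state:
            continue  # already in the desired state, nothing to do
        actions.append(f"{package}: {current} -> {state}")
        if state == "absent":
            actual.pop(package, None)
        else:
            actual[package] = state
    return actions


if __name__ == "__main__":
    desired = {"ntp": "installed", "telnet": "absent"}
    node = {"telnet": "installed"}
    print(converge(desired, node))  # first run applies the changes
    print(converge(desired, node))  # second run finds nothing to do
```

Because the description is a statement of the end state rather than a script of steps, applying it repeatedly is safe, which matters when the same configuration is pushed to thousands of servers.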

The diagram below shows the current system architecture:

CERN's OpenStack environment is already massive, but this is not the limit. In the not too distant future it is planned to grow by at least half, in connection with the upgrade of the collider.

According to the plans, the upgrade will happen in the first quarter of 2015, since at this stage the collider is not powerful enough for physicists to find answers to the fundamental questions of the universe.

P.S. Peace to all elementary particles.

Source: habrahabr.ru/company/ua-hosting/blog/242933/