The heart of the Google empire

Many people know Google for its variety: the popular search engine, the social network, and many other useful and innovative services. But few of us have asked how the company manages to deploy and run them with such high performance and fault tolerance. What makes this possible is Google's data centers. How are they organized, and what are their distinguishing features? That is what this article is about.

Google Inc. has long stopped surprising anyone with the pace of its growth. An active campaigner for "green energy", a holder of many patents in various fields, open and friendly towards users: these are the first associations that many people have at the mention of Google. No less impressive are the company's data centers: a network of facilities located around the world with a total power of 220 MW (as of last year). Given that investment in data centers amounted to 2.5 billion dollars last year alone, it is clear that the company considers this direction strategically important and promising. At the same time there is a certain contradiction: despite the public nature of Google as a corporation, its data centers are a kind of know-how, a secret kept behind seven seals. The company believes that disclosed project details could be used by competitors, so only part of the information about its most advanced solutions reaches the public. Even that information is very interesting.

Let's start with the fact that Google does not build its data centers directly. It has a department that handles design and maintenance, and the company works with local integrators who carry out the implementation. The exact number of data centers built is not disclosed, but in the press the figure varies from 35 to 40 worldwide. Most of them are concentrated in the United States, Western Europe and East Asia. In addition, some equipment is housed in leased space in commercial data centers with good communication channels. It is known that Google has used space in the commercial data centers of Equinix (EQIX) and Savvis (SVVS). Strategically, Google aims to move exclusively to its own data centers; the corporation attributes this to the growing privacy demands of the users who trust it and to the inevitability of information leaks in commercial data centers. Following the latest trends, it was announced this summer that Google will rent out its "cloud" infrastructure to third-party companies and developers under the IaaS model through the Compute Engine service, which provides computing capacity in fixed configurations with hourly billing.

The main strength of the data center network lies not so much in the reliability of any single data center as in geo-clustering. Each data center has many high-capacity links to the outside world and replicates its data to several other data centers distributed around the world. Thus even a force majeure event such as a meteorite strike would not substantially affect the safety of the data.

Geography of the data centers

The first public data center is located in Douglas, United States. This container data center (Fig. 1) was opened in 2005, and it is also the most publicized one. It is essentially a frame structure resembling a hangar, inside which the containers are arranged in two rows: 15 containers in a single tier in one row, and 30 containers in two tiers in the other. The data center houses approximately 45,000 Google servers. The containers are standard 20-foot shipping containers. At the moment 45 containers are in operation, and the power of the IT equipment is 10 MW. Each container has its own connection to the hydraulic cooling circuit and its own power distribution unit, which contains, in addition to circuit breakers, power analyzers for groups of electrical loads, used to calculate the PUE. The pumping stations, chiller cascades, diesel generator sets and transformers are located separately. The declared PUE is 1.25. The cooling system has two loops, and the second loop uses economizers in the form of cooling towers, which significantly reduce the operating time of the chillers. An economizer here is nothing more than an open cooling tower: water that has absorbed heat from the servers is fed to the top of the tower, where it is sprayed and flows down. The heat is transferred from the water to outside air blown in by fans, and part of the water evaporates. Interestingly, purified tap water suitable for drinking was initially used to replenish the external circuit. Google quickly realized that the water does not have to be that clean, so a system was set up that treats waste water from a nearby treatment facility and uses it to top up the external cooling circuit.
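The totals quoted for this site can be broken down into per-container and per-server figures. The sketch below is only back-of-the-envelope arithmetic on the numbers in the text (45 containers, 45,000 servers, 10 MW of IT power, declared PUE of 1.25). It assumes the load is spread evenly and that the declared PUE applies to the full 10 MW, neither of which the source confirms; the function name is made up for illustration.

```python
# Figures quoted in the text above.
CONTAINERS = 45
SERVERS = 45_000
IT_POWER_MW = 10.0
PUE = 1.25


def container_figures(containers, servers, it_power_mw, pue):
    """Derive per-container and per-server figures from the quoted totals.

    Assumes an even spread of servers and power across containers.
    """
    it_power_w = it_power_mw * 1_000_000
    return {
        "servers_per_container": servers / containers,
        "it_kw_per_container": it_power_w / containers / 1000,
        "watts_per_server": it_power_w / servers,
        # PUE = total facility power / IT power, so total = IT * PUE.
        "total_facility_mw": it_power_mw * pue,
        # Everything that is not IT load: cooling, conversion losses, etc.
        "overhead_mw": it_power_mw * (pue - 1),
    }


figures = container_figures(CONTAINERS, SERVERS, IT_POWER_MW, PUE)
for name, value in figures.items():
    print(f"{name}: {value:.1f}")
```

Under these assumptions each container holds about a thousand servers, and a PUE of 1.25 means roughly a quarter of the IT load is spent again on cooling and other overhead.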

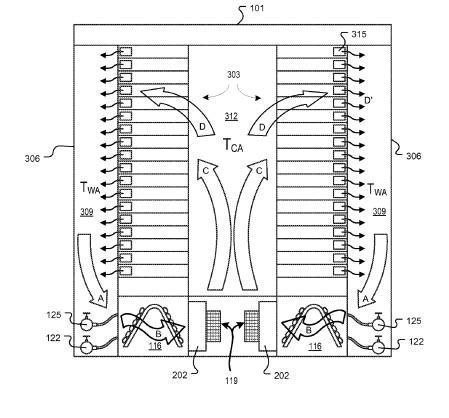

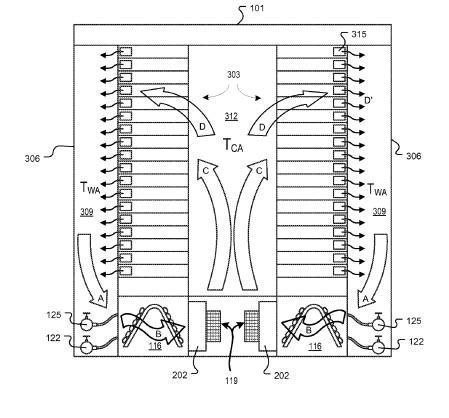

Inside each container, the server racks are arranged around a common "cold" aisle. Under the raised floor along the aisle are a heat exchanger and fans, which blow cooled air through grilles to the server air intakes. Heated air from the rear of the cabinets is drawn under the raised floor, passes through the heat exchanger and is cooled, forming a recirculation loop. The containers are equipped with emergency lighting, EPO buttons, and smoke and temperature sensors for fire safety.

Fig. 1. Container data center in Douglas

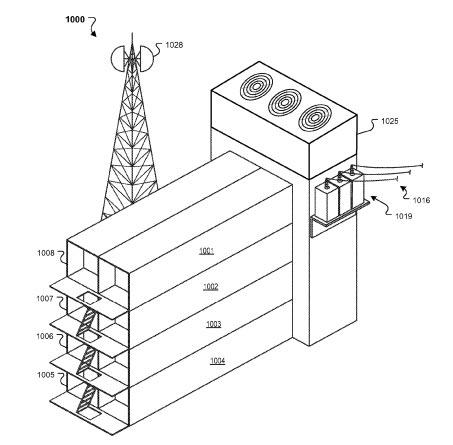

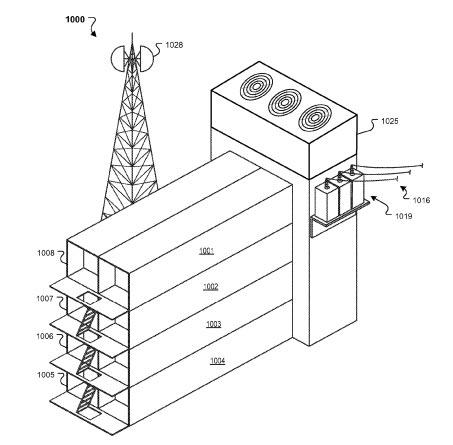

Interestingly, Google later patented the idea of a "container tower": containers are stacked on top of each other, with the entrance arranged on one side and the utilities (power supply, air conditioning and external communication links) on the other (Fig. 3).

Fig. 2. Principle of air cooling inside a container

Fig. 3. The patented "container tower"

A year later, in 2006, a data center opened in The Dalles (USA), on the banks of the Columbia River. It consists of three separate buildings, two of which (6,400 sq. meters each) were erected first (Fig. 4) and house the machine rooms. Next to them stand buildings that house the refrigeration units, each with an area of 1,700 sq. meters. In addition, the complex includes an administrative building (1,800 sq. meters) and a dormitory for temporary staff accommodation (1,500 sq. meters).

The project was previously known under the code name Project 02. The site was not chosen by chance: an aluminum smelter with a capacity of 85 MW used to operate there before it was shut down.

Fig. 4. Construction of the first phase of the data center in The Dalles

In 2007 Google began building a data center in southwest Iowa, in Council Bluffs, near the Missouri River. The concept resembles the previous facility, but there are external differences: the buildings are combined with the refrigeration equipment, and the cooling towers are placed along both sides of the main structure (Fig. 5).

Fig. 5. The data center in Council Bluffs: a typical layout for Google data centers

Apparently this concept was adopted as best practice, since it appears again in later projects. Examples are the following data centers in the United States:

- Lenoir City (North Carolina): building area of 13,000 sq. meters; built in 2007-2008 (Fig. 6);

- Moncks Corner (South Carolina): opened in 2008; consists of two buildings with space reserved between them for a third; has its own high-voltage substation;

- Mayes County (Oklahoma): construction dragged on from 2007 to 2011; the data center was built in two stages, each including a building of 12,000 sq. meters; power is supplied by a wind farm.

Fig. 6. The data center in Lenoir City

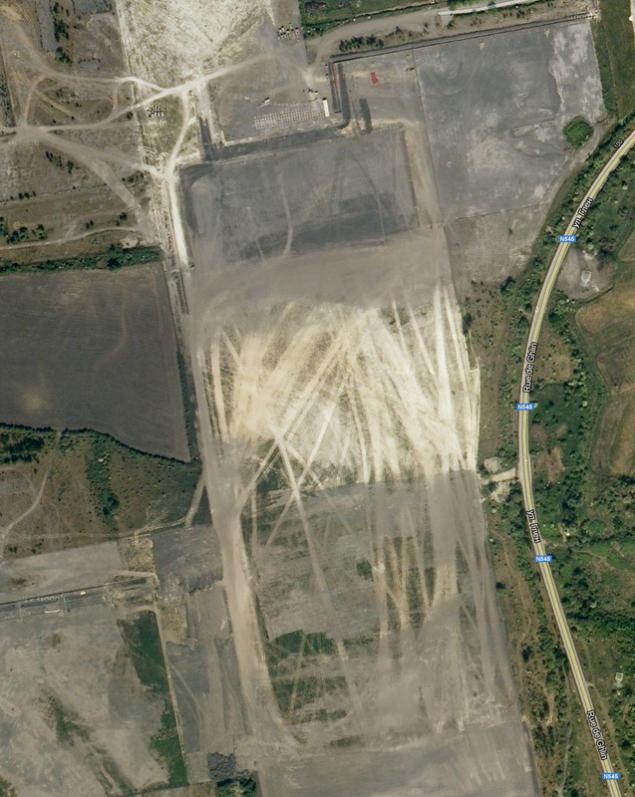

But first place for secrecy belongs to the data center in Saint-Ghislain (Fig. 7a), built in Belgium in 2007-2008. It is larger than the data center in Council Bluffs. It is also notable because when you try to view it on Google's satellite maps, you see an empty patch of open field instead (Fig. 7b). Google states that the data center has no negative impact on the surrounding towns: its cooling towers use treated wastewater. For this purpose a dedicated multi-stage treatment plant was built nearby, and water is supplied to it from an industrial navigable canal.

Fig. 7a. The data center in Saint-Ghislain on Bing maps

Fig. 7b. But on Google it does not exist!

During construction, Google's specialists noted that it would be more logical to take the water for the outer cooling loop from a natural body of water rather than from the water mains. For a future data center the corporation acquired an old paper mill in Hamina, Finland, and converted it into a data center (Fig. 8). The project took 18 months and involved 50 companies; it was successfully completed in 2010. The scale was no accident: the cooling concept developed there allowed Google to make further claims about how environmentally friendly its data centers are. The northern climate, combined with the low freezing point of salt water, makes it possible to provide cooling without any chillers, using only pumps and heat exchangers. The design is a standard double-loop "water-to-water" scheme with an intermediate heat exchanger. Sea water is pumped in, passes through the heat exchangers, cools the data center, and is then discharged into a bay of the Baltic Sea.

Fig. 8. Reconstruction of an old paper mill in Hamina turns it into an energy-efficient data center

In 2011 a data center with an area of 4,000 sq. meters was built in the northern part of Dublin (Ireland). An existing warehouse building was renovated to create it. It is the most modest of the known data centers and was built as a platform for deploying the company's services in Europe. In the same year development of a data center network in Asia began: three data centers are to appear in Hong Kong, Singapore and Taiwan. And this year Google announced the purchase of land for a data center in Chile.

It is noteworthy that for the data center under construction in Taiwan, Google's specialists took a different path and decided to exploit the cheapest, night-rate electricity. At night, water in large tanks is cooled by chiller units; the tanks act as cold accumulators, and the stored cold is used for cooling during the day. Whether a phase transition of the coolant will be used, or whether the company will rely only on tanks of chilled water, is unknown. Perhaps Google will provide this information once the data center is commissioned.
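To get a feel for the size of such cold accumulators, the chilled-water case (no phase transition) can be estimated from the heat balance Q = m * c * dT. The sketch below is a rough illustration only: the 1 MW heat load, the 8-hour discharge window and the 10 K temperature rise are assumed values, not figures published for the Taiwan site, and losses are ignored.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate value for liquid water
WATER_DENSITY = 1000.0        # kg/m^3, approximate


def tank_volume_m3(heat_load_w, hours, delta_t_k):
    """Volume of chilled water needed to absorb heat_load_w for `hours`.

    Uses Q = m * c * dT with no phase change and no thermal losses.
    """
    energy_j = heat_load_w * hours * 3600           # heat to absorb, joules
    mass_kg = energy_j / (WATER_SPECIFIC_HEAT * delta_t_k)
    return mass_kg / WATER_DENSITY


# Hypothetical inputs: 1 MW of heat, 8 daytime hours, water warming by 10 K.
volume = tank_volume_m3(heat_load_w=1_000_000, hours=8, delta_t_k=10)
print(f"{volume:.0f} m^3 of water per MW of heat load")
```

With these assumed inputs the answer comes to several hundred cubic meters of water per megawatt, which helps explain why a phase-change medium, which stores far more energy per unit of volume, would be an attractive alternative.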

At the opposite pole is an even more ambitious project: a floating data center, which Google patented in 2008. The patent states that the IT equipment is located on a floating vessel, cooling is provided by cold sea water, and electricity is produced by floating generators that convert wave motion into electricity. The pilot project is planned to use Pelamis floating generators: 40 such generators, floating over an area of 50x70 meters, would produce up to 30 MW of electricity, enough to run the data center.

By the way, Google regularly publishes the PUE energy-efficiency figures of its data centers, and the measurement method itself is interesting. In the classic Green Grid sense, PUE is the ratio of the total power consumption of the data center to the power consumed by its IT equipment. Google measures PUE for the site as a whole, including not only the data center's supporting systems but also conversion losses in transformer substations and cables, energy consumption in office buildings, and so on: everything inside the perimeter of the site. PUE is calculated as an average over one year. As of 2012, the average PUE across all Google data centers was 1.13.
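The effect of the measurement boundary is easy to see in a small calculation. The sketch below computes PUE as total energy divided by IT energy, once with a narrow boundary (IT plus cooling only) and once with the whole-site boundary described above. All quarterly energy figures are invented for illustration, and the yearly value is taken as an energy-weighted ratio, which is one reasonable reading of "average over one year" rather than a confirmed description of Google's method.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """PUE = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def annual_pue(samples, overhead_keys):
    """Energy-weighted PUE over all samples for a chosen boundary.

    `overhead_keys` lists which non-IT consumers fall inside the boundary.
    """
    it = sum(s["it"] for s in samples)
    overhead = sum(s[key] for s in samples for key in overhead_keys)
    return pue(it + overhead, it)


# Hypothetical quarterly energy use in MWh (not real Google data).
quarters = [
    {"it": 1000, "cooling": 60, "losses": 40, "offices": 10},
    {"it": 1000, "cooling": 110, "losses": 40, "offices": 10},
    {"it": 1000, "cooling": 140, "losses": 40, "offices": 10},
    {"it": 1000, "cooling": 70, "losses": 40, "offices": 10},
]

narrow = annual_pue(quarters, ["cooling"])
whole_site = annual_pue(quarters, ["cooling", "losses", "offices"])
print(f"narrow boundary: {narrow:.3f}, whole site: {whole_site:.3f}")
```

The whole-site figure is always at least as high as the narrow one, so a low PUE reported on the wider boundary is the stricter claim.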

Site selection for data center construction

Clearly, when building data centers this large, Google does not choose their locations by chance. Which criteria do the company's specialists consider first?

1. Sufficiently cheap electricity, the ability to deliver it, and its environmentally clean origin. In line with its environmental policy the company uses renewable energy sources, and since one large Google data center consumes about 50-60 MW, it can be the sole customer of an entire power plant. Moreover, renewable sources make the company independent of energy prices. Hydroelectric plants and wind farms are currently used.

2. A large supply of water that can be used for cooling. This can be either a canal or a natural body of water.

3. Buffer zones between the site and roads or settlements, for building a guarded perimeter and keeping the facility as private as possible. At the same time, the data center needs roads for normal transport access.

4. The plot of land purchased for the data center should allow for further expansion and for the construction of auxiliary buildings or the company's own renewable power sources.

5. Communication channels. There should be several of them, and they should be well protected. This requirement became especially pressing after regular losses of communication at the data center in Oregon (USA). The overhead links ran along power lines, whose insulators became something of a shooting target for local hunters. As a result, during hunting season the data center's connection was constantly cut, and restoring it took a lot of time and effort. In the end the problem was solved by laying the links underground.

6. Tax incentives. A logical requirement, given that the "green technologies" used are significantly more expensive than traditional ones. Accordingly, in payback calculations tax breaks should offset the already high capital costs of the first phase.

Fig. 9. A model of Google's "Spartan" server: nothing superfluous

All servers are installed in 40-inch open two-frame racks, which are placed in rows with a common "cold" aisle. Interestingly, Google does not use special structures to enclose the "cold" aisle in its data centers; it uses hinged, rigid, movable plastic slats instead, arguing that this is a simple and inexpensive solution that makes it possible to quickly add cabinets to existing rows and, if necessary, to fold the slats back over the top of a cabinet.

It is known that, in addition to the hardware, Google uses the Google File System (GFS), a file system designed for large data sets. Its distinguishing feature is that it is a cluster file system: data is divided into 64 MB blocks and stored in at least three places at once, with the ability to locate the replicated copies. If any of the systems fails, the replicated copies are found automatically using specialized software built on the MapReduce model. The model itself implies parallelizing operations and executing tasks on many machines simultaneously. The data inside the system is encrypted. The BigTable system uses distributed storage to hold large volumes of data with fast access, for applications such as web indexing, Google Earth and Google Finance. The basic web applications are Google Web Server (GWS) and Google Front-End (GFE), which use an optimized Apache core. All of these systems are proprietary and customized; Google explains that closed, customized systems are far more resistant to external attacks and have far fewer vulnerabilities.
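The block-and-replica scheme described above can be illustrated with a toy model. The sketch below is not GFS code: it only shows how a file might be cut into 64 MB blocks, how each block could be assigned to three distinct servers, and which copies remain reachable after one server fails. The server names, the round-robin placement rule and all function names are assumptions made for the example.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # 64 MB blocks, as quoted in the text
REPLICAS = 3                   # "at least three places"


def split_into_chunks(file_size, chunk_size=CHUNK_SIZE):
    """Return (offset, length) pairs covering a file of file_size bytes."""
    return [(off, min(chunk_size, file_size - off))
            for off in range(0, file_size, chunk_size)]


def place_replicas(num_chunks, servers, replicas=REPLICAS):
    """Assign each chunk to `replicas` distinct servers, round-robin."""
    if replicas > len(servers):
        raise ValueError("need at least as many servers as replicas")
    return {c: [servers[(c + r) % len(servers)] for r in range(replicas)]
            for c in range(num_chunks)}


def live_replicas(placement, failed):
    """For each chunk, list the replicas that survive the failed servers."""
    return {c: [s for s in locations if s not in failed]
            for c, locations in placement.items()}


servers = ["srv-a", "srv-b", "srv-c", "srv-d", "srv-e"]  # hypothetical names
chunks = split_into_chunks(200 * 1024 * 1024)            # a 200 MB file
placement = place_replicas(len(chunks), servers)
survivors = live_replicas(placement, failed={"srv-b"})

for chunk_id, locations in survivors.items():
    print(chunk_id, locations)
```

Because every block lives on three different machines, losing any single server still leaves at least two readable copies of each block in this model.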

That's all I wanted to say. I apologize for the delay; I got a little tangled up in the post :)

Source:

Source

Actually, Google Inc. has long been no surprise the powerful dynamics of its growth. An active campaigner for "green energy", many patents in various fields, open and friendly towards users - these are the first associations that arise at the mention of Google for many. No less impressive and data center (data center or data center) Company: This is a network of data centers placed throughout the world, the total moschnostyu220 MW (as of last year). Given the fact that investment in data center last year alone amounted to 2, 5 billion dollars, it is clear that the company considers it strategically important direction and promising. At the same time there is a kind of dialectical contradiction, because, in spite of the publicity of the corporation Google, its data centers are the sort of know-how secret, hidden behind seven seals. The company believes that the disclosure of the details of the project can be used by competitors, therefore only part of the information on the most advanced solutions seeping into the masses, but even this information is very interesting.

Let's start with the fact that Google itself is not directly involved in the construction of the data center. He has a department that deals with the development and maintenance, and the company works with local integrators that do the introduction. The exact amount of built data center is not advertised, but press their number varies from 35 to 40 worldwide. The main concentration of data centers accounted for the United States, Western Europe and East Asia. Also, some of the equipment located in leased premises of commercial data center, having good communication channels. It is known that Google used the space to accommodate the equipment of commercial data centers Equinix (EQIX) and Savvis (SVVS). Google aims to strategically shift exclusively to the use of their own data centers - the corporation is attributed to the growing demands of privacy of users who trust us, and inevitably leaks in commercial data centers. Following the latest trends, this summer it was announced that Google will provide rent "cloud" infrastructure to third-party companies and developers an appropriate model IaaS-service Compute Engine, which will provide the computing power of a fixed configuration with an hourly pay-per-use.

The main feature of the network of data centers is not so much in the reliability of a single data center, as in the geo-clustering. Each data center has a lot of high-capacity communication links with the outside world and replicates its data to several other data centers, geographically distributed around the world. Thus, even a force majeure such as meteorite did not substantially affect the safety of the data. Geography data centers

The first public data center is located in Douglas, United States. This container data center (Fig. 1) was opened in 2005. The same data center is the most public. In fact it is a kind of frame structure, which resembles a hangar, inside which are arranged in two rows of containers. In one series - 15 containers arranged in one layer and in the second - 30 containers arranged in two tiers. This data center is approximately 45,000 servers of Google. Containers - 20-foot sea. At the moment, the work put into the containers 45, and the power of IT equipment is 10 MW. Each container has its own connection to the hydraulic circuit cooling a power distribution unit - it has, in addition to circuit breakers, and analyzers has electricity consumption of electric consumers groups to calculate the coefficient PUE. Separately located pumping stations, cascades of chillers, diesel generator sets and transformers. Declared PUE, equal to 1.25. Cooling system - dual, while the second loop with cooling towers used economizers, can significantly reduce the operating time of the unit. In fact it is nothing more than an open cooling tower. The water absorbs heat from the server, is supplied to the top of the tower, where sprayed and flows down. Due to the heat dissipation of the water is transferred to the air, which is blown by fans from outside, and the water itself is partially vaporized. This solution significantly reduces the time of chillers in the data center. Interestingly, initially for the replenishment of water in the external circuit to use purified tap water is suitable for drinking. In Google's quickly realized that the water does not necessarily have to be so clean, so the system was set up, cleaning waste water from the nearby treatment facilities and restocking the water in the external cooling circuit.

Inside each container rack servers are built on the principle of the common "cold" corridor. Along it are the raised floor heat exchanger and fans that blow cool air through the grille air intakes to the servers. Heated air from the rear of the cabinets is taken under the raised floor, it passes through a heat exchanger and cooled, forming a recirculation. Containers are equipped with emergency lighting, buttons EPO, smoke and temperature sensors fire safety.

Fig. 1. Container data center in Douglas

It is interesting that Google later patented the idea of "container tower": the containers are placed on each other, on the one hand organized in, and the other - utilities - electrical system, air conditioning and a supply of external communication channels (Fig. 2).

Fig. 2. The principle of the cooling air in the container

Fig. 3. Patented "container tower»

Open a year later, in 2006, in The Dalles (USA), on the banks of the Columbia River, the data center has consisted of three separate buildings, two of which (each area of 6 400 sq. Meters) have been erected in the first place (Figure .4) - are located in the engine rooms. Near these buildings are buildings that house refrigeration units. The area of each building - 1700 sq. meters. In addition, the complex has an administrative building (1 800 sq. Meters) and a hostel for the temporary accommodation of staff (1 500 sq. Meters).

Previously, the project was known under the code name Project 02. It should be noted that the very place for the data center was not chosen by chance: before there functioned aluminum smelting capacity of 85 MW, which was suspended.

Fig. 4. Construction of the first phase of the data center in Dallas

In 2007, Google began to build a data center in southwest Iowa - in Council Bluffs, near the Missouri River. The concept resembles the previous object, but there are external differences: the building integrated and refrigeration equipment, instead of cooling towers placed along both sides of the main structure (Fig. 5).

Fig. 5. The data center in Council Bluffs - typical concept of building data centers Google.

Apparently, this concept has been taken as the best practices, as it can be seen in the future. Examples of this - the data centers in the United States:

- Lenoir City (North Carolina) -building area of 13 000 sq. meters; Built in 2007-2008. (Fig. 6);

- Moncks Corner (SC) - opened in 2008; It consists of two buildings, between which the reserved area for the construction of the third; having its own high-voltage substations;

- Mayes County (Oklahoma) is the construction dragged on for three years - from 2007 to 2011; Data center was implemented in two stages - each included the construction of a building area of 12 000 sq. meters; data center power supply is provided by a wind power plant.

Fig. 6. The data center in Lenoir City

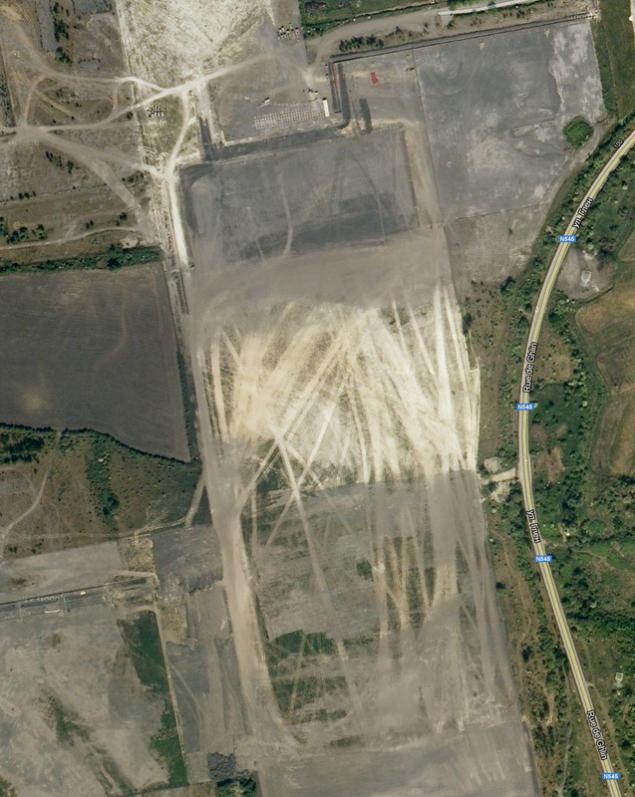

But the primacy of privacy belongs to the data center in Saint-Gislene (Fig. 7a). Built in Belgium in 2007-2008. This data center is larger than the data center in Council Bluffs. In addition, the data center is notable for the fact that when you try to see satellite maps Google, instead you see a blank area in an open field (Fig. 7b). Google says the lack of negative impact on the operation of the data center to the surrounding towns: as water gradiren in the data center using treated wastewater. For this special station was built next to a multi-stage purification, and water is supplied to the station's technical navigable channel.

Fig. 7a. Data center in Saint-Gislene map search engine bing

Fig. 7b. But on Google it does not exist!

Google is in the process of construction noted that water which is used for cooling the outer loop would logically derived from its natural storage and not take away from the aqueduct. Corporation for its future data center acquired in Finland (Hamina), an old paper mill, which was reconstructed by the data center (Fig. 8). The project was implemented in 18 months. Its realization involved 50 companies, and a successful finish of the project took place in 2010. At the same time such a scale was not accidental: indeed developed cooling concept has allowed Google said more about the ecology of their data centers. Northern climate in combination with low freezing point of salt water allowed to provide cooling without any chillers, using only pumps and heat exchangers. It uses the template for a double loop "water - water" and intercooler. Sea water is pumped, it gets on the heat exchanger cools the data center, and then dumped into the bay in the Baltic Sea.

Fig. 8. Reconstruction of an old paper mill in Hamina turns it into an energy-efficient data center

In 2011, in the northern part of Dublin (Ireland) was built data center area of 4000 square meters. meters. To create a data center already existed was renovated warehouse building here. This is the most humble of the famous data center built as a platform for the deployment of services companies in Europe. In the same year began the development of a network of data centers in Asia, three of the data center should be available in Hong Kong, Singapore and Taiwan. And this year, Google announced the purchase of land for the construction of the data center in Chile.

It is noteworthy that in a newly built data center for Google Taiwanese have gone in a different way, determined to take advantage of the economic benefits of nabolee cheaper, night-rate electricity. The water in large tanks cooled by chiller units with tanks, cold accumulators and used for cooling during the day. But if there is a phase transition of coolant used, or the company will focus only on the tank with chilled water - it is unknown. Perhaps, after entering the data center into operation, Google will provide this information.

Interestingly, the polar idea is even more ambitious project -plavuchy corporate data center, which in 2008 patented Google. The patent states that the IT equipment located on a floating vessel, the cooling is carried out with cold sea water, and electricity is produced by floating generators, generating electricity from the motion of waves. For the pilot project will use a floating production generators Pelamius: 40 such generators, floating on an area of 50x70 meters, will allow to produce up to 30 megawatts of electricity, enough to run the data center.

By the way, Google will publish regularly measure PUE energy efficiency of their data centers. And very interesting method of measurement. If in the classic sense of standards Green Grid is the ratio of the power consumption of the data center to its IT capacity, Google measures the PUE of a whole object, including not only the life support system of the data center, but also the conversion loss in the transformer substations, cables, energy consumption in the office buildings and so on. d. - that is, everything that is inside the perimeter of the object. PUE is measured as the average value for a period of one year. As of 2012 the average PUE for all Google data centers was 1.13.

Features of the construction of the data center site selection

Actually, it is clear that by building such enormous data centers, their location selected by Google is not accidental. What are the criteria primarily take into account the company's specialists?

1. Enough cheap electricity, the possibility of its supply and its clean origin. Adhering to the policy of preserving the environment, the company uses renewable energy sources, as one large data center Google consumes about 50-60 MW - enough of being the sole customer throughout the plant. Moreover, renewables are allowed to be independent from energy prices. Currently there are hydroelectric and wind turbine parks.

2. The presence of large amounts of water which can be used for cooling. This can be a channel or a natural body of water.

3. The presence of buffer zones between roads and settlements for building guarded perimeter and maintain maximum privacy of the subject. At the same time it requires for normal routes of transportation to the data center.

4. The area of land to be purchased for the construction of data centers, should allow its further expansion and construction of auxiliary buildings or their own renewable electricity.

5. Communication channels. There should be few, and they should be protected. This requirement has become particularly important after the failure of regular channels of communication problems in the data center located in Oregon (USA). Air link passed along power lines, insulators on which local hunters to become something of a target for shooting competitions. Therefore, in connection with the hunting season data center constantly broke off, and her recovery took a long time and considerable force. In the end, we solved the problem, paving the underground link.

6. Tax incentives. The logical requirement, given that use "green technologies" are significantly more expensive than traditional ones. Accordingly, the calculation of return tax breaks should reduce the already high capital costs for the first phase. Fig. 9. Model "Spartan" server Google - nothing more

All servers are installed in 40-inch, two sash open racks that are placed in series with a common "cold" corridor. Interestingly, the data centers Google does not use special structures to limit the "cold" of the corridor, and uses hinged rigid movable plastic slats, saying that it is a simple and inexpensive solution to quickly install more in the existing rows of cabinets and, if necessary, to curtail existing lamellae over the top of the cabinet.

It is known that, in addition to the hardware, Google uses a file system Google File System (GFS), intended for large data sets. The peculiarity of this system is that it is a cluster: the information is divided into blocks of 64 Mbytes and stored in at least three places at the same time with the possibility of finding the replicated copy. If any of the systems fails, the replicated copies are automatically using specialized software model MapReduce. The model itself implies rasparallelirovanie operations and perform tasks on multiple machines simultaneously. When this information is encrypted inside the system. BigTable system uses distributed storage arrays for storing large amounts of information with fast access to storage, such as web indexing, Google Earth and Google Finance. As a basic web application used Google Web Server (GWS) and Google Front-End (GFE), using an optimized core of Apache. All of these systems are proprietary and customized - Google explains this by the fact that private and customized systems are very stable against external attacks and vulnerabilities are much less.

That's all I wanted to say. I apologize for the delay, a little confused in the post :)

Source: