Dedicated servers under water, literally!? Prospects for breeding fish in servers?!

We all know that water and electronics are a dangerous combination, but is that always the case? Can modern technology change it?

In this article, we consider the advantages and disadvantages of placing servers in liquid and discuss possible operational problems. We show what it can look like in practice and that it really works. We also discuss why fish can or cannot swim in servers :)

For a long time, energy losses and cooling costs in server operation have given many people, including us, no rest: the number of servers used by our subscribers keeps growing rapidly, and we are thinking more and more about building our own data center in the foreseeable future. And when more than half of all the energy a data center consumes goes into air cooling, which cannot bring the efficiency ratio (PUE) of removing waste heat from the equipment below about 1.7, you cannot help asking yourself how to improve cooling efficiency and minimize energy losses.

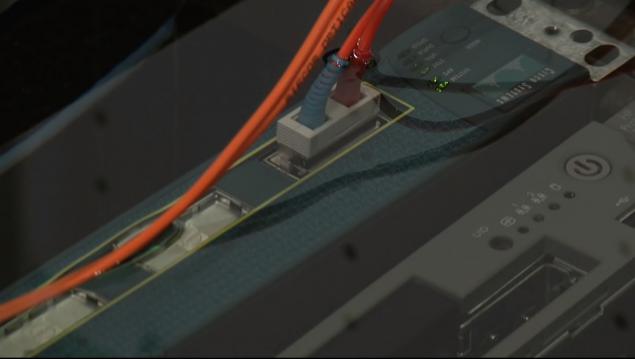

Physics tells us, of course, that air is not a very efficient conductor of heat: its thermal conductivity is about 25 times lower than that of water. It is better suited to thermal insulation than to heat removal. It also has a very low heat capacity, which means it has to be constantly and intensively circulated, and large volumes of it must be delivered for cooling. Water and other liquids are another matter. Data centers already use them as heat-transfer media in their cooling systems to raise overall efficiency, but the liquid never touches the server directly, only through an air gap and/or a heat sink (for example, for cooling the chipset). This improves the mechanical PUE (mPUE) of the cooling system to 1.2, or even 1.15 when ambient outside air is used for cooling.
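To put the "25 times" figure in perspective, here is a minimal sketch applying Fourier's law of steady-state conduction, q = k·ΔT/d, to a thin layer of air and of water. The conductivities are typical textbook values near room temperature (assumptions, not measurements), and the 20 K difference across a 1 mm layer is an arbitrary illustrative choice.

```python
# Steady-state conduction through a flat layer (Fourier's law): q = k * dT / d.
# Conductivities are typical room-temperature textbook values, assumed for illustration.
K_AIR = 0.026   # W/(m*K)
K_WATER = 0.6   # W/(m*K)


def heat_flux(k: float, delta_t: float, thickness: float) -> float:
    """Heat flux in W/m^2 through a layer with conductivity k (W/(m*K)),
    temperature difference delta_t (K) and thickness (m)."""
    return k * delta_t / thickness


if __name__ == "__main__":
    dt, d = 20.0, 0.001  # 20 K across a 1 mm layer (illustrative choice)
    q_air = heat_flux(K_AIR, dt, d)
    q_water = heat_flux(K_WATER, dt, d)
    print(f"air:   {q_air:.0f} W/m^2")
    print(f"water: {q_water:.0f} W/m^2")
    print(f"ratio: {q_water / q_air:.1f}x")
```

With these values water carries about 23 times more heat than air through the same layer, in line with the rough factor of 25 quoted above.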

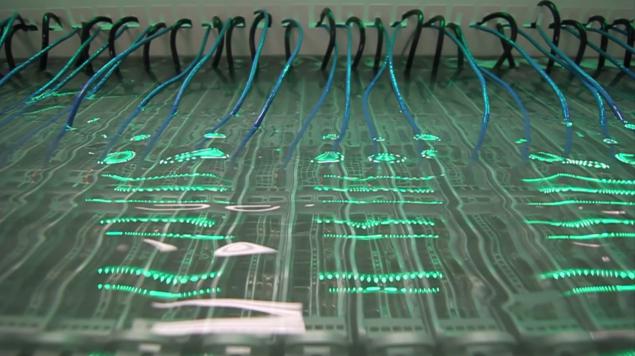

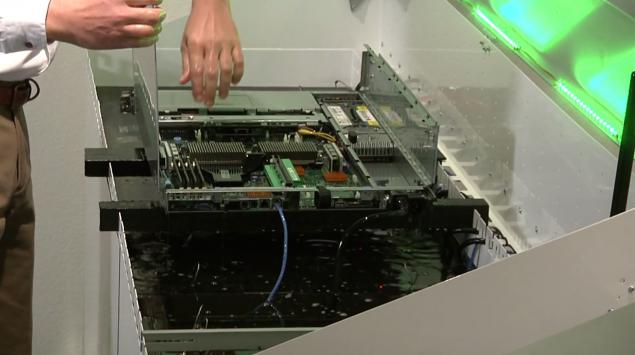

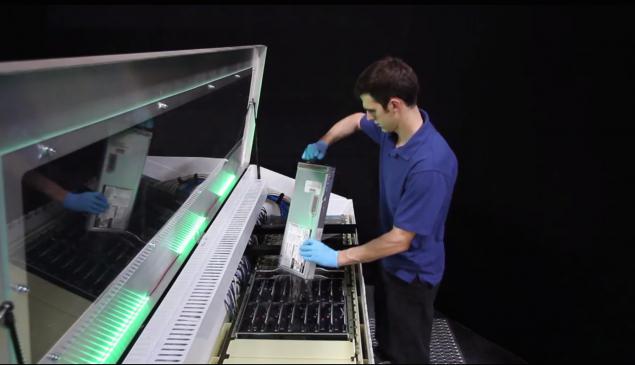

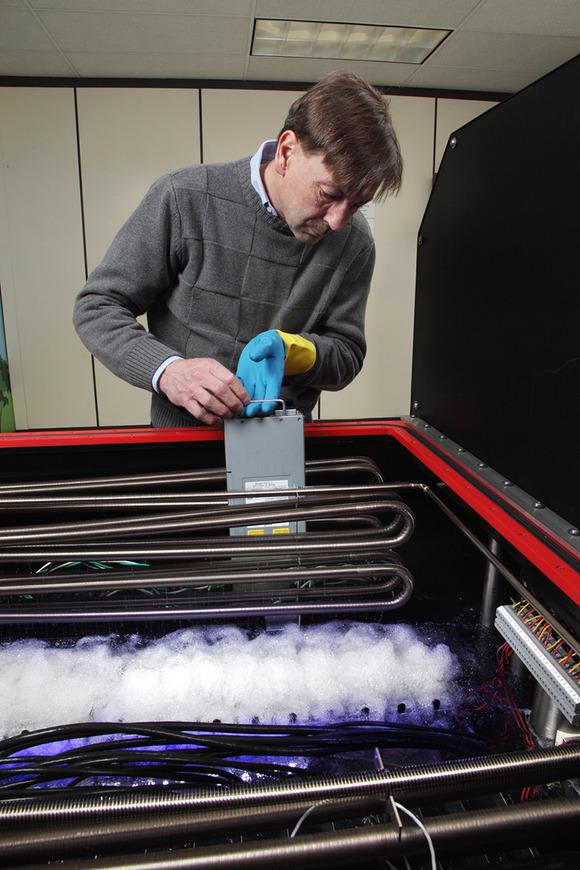

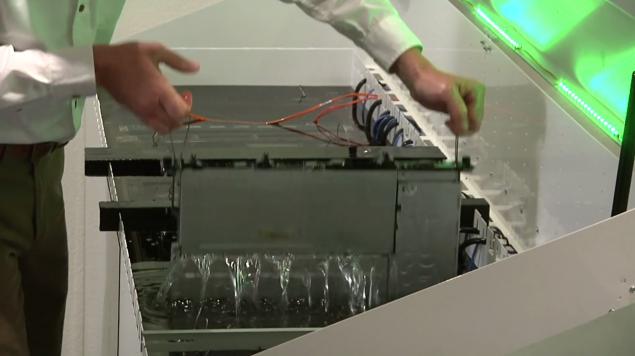

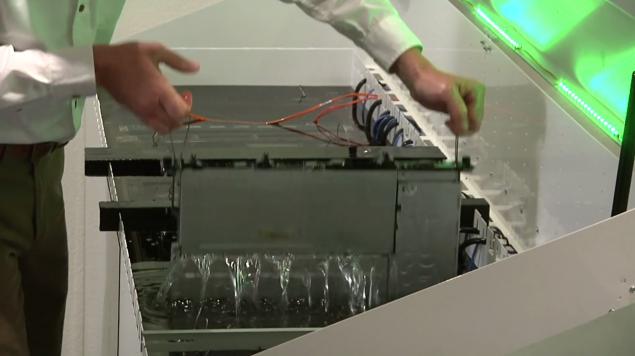

But how can a server be cooled most effectively? There is only one way: immerse it completely in a liquid (a dielectric, of course), preferably one with higher thermal conductivity and heat capacity that will not harm the server's components. Mineral oil can serve as such a dielectric. The idea, for better or worse, is not new: several companies have been developing and deploying it for a few years in various forms and with varying efficiency. Modern technology makes it possible to build a "submarine" data center! But what are the advantages and disadvantages of this solution?

#### Advantages and disadvantages of placing servers in liquid

Liquid cooling today saves up to 95 percent of the electricity typically used for cooling in a data center and, as a consequence, 50% of all the energy the data center consumes.
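The two percentages in this claim are linked by simple arithmetic. The sketch below uses a deliberately simple proportional model (an assumption: it ignores any other effects of removing air cooling) to show what share of total consumption cooling must account for if a 95% cut in cooling energy is to save 50% of everything the data center uses.

```python
def total_savings(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total data-center energy saved when cooling, which makes up
    `cooling_share` of the total, is cut by `cooling_reduction` (simple proportional model)."""
    return cooling_share * cooling_reduction


def required_cooling_share(target_total: float, cooling_reduction: float) -> float:
    """Cooling share of total energy needed for the cut to yield `target_total` savings."""
    return target_total / cooling_reduction


if __name__ == "__main__":
    share = required_cooling_share(0.50, 0.95)  # 95% cut in cooling energy, as quoted
    print(f"cooling must be ~{share:.1%} of total consumption")
    print(f"check: {total_savings(share, 0.95):.0%} of total saved")
```

The result, roughly 53%, is consistent with the observation that more than half of a data center's energy goes into cooling.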

#### The success of various companies in cooling servers in liquid

Mineral oil effectively protects the equipment against corrosion and dust because, unlike air, it contains no water or oxygen, which extends the equipment's service life. It is non-toxic and odorless, and it does not evaporate. Results vary, however, and selecting the right mineral oil is a real art.

#### Outlook

On today's motherboards, components are laid out at "huge" distances from one another to maximize heat dissipation with air cooling, and air is not a terribly efficient coolant. Liquid cooling could allow production servers with much more densely packed circuits, designed to run in liquid and to take advantage of its heat-removal properties, since a liquid has not only a higher thermal conductivity than air but also a much higher heat capacity. Per unit volume, the most effective mineral oils available today have a heat capacity more than 1,200 times that of air!
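The 1,200-fold figure holds per unit volume: per kilogram, mineral oil stores less than twice as much heat as air. The sketch below makes that distinction explicit and shows what it means in practice, using the energy balance P = ρ·c_p·V̇·ΔT to compute the coolant flow needed to carry away 1 kW with a 10 K temperature rise. The property values are assumed typical figures, not data for any specific oil; with them the volumetric ratio comes out at roughly 1,200, and denser oils with a higher specific heat push it above that.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density: float  # kg/m^3
    cp: float       # specific heat, J/(kg*K)

    @property
    def volumetric_cp(self) -> float:
        """rho * cp in J/(m^3*K): heat absorbed by 1 m^3 per kelvin of warming."""
        return self.density * self.cp


def volume_flow(power_w: float, coolant: Coolant, delta_t: float) -> float:
    """Volume flow (m^3/s) that removes power_w watts while the coolant warms
    by delta_t kelvin, from the energy balance P = rho * cp * V_dot * dT."""
    return power_w / (coolant.volumetric_cp * delta_t)


# Assumed typical values near room temperature; real mineral oils vary.
AIR = Coolant("air", 1.2, 1005.0)
OIL = Coolant("mineral oil", 850.0, 1670.0)

if __name__ == "__main__":
    print(f"specific heat ratio (per kg): {OIL.cp / AIR.cp:.1f}x")
    print(f"volumetric heat capacity ratio: {OIL.volumetric_cp / AIR.volumetric_cp:.0f}x")
    for c in (AIR, OIL):
        # 1 kW of heat, coolant allowed to warm by 10 K
        print(f"{c.name}: {volume_flow(1000.0, c, 10.0) * 1000:.3f} L/s")
```

For the same 1 kW, air needs a flow of roughly 83 L/s while the oil needs well under a tenth of a liter per second, which is why fans and large air ducts become unnecessary.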

##### Savings of more than 60% on construction:

- there is no need to buy expensive chillers or HVAC (heating, ventilation and air conditioning) systems.

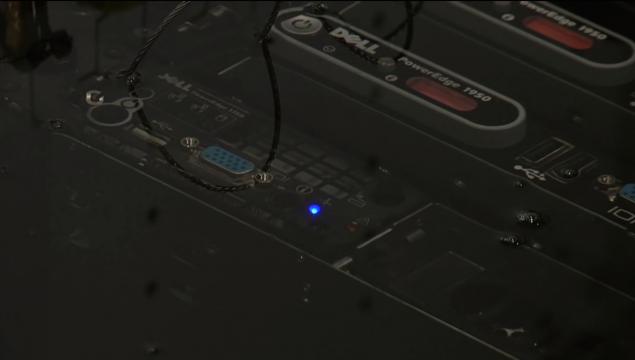

##### Savings of over 50% on operation:

- equipment immersed in the fluid consumes 10-20% less energy, depending on the type of cooler, because the chips suffer no leakage-current losses: they sit in the dielectric, which keeps their temperature constant.
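To give the 10-20% figure a sense of scale, here is a minimal sketch that converts it into annual energy per server. The 300 W average draw is a hypothetical value chosen only for illustration, and the result covers the server's own consumption, not the cooling savings discussed above.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def annual_kwh(power_w: float) -> float:
    """Energy in kWh drawn by a constant load over one year."""
    return power_w * HOURS_PER_YEAR / 1000.0


def annual_savings_kwh(power_w: float, reduction: float) -> float:
    """kWh saved per year if the load drops by `reduction` (e.g. 0.1 for 10%)."""
    return annual_kwh(power_w) * reduction


if __name__ == "__main__":
    server_w = 300.0  # hypothetical average draw of one air-cooled server
    for r in (0.10, 0.20):
        print(f"{r:.0%} less: {annual_savings_kwh(server_w, r):.0f} kWh/year per server")
```

Multiplied by the number of servers in a rack or a hall, even the lower bound adds up quickly.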

#### From "underwater" servers to PCs: liquid cooling, or how to build a workstation in fluid at home

Of course, this idea has not found, and will not find, wide use in the PC market, simply because most users have long since moved to laptops and other gadgets. Home workstations in tower cases are mostly used by professionals, who need more performance and have to dissipate large amounts of heat. For them, immersing their precious hardware in liquid can be very useful!

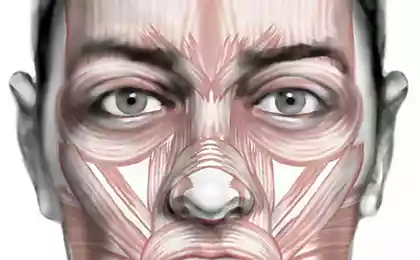

#### I want fish swimming in my servers! Throw the fish in!

I must admit that seeing all kinds of fish under water is an unforgettable experience. I am a diver, and I would not turn down a home workstation in the form of an aquarium with tropical, or even not-so-tropical, fish.
