A program has been created to recognize emotions

A person's emotional state can be determined from the way they type on a computer keyboard.

Our emotions are easiest to identify by facial expression and voice. However, there are as many faces and voices in the world as there are people, so how can we be sure that a given expression on different people's faces conveys the same thing: fear, for example, or joy, or sadness?

For us, in fact, this is no problem: our mind easily copes with the variety of features in other people's appearance and readily picks out the shared emotional components of facial expressions. The same thing happens with the voice. However, if we set out to teach a robot to recognize emotions, we need clear criteria by which a machine could distinguish fear from joy and joy from sadness, at any intensity, in any shade, and in any person.

Researchers from the Islamic University of Technology in Bangladesh chose an original way to solve this problem: they focused not on facial expressions but on fingers. To recognize emotions, they looked at how a person types on the keyboard. In the first part of the experiment, 25 volunteers aged 15 to 40 retyped a passage from "Alice in Wonderland" by Lewis Carroll while noting their emotional state: joy, fear, anger, sadness, shame, and so on. If they felt no particular emotion, they could choose tiredness or a neutral state. (Of course, the emotions did not have to be related to the text in any way; a person could be typing under the influence of their own thoughts and feelings.)

In the second part of the experiment, the volunteers typed something of their own, but every half hour they were reminded of a particular emotion (sadness, shame, fear, joy, and so on); they had to enter that emotional state and remain in it while typing. Meanwhile, a special program collected information about how the users pressed the keys on the keyboard.

In an article in Behavior & Information Technology, the authors write that they were able to identify 19 key parameters by which a typist's emotional state can be judged. Among them were, for example, typing speed over a five-second interval and the time during which a key remains pressed. The parameter values measured on the free text were compared with reference values for specific emotions and words, which had been obtained using the Carroll text. In this way, the researchers assure us, seven different emotions can be identified with a high degree of confidence. The most reliable results were for joy (correct in 87% of cases) and anger (correct in 81% of cases).
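
To make the two parameters named above more concrete, here is a minimal Python sketch of how key hold time and typing speed per five-second interval might be computed from logged key events, followed by one simple way of comparing a measurement with reference values. It is an illustration under stated assumptions, not the researchers' program: the `KeyEvent` record, the function names, the synthetic key log, and the use of plain Euclidean distance are all hypothetical, and the article does not say how the comparison was actually carried out.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """One logged keystroke (hypothetical record layout)."""
    key: str
    press: float    # seconds since the start of the session
    release: float  # seconds since the start of the session


def mean_hold_time(events):
    """Average time, in seconds, that a key remains pressed."""
    return mean(e.release - e.press for e in events)


def keys_per_window(events, window=5.0):
    """Count key presses in each consecutive `window`-second interval."""
    if not events:
        return []
    start = min(e.press for e in events)
    end = max(e.press for e in events)
    counts = [0] * (int((end - start) // window) + 1)
    for e in events:
        counts[int((e.press - start) // window)] += 1
    return counts


def nearest_profile(features, profiles):
    """Return the label whose reference vector is closest to `features`.

    Assumption: plain Euclidean distance over unscaled features; a real
    system would at least normalize the features first.
    """
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

    return min(profiles, key=lambda label: distance(features, profiles[label]))


# Synthetic key log, invented purely for illustration.
log = [
    KeyEvent("a", 0.0, 0.1),
    KeyEvent("b", 1.0, 1.2),
    KeyEvent("c", 6.0, 6.3),
]
print(mean_hold_time(log))   # roughly 0.2 seconds
print(keys_per_window(log))  # [2, 1]
```

In such a scheme, `nearest_profile` would be given a vector of measured parameters and a dictionary of per-emotion reference vectors built from the fixed-text session; no reference values are shown here because the article does not report any.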

Compared with emotion detectors that work with facial expressions and voice intonation, the "typing" method has one notable drawback: whereas emotion shows itself in the face and voice on its own, here the person has to do something, namely type. If they are not typing, their emotions cannot be determined. So, obviously, this method needs to work in conjunction with facial and voice detectors. However, it can also be useful on its own. Imagine, for instance, online psychological counseling: in that situation the psychologist could learn about a patient's emotional state solely from the way they type, and at the same time compare how the person's emotions correspond to the content of the messages they type.

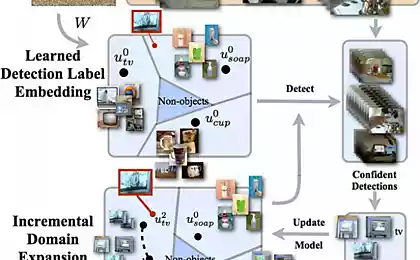