Artificial intelligence has learned to describe what it sees in human language

First, computers learned to recognize our faces in photos, and now they can accurately describe what a person is doing in a particular image.

Last month, Google engineers showed the public Deep Dream, a neural network that can transform pictures into fantastic abstract visions. Now scientists from Stanford have presented NeuralTalk, a project that describes what it sees in human language.

NeuralTalk was first mentioned last year. Development of the system is led by Fei-Fei Li, director of the Artificial Intelligence Lab at Stanford University, and her graduate student Andrej Karpathy. The software written within the project can analyze a complex image, determine what is happening in it, and describe everything it sees in human language.

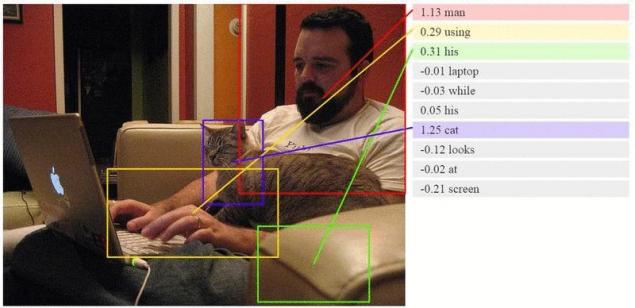

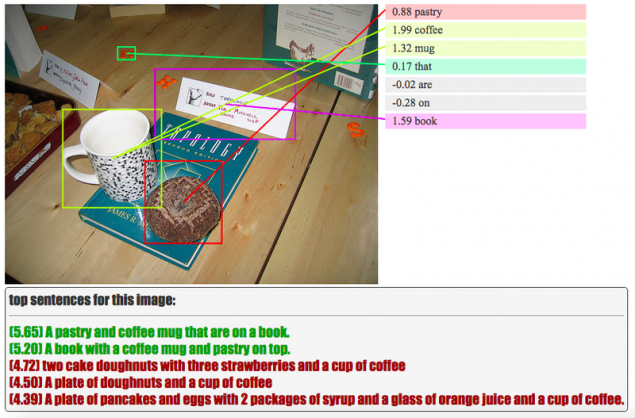

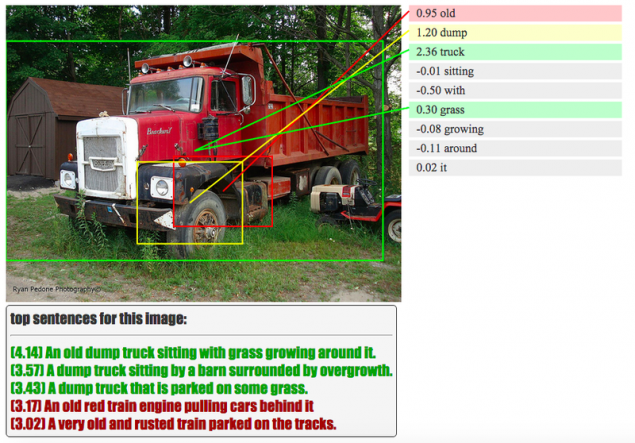

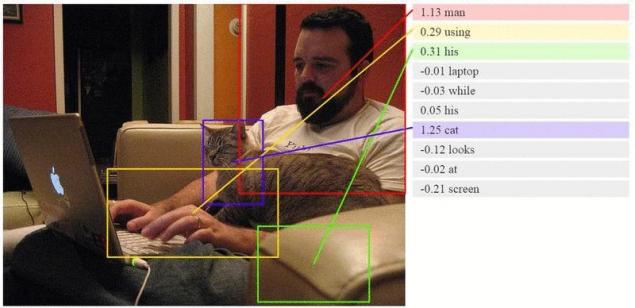

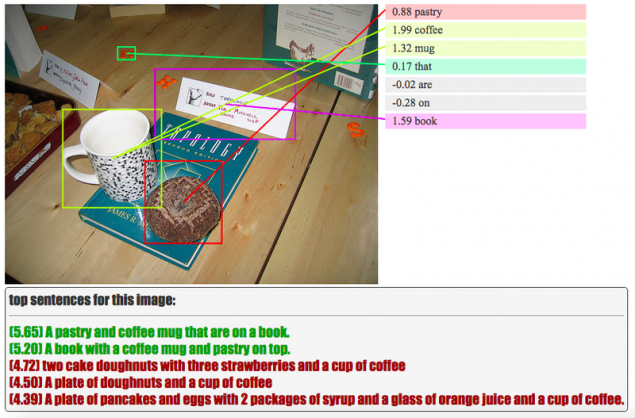

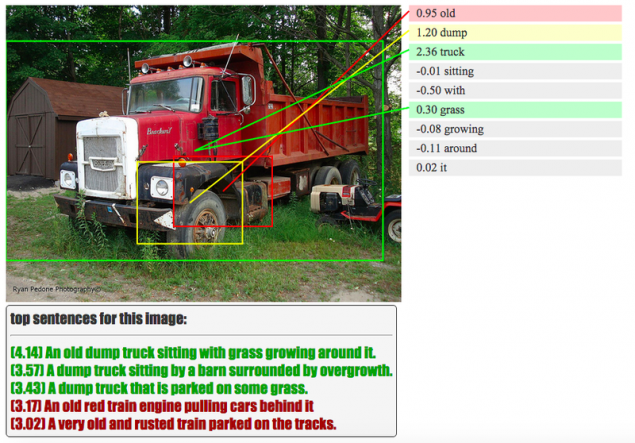

For example, if a photo shows a man in a black shirt playing the guitar, the AI describes it as "a man in a black shirt plays the guitar". The work is still in progress, so the algorithm quite often makes amusing mistakes, but that is unavoidable in modern research. The screenshots show what the interface of the algorithm's test version looks like. The AI finds separate objects, events, or actions in the image, assigns each of them individual words, and finally assembles those words into a meaningful sentence.
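
To make the "objects to words to sentence" pipeline more concrete, here is a minimal toy sketch in Python. It assumes, hypothetically, that each detected image region and each vocabulary word is represented as a vector in a shared space, that the best word for a region is the one with the highest dot-product score, and that a fixed sentence template stands in for the language model NeuralTalk actually learns. All vectors, region names, and the template are made up for illustration; this is not NeuralTalk's code.

```python
from typing import Dict, List

# Hypothetical region vectors (in a real system these would come from a trained network).
REGIONS: Dict[str, List[float]] = {
    "region_person": [0.9, 0.1, 0.0],
    "region_instrument": [0.1, 0.9, 0.1],
    "region_clothing": [0.0, 0.2, 0.9],
}

# Hypothetical word vectors living in the same 3-dimensional space as the regions.
WORDS: Dict[str, List[float]] = {
    "man": [1.0, 0.0, 0.1],
    "guitar": [0.0, 1.0, 0.0],
    "shirt": [0.1, 0.1, 1.0],
}


def dot(a: List[float], b: List[float]) -> float:
    """Similarity score between a region vector and a word vector."""
    return sum(x * y for x, y in zip(a, b))


def best_word(region_vec: List[float]) -> str:
    """Pick the vocabulary word whose vector scores highest against the region."""
    return max(WORDS, key=lambda w: dot(region_vec, WORDS[w]))


def describe(regions: Dict[str, List[float]]) -> str:
    """Label each region, then fill a fixed template (a stand-in for a learned sentence generator)."""
    labels = {name: best_word(vec) for name, vec in regions.items()}
    return (
        f"a {labels['region_person']} in a {labels['region_clothing']} "
        f"plays the {labels['region_instrument']}"
    )


print(describe(REGIONS))  # a man in a shirt plays the guitar
```

The hard-coded template is the weakest part of the toy: a real captioning system has to learn word order and grammar from example sentences, which is exactly where the amusing mistakes tend to appear.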

Objects are highlighted in different colors depending on how confident the AI is that it has recognized them correctly. You can watch the AI's learning progress on the researchers' official website.

Like Google's Deep Dream, NeuralTalk uses a neural network. The algorithm compares a new image with photos it has seen before, much like a small child who learns new words and remembers what objects look like. Scientists repeatedly show the AI what a cat, a burger, or boots look like; NeuralTalk remembers all this and later identifies these objects almost without error.
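
The "compare with photos seen before" analogy can be illustrated with a 1-nearest-neighbour classifier: the features of a new image are compared with stored, labeled examples, and the label of the closest one is returned. This is only a sketch of the analogy under stated assumptions. The two-dimensional feature vectors and the `SEEN` examples are hypothetical, and a trained neural network such as NeuralTalk keeps what it learns in its weights rather than in a gallery of stored photos.

```python
import math
from typing import List, Tuple

# Hypothetical "memory" of labeled examples: (feature vector, label).
# Real features would be produced by a trained network; these numbers are made up.
SEEN: List[Tuple[List[float], str]] = [
    ([0.9, 0.1], "cat"),
    ([0.8, 0.2], "cat"),
    ([0.1, 0.9], "burger"),
    ([0.5, 0.5], "boots"),
]


def classify(features: List[float]) -> str:
    """Return the label of the closest previously seen example (Euclidean distance)."""
    _, label = min(SEEN, key=lambda item: math.dist(features, item[0]))
    return label


print(classify([0.7, 0.3]))  # cat
print(classify([0.2, 0.8]))  # burger
```

Adding more labeled examples to `SEEN` is the toy equivalent of the repeated showing described above: the more examples stored, the more likely a new image lands near one with the right label.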

The developers face the difficult and very tedious job of feeding knowledge to the hungry AI. They must attach millions of name tags to the various objects shown in thousands of images before the AI learns to describe the pictures and situations it is shown. As a first step, the scientists hope to build a search engine that will be able to find interesting pictures across the vast expanses of the Internet.
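
Once images have text descriptions, one simple way to search them is an inverted index that maps each word to the images whose captions contain it. The sketch below assumes the captions have already been generated by a model; the file names and captions are hypothetical, and nothing in the article says the researchers' planned search engine works this way.

```python
from collections import defaultdict
from typing import Dict, List, Set


def build_index(captions: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each lowercase caption word to the set of image ids whose caption contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for image_id, caption in captions.items():
        for word in caption.lower().split():
            index[word].add(image_id)
    return index


def search(index: Dict[str, Set[str]], query: str) -> List[str]:
    """Return the sorted ids of images whose captions contain every word of the query."""
    words = query.lower().split()
    if not words:
        return []
    # .get avoids inserting empty entries into the defaultdict for unknown words.
    hits = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(hits)


# Hypothetical captions, as a captioning model might produce them.
CAPTIONS = {
    "img1.jpg": "a man in a black shirt plays the guitar",
    "img2.jpg": "a cat sits next to a burger",
    "img3.jpg": "a man rides a bike",
}

index = build_index(CAPTIONS)
print(search(index, "man guitar"))  # ['img1.jpg']
```

Requiring every query word to match (set intersection) keeps results precise; a production search engine would also rank results and tolerate synonyms, which this sketch does not attempt.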

In the future, such a neural network could potentially do much more. For example, the algorithm could find not only photos but also the exact moment you are interested in within a film, a TV series, or a YouTube video.

Source: hi-news.ru
