Kevin Abosch: The ethical framework for artificial intelligence

The idea that artificial intelligence will inevitably lead to machines rebelling against humans is quite popular. Artificial superintelligence seems to be the greatest possible threat, and science-fiction plots in which we are no longer needed in a world owned by technology have never lost their appeal.

Is that inevitable? The literary and cinematic depiction of intelligent computer systems from the '60s helped shape and generalize our expectations of the future as we embark on a path to creating machine intelligence superior to human intelligence. AI has obviously already outperformed humans in certain specific tasks requiring complex computations, but it still lags behind in a number of other capabilities. How can we increase the power of this harsh instrument while maintaining our economic position over it?

Artificial intelligence already plays, and will continue to play, a big role in our future, so it is critical to explore how we can coexist with these complex technologies.

Kevin Abosch, the founder of Kwikdesk, which is engaged in data processing and the development of artificial intelligence, shared his thoughts on this matter. He believes that artificial intelligence should be fast, invisible, reliable, competent and ethical. Yes, ethical.

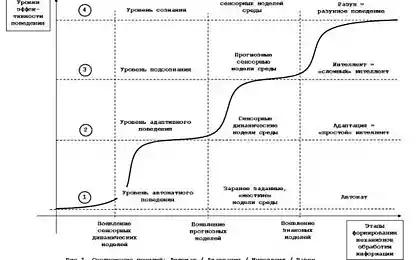

The concept of an artificial neural network, modeled on a biological neural network, is nothing new. Units of computation, called neurons, connect to each other and form a network. Each neuron applies a sophisticated learning algorithm to its input before passing the data to other neurons, until finally an output neuron is activated and can be read. Expert systems, by contrast, rely on people to “teach” the system by seeding it with knowledge. Logical inference mechanisms look for matches, make choices, and apply “if this, then that” rules to the knowledge base. In this process, new knowledge is added to the knowledge base. A pure neural network learns from nonlinear experience and does not depend on an expert to seed its knowledge. Hybrid networks have been shown to improve machine learning capabilities.
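The rule-based half of that description can be made concrete with a small sketch. It assumes a knowledge base that is simply a set of facts and rules written as (conditions, conclusion) pairs; the function name `forward_chain` and the example facts are illustrative and do not come from Kwikdesk.

```python
def forward_chain(facts, rules):
    """Apply "if this, then that" rules until no new facts can be derived.

    facts: iterable of strings seeded by a human expert.
    rules: list of (conditions, conclusion) pairs; a rule fires when
           every condition is already in the knowledge base.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)  # new knowledge joins the knowledge base
                changed = True
    return known


# Hypothetical seed knowledge and rules, for illustration only.
rules = [
    (("has_feathers",), "is_bird"),
    (("is_bird", "cannot_fly", "swims"), "is_penguin"),
]
seed_facts = {"has_feathers", "cannot_fly", "swims"}
derived = forward_chain(seed_facts, rules)
```

Here the second rule can fire only after the first has added `is_bird`, which is the sense in which matching rules adds new knowledge to the knowledge base.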

Now let’s look at the ethical issues of these systems, in the first person.

An author uses words to immerse the reader in a fictional world, and there are many ways to do it, but great authors do it very gracefully. A software engineer writes lines of code that facilitate the processing and movement of data. The engineer, too, can choose among many different approaches, but elegant coders are the great authors of computer science. An advanced coder focuses on how to fit as much as possible, as well as possible, into short and graceful code. Superfluous code is kept to a minimum. Great code also leaves a window open for later additions. Other engineers can add code with their own inherent elegance, and the product develops seamlessly.

At the heart of any man-made product is intent. Things made by people are imbued with intentions and, to one degree or another, carry the very nature of their creator. Some people find it difficult to imagine an inanimate object with such a nature, but many would agree. The energy of intention has existed for thousands of years; it unifies, divides and transforms society. The power of language should not be underestimated either. Remember that lines of code are written in a specific programming language. As such, I am convinced that code that becomes software used on computers or mobile devices is very much "alive."

Even without considering wisdom and spirituality in the context of computer science and the potential consequences of artificial intelligence, we can still see static code as something with the potential to do good or evil. These outcomes reveal themselves only when people use the applications. It is the clear choices people make that affect the nature of an app. Those choices can be considered within a local system, by determining their positive or negative impact on that system, or judged against a set of predetermined standards. However, just as a journalist cannot be 100% impartial when writing an article, so an engineer willfully or unwittingly adds the nature of his intentions to the code. Some might argue that writing code is a logical process, and real logic leaves no room for nature.

But I'm sure that the moment you create a rule, a block of code, or an entire program, it is imbued with an element of human nature. With each additional rule, that imprint deepens. The more complex the code, the more of this nature there is. This raises the question, “Can the nature of code be good or evil?”

Obviously, a virus developed by a hacker that maliciously breaks through your computer’s defenses and wreaks havoc on your life is imbued with an evil nature. But what about a virus created by the “good guys” to infiltrate a terrorist organization’s computers and prevent terrorist attacks? What is its nature? Technically, it can be identical to its nefarious counterpart, just used for “good” purposes. So is its nature good? This is the ethical paradox of malware. But we cannot ignore “evil” code.

In my opinion, there is code that is inherently biased toward “evil,” and there is code that is inherently benevolent. This matters even more in the context of computers that operate autonomously.

At Kwikdesk, we are developing an AI framework and protocol based on my design of an expert system/hybrid neural network that resembles the biological model more closely than anything created to date. Neurons appear as input/output modules and virtual devices (in a sense, autonomous agents) connected by “axons,” which are secure, separated channels of encrypted data. This data is decrypted as it enters a neuron and, after certain processes, is encrypted again before being sent to the next neuron. Before neurons can communicate with each other through an axon, they must exchange participant and channel keys.
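As a rough illustration of that flow (key exchange first, then decrypt on entry, process, and re-encrypt on exit), here is a minimal sketch. Everything in it is an assumption made for illustration: the names `Neuron`, `open_axon`, `send`, `relay` and `read` are hypothetical, the handshake is a stand-in for a real key-agreement protocol, and the cipher is a toy built from the standard library that should not be used to protect real data. It is not Kwikdesk's protocol.

```python
import hashlib
import hmac
import secrets

NONCE_LEN, TAG_LEN = 16, 32


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Toy keystream (SHA-256 in counter mode). Illustration only, not vetted crypto.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(NONCE_LEN)
    stream = _keystream(key, nonce, len(plaintext))
    body = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(key, nonce + body, hashlib.sha256).digest()  # integrity check
    return nonce + tag + body


def decrypt(key: bytes, message: bytes) -> bytes:
    nonce = message[:NONCE_LEN]
    tag = message[NONCE_LEN:NONCE_LEN + TAG_LEN]
    body = message[NONCE_LEN + TAG_LEN:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message failed authentication")
    stream = _keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


class Neuron:
    """Hypothetical input/output module that only handles plaintext internally."""

    def __init__(self, name, process):
        self.name = name
        self.process = process                          # the neuron's own computation
        self.participant_key = secrets.token_bytes(32)  # assumed per-neuron secret
        self.channel_keys = {}                          # peer name -> axon key

    def send(self, receiver, data: bytes) -> bytes:
        return encrypt(self.channel_keys[receiver.name], data)

    def read(self, sender, message: bytes) -> bytes:
        return decrypt(self.channel_keys[sender.name], message)

    def relay(self, sender, message: bytes, receiver) -> bytes:
        # Decrypt on entry, process, then encrypt for the next axon.
        return self.send(receiver, self.process(self.read(sender, message)))


def open_axon(a: Neuron, b: Neuron) -> None:
    # Stand-in for the key exchange: mixes both participant keys with a fresh
    # channel secret. A real system would use a proper key-agreement protocol.
    channel_secret = secrets.token_bytes(32)
    key = hashlib.sha256(a.participant_key + b.participant_key + channel_secret).digest()
    a.channel_keys[b.name] = key
    b.channel_keys[a.name] = key


sensor = Neuron("sensor", lambda d: d)
hidden = Neuron("hidden", bytes.upper)
output = Neuron("output", lambda d: d)
open_axon(sensor, hidden)
open_axon(hidden, output)

wire1 = sensor.send(hidden, b"signal")
wire2 = hidden.relay(sensor, wire1, output)
result = output.read(hidden, wire2)  # b"SIGNAL"
```

Each axon has its own key, so a neuron that has not completed the exchange with a peer has no key for that channel and cannot read or write on it.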

I believe that security and separation should be built into such networks from the lowest level. Superstructures reflect the qualities of their smallest components, so anything less than secure building blocks will make the entire chain operate unsafely. For this reason, data must be protected locally and decrypted only when it is handed over locally.

The quality of our lives together with machines that are getting smarter and smarter is understandably worrying, and I am absolutely confident that we must take action to ensure a healthy future for generations to come. The threats of smart machines are potentially diverse, but can be broken down into the following categories:

Employment. In the workplace, people will be replaced by machines. This shift has been happening for decades and will only accelerate. Education is needed to prepare people for a future in which hundreds of millions of traditional jobs will simply cease to exist. It's complicated.

Security. We rely entirely on machines and will continue to do so. As we trust machines more and more to carry us from a safe zone into a zone of potential danger, we may face the risk of machine error or malicious code. Think about transportation, for example.

Health. Think of personal diagnostic devices and networked medical data. AI will continue to advance in preventive medicine and crowdsourced genetic data analysis. Again, we must have assurances that these machines will not engage in malicious subversion or harm us in any way.

Fate. AI predicts with increasing accuracy where you will go and what you will do. As this industry evolves, it will know what decisions we make, where we will go next week, what products we will buy or even when we die. Do we want others to have access to this data?

Knowledge. Machines de facto accumulate knowledge. But if they acquire knowledge faster than humans can verify it, how can we trust its integrity?

In conclusion, our way forward is a vigilant and responsible approach to AI that mitigates the potential troubles of a technological supernova. We will either tame the potential of AI and pray that it brings only the best to humanity, or we will burn in its potential, which will reflect the worst in us.

Source: hi-news.ru
