Scientist Stuart Russell on artificial intelligence

In January, British-American computer scientist Stuart Russell became the first signatory of an open letter calling on researchers to look beyond the goal of merely making artificial intelligence more and more powerful. "We recommend expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial," the letter states. "Our AI systems must do what we want them to do."

Thousands of people have since signed the letter, including leading artificial intelligence researchers at Google, Facebook, Microsoft and other industry hubs, as well as leading computer scientists, physicists and philosophers around the world. By the end of March, about 300 research groups had applied to pursue new research on "keeping artificial intelligence beneficial" with funds contributed by the letter's 37th signatory, the inventor and entrepreneur Elon Musk.

Russell, 53, is a professor of computer science and founder of the Center for Intelligent Systems at the University of California, Berkeley, and has long been studying the power and dangers of thinking machines. He is the author of more than 200 papers and of the field's standard textbook, "Artificial Intelligence: A Modern Approach" (co-authored with Peter Norvig). The rapid progress in AI, however, has given Russell's long-standing concerns new urgency.

Lately, he says, artificial intelligence has made great strides, partly thanks to neuro-inspired learning algorithms. These are used in Facebook's face-recognition software, in smartphone personal assistants and in Google's self-driving cars. In a recent result that caused a stir worldwide, a network of artificial neurons learned to play Atari video games better than humans in a matter of hours, given only the data shown on the screen and the goal of maximizing the score, with no pre-programmed knowledge of aliens, bullets, left, right, up or down.

"If your newborn baby did that, you would think it was possessed," says Russell.

Quanta Magazine interviewed Russell over breakfast at the American Physical Society's March 2015 meeting in San Antonio, Texas, a day before he was due to give a talk on the future of artificial intelligence. In this edited and condensed version of the interview, Russell reflects on the nature of intelligence and on the fast-approaching problems of machine safety.

You think the goal of your field should be to develop artificial intelligence that is "provably aligned" with human values. What does that mean?

It is a deliberately provocative statement, because it combines two things, "provably" and "human values", that seem incompatible. It may well be that human values will remain something of a mystery forever. But to the extent that our values are revealed in our behavior, you can hope to prove that the machine will be able to "get" most of them. There may be bits and pieces left in the corners that the machine does not understand or that we cannot agree on among ourselves. But as long as the machine gets the basics right, you should be able to show that it cannot do much harm.

How are you going to achieve this?

That is the question I'm working on right now: where does a machine get some approximation of the values that people would like it to have? I think one answer may be a technique called "inverse reinforcement learning." Ordinary reinforcement learning is a process in which you receive rewards and punishments as you act, and your goal is to work out the behavior that earns the most reward. That is what DQN (the Atari-playing system) does: it is given the game score, and its goal is to make that score higher. Inverse reinforcement learning works the other way around. You observe some behavior and try to work out what score that behavior is trying to maximize. For example, your home robot sees you crawl out of bed in the morning, grind up some strange brown round things in a noisy machine, perform a complicated operation with steam, hot water, milk and sugar, and then look happy. The system should conclude that part of what humans value in the morning is having coffee.

Books, movies and the internet contain a huge amount of information about human actions and attitudes toward those actions. That is an incredible resource for machines learning what human values are: who wins medals, who goes to prison, and why.
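
The contrast between ordinary and inverse reinforcement learning can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than Russell's actual method: the action names, the three candidate reward functions and the softmax model of a "noisily rational" human are all hypothetical. Instead of maximizing a known score, the code observes behavior and asks which candidate score best explains it.

```python
import math

# Hypothetical actions a household robot might observe in the morning.
ACTIONS = ["make_coffee", "make_tea", "drink_water"]

# Hypothetical candidate reward functions: each maps an action to a reward.
CANDIDATE_REWARDS = {
    "likes_coffee": {"make_coffee": 2.0, "make_tea": 0.5, "drink_water": 0.0},
    "likes_tea": {"make_coffee": 0.5, "make_tea": 2.0, "drink_water": 0.0},
    "indifferent": {"make_coffee": 1.0, "make_tea": 1.0, "drink_water": 1.0},
}


def choice_probability(reward, action, rationality=1.0):
    """Probability that a noisily rational agent picks `action` (softmax model)."""
    weights = {a: math.exp(rationality * reward[a]) for a in ACTIONS}
    return weights[action] / sum(weights.values())


def log_likelihood(reward, observed):
    """Log-probability of the observed action sequence under one reward function."""
    return sum(math.log(choice_probability(reward, a)) for a in observed)


def infer_reward(observed):
    """Return the name of the candidate reward that best explains the behavior."""
    return max(
        CANDIDATE_REWARDS,
        key=lambda name: log_likelihood(CANDIDATE_REWARDS[name], observed),
    )


observed_mornings = ["make_coffee", "make_coffee", "make_tea", "make_coffee"]
print(infer_reward(observed_mornings))
```

Real inverse reinforcement learning has to cope with sequential behavior and a vast space of possible reward functions; this sketch only shows the direction of inference, from behavior back to values.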

How did you get into artificial intelligence?

When I was in school, AI was by and large not considered an academic discipline. But I went to boarding school in London, and I had the opportunity to avoid compulsory rugby by taking a computer science course at a nearby college. One of my projects was a program that learned to play tic-tac-toe. I became very unpopular because I tied up the college's computer for hours on end. The following year I wrote a chess program and got permission from one of the professors at Imperial College to use their giant mainframe computer. It was fascinating to try to figure out how to get it to play chess.

Still, it was all just a hobby; at the time, my academic interest was physics. I studied physics at Oxford. Then, when I was applying to graduate school, I applied to do theoretical physics at Oxford and Cambridge and computer science at the Massachusetts Institute of Technology and Stanford, not realizing that I had missed all the application deadlines in the United States. Fortunately, Stanford waived the deadline, so I went there.

You have spent much of your career trying to understand what intelligence is, as a prerequisite for understanding how machines might achieve it. What have you learned?

During my dissertation research in the 1980s, I began to think about rational decision-making and the problem that it is, in practice, impossible. If you were rational, you would think: here is my current state, here are the actions I can take now, after that I can do this, then this; which path is guaranteed to lead me to my goal? The definition of rational behavior requires you to optimize over the entire future of the universe. That is computationally infeasible.

It makes no sense to define the goal of AI as something impossible, so I started asking: how do we actually make decisions?

So how do we do it?

One trick is to think over a short horizon and then guess what the rest of the future might look like. Take chess programs: if they were rational, they would play only moves that guarantee checkmate, but they don't. Instead, they look about a dozen moves ahead, estimate how useful the resulting states are, and then choose a move that they hope leads to one of the good states.
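
The "look a few moves ahead, then guess" idea can be sketched on a game far simpler than chess. The toy game below (take 1 to 3 stones from a pile; whoever takes the last stone wins), the function names and the `estimate` heuristic are all assumptions chosen for illustration. The search is a depth-limited negamax: it explores `depth` plies exactly and falls back on the heuristic guess when the horizon is reached.

```python
def legal_moves(stones):
    """Moves in the toy game: remove 1, 2 or 3 stones, never more than remain."""
    return [m for m in (1, 2, 3) if m <= stones]


def estimate(stones):
    """Hypothetical heuristic guess of a position's value for the player to move."""
    # In this game a pile that is a multiple of 4 is bad for the player to move.
    return -0.5 if stones % 4 == 0 else 0.5


def negamax(stones, depth):
    """Value of the position for the player to move, looking `depth` plies ahead."""
    if stones == 0:
        return -1.0  # the previous player took the last stone and won
    if depth == 0:
        return estimate(stones)  # horizon reached: stop searching and guess
    return max(-negamax(stones - m, depth - 1) for m in legal_moves(stones))


def choose_move(stones, depth=3):
    """Pick the move whose resulting position looks worst for the opponent."""
    return max(legal_moves(stones), key=lambda m: -negamax(stones - m, depth - 1))


print(choose_move(10))
```

With these assumptions the program takes 2 stones from a pile of 10, leaving a multiple of four, without ever searching to the end of the game. A chess engine follows the same pattern with a far richer evaluation function.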

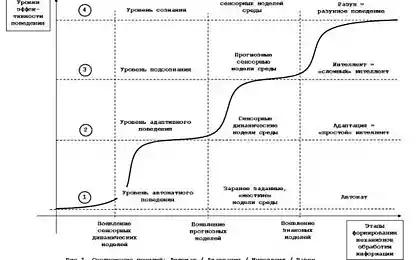

Another essential thing is to think about the decision problem at multiple levels of abstraction: "hierarchical decision making." A person performs on the order of 20 trillion physical actions in a lifetime. Coming to this conference to give a talk takes 1.3 billion actions or so. If you were rational, you would try to look ahead across all 1.3 billion steps, which is absurdly impossible. People cope thanks to a huge store of abstract, high-level actions. You don't think, "First I can move either my left foot or my right foot, and after that I can do so-and-so." You think, "I'll go on Expedia and book a flight. When I land, I'll take a taxi." And that's it. You don't think about it any further until the moment you actually need the details. That is basically how we live. The future is spread out, with a lot of detail close to us in time, but mostly laid out in large chunks like "get a degree" or "have children."
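
Hierarchical decision making can be illustrated with a small sketch in which a plan stays abstract until a step is about to be carried out. The action names and the `REFINEMENTS` table are hypothetical; the point is only that later steps remain coarse while the next step is expanded into finer ones.

```python
# Hypothetical library of abstract actions and the steps each one expands into.
REFINEMENTS = {
    "attend_conference": ["arrange_trip", "take_taxi", "give_talk"],
    "take_taxi": ["find_taxi_sign", "ride_to_venue"],
}


def refine_first_step(plan):
    """Expand only the first step of the plan, leaving later steps abstract."""
    first, rest = plan[0], plan[1:]
    return REFINEMENTS[first] + rest


def execute(goal):
    """Carry out a goal, refining each abstract step only when it comes due."""
    plan = [goal]
    executed = []
    while plan:
        # Keep refining until the next step is primitive (has no refinement).
        while plan[0] in REFINEMENTS:
            plan = refine_first_step(plan)
        executed.append(plan.pop(0))
    return executed


print(execute("attend_conference"))
```

Here "take_taxi" is not broken down until "arrange_trip" is finished, mirroring the way a traveler ignores the taxi rank until stepping off the plane.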

Are computers currently capable of hierarchical decision-making?

That is one of the missing pieces of the puzzle right now: where do all these high-level actions come from? We don't think that programs like the DQN network are working out abstract representations of actions. There are many games that DQN just doesn't get, and the difficult ones are those that require thinking many steps ahead in terms of primitive actions, the kind where a person would think, "What I need to do now is open the door," and opening the door involves fetching the key and so on. If the machine has no representation of "open the door," it cannot make any real progress on the task.

But if this problem is solved, and that is quite possible, we will see another significant increase in machine capabilities. There are two or three problems like this, and if all of them are solved, it is not clear to me that any major obstacle would remain on the way to human-level AI.

What worries you about the possibility of human-level AI?

The first edition of my book (1994) has a section called "What if we succeed?", because it seemed to me that people were not thinking about that very much. Probably it just seemed too distant and impossible back then. But it is obvious that success would be a huge event. "The biggest event in human history" might be a good way to describe it. And if so, we need to put much more thought than we do now into what shape that event might take.

The main idea of the intelligence explosion is that once machines reach a certain level of intelligence, they will be able to work on AI just as we do and improve their own capabilities, reprogramming themselves and redesigning their hardware, and their intelligence will grow by leaps and bounds. Over the past few years the community has gradually refined its arguments as to why this might be a problem. The most convincing argument concerns value alignment: you build a system that is extremely good at optimizing some utility function, but the utility function is not quite right. Oxford philosopher Nick Bostrom gives the example of paper clips. You say, "Make some paper clips," and in the end the entire planet is turned into a vast dump of paper clips. You build a super-optimizer, but the utility function you give it may turn out to be useless, or worse.

How about differences in human values?

That is an intrinsic problem. You could say that machines should step aside and do nothing in areas where there may be a conflict of values. That may be a difficult task. I think we will have to build in these value systems. If you want a home robot, it needs a good grasp of human values; otherwise it will do stupid things, like putting the cat in the oven because there is no food in the fridge and the children are hungry. Real life is full of such trade-offs. If the machine makes these trade-offs in a way that shows it is missing something obvious to people, you are hardly going to want that machine in your home.

I don't see any real way around the emergence of some kind of values industry. I also think there is a huge economic incentive to get it right. It only takes a domestic robot doing one or two things wrong, like roasting the cat in the oven, for people to lose confidence in it instantly.

Then the question arises: if we manage to teach intelligent systems to behave correctly, then as we move to more and more intelligent systems, will we need ever better value systems, cleaned of all the loose ends, or will the machines simply keep behaving themselves? I have no answer to that question yet.

You have argued that we need to mathematically verify the behavior of AI under all possible circumstances. How would that work?

One of the difficulties people point to is that a system may arbitrarily produce a new version of itself that has different goals. This is one of the scenarios that science fiction writers talk about all the time: somehow the machine spontaneously acquires the goal of destroying the human race. The question is: can you prove that your system will never, no matter how smart it becomes, overwrite the original goals set by people?

It would be relatively easy to prove that the DQN system, as written, could never change its goal of optimizing the score. But there is a hack people talk about called "wire-heading," in which you actually get into the Atari game console and physically change the thing that produces the score on the screen. For DQN that is not feasible yet, because it acts only within the game and has no manipulator. But if the machine operates in the real world, this becomes a serious problem. So, can you prove that your system is designed in such a way that it can never change the mechanism that delivers its score, even if it wants to? That is much harder to prove.

Are there any promising advances in this direction?

There is an emerging field called cyber-physical systems, which deals with systems that couple computers to the real world. With a cyber-physical system, you have a set of bits representing an air traffic control program, then you have real airplanes, and what you care about is that those airplanes do not collide. You are trying to prove a theorem about the combination of the bits and the physical world. You need to write a fairly conservative mathematical description of the physical world (airplanes can accelerate within such-and-such limits), and your theorem will remain true in the real world as long as the real world stays inside that envelope of behaviors.

Yet you have said that it may be mathematically impossible to formally verify an AI system?

There is a general problem of "undecidability" in many of the questions you can ask about computer programs. Alan Turing showed that no computer program can decide, for every other program, whether it will eventually produce an answer or get stuck in an infinite loop. So if you start with one program, and it can rewrite itself to become any other program, you have a problem, because you cannot prove that all possible other programs will satisfy a given property. So should we worry about undecidability for AI systems that rewrite themselves? They will rewrite themselves into a new program based on the existing program plus the experience they gain from the real world. What effect can interaction with the real world have on how the next program is designed? We don't know yet.

Source: hi-news.ru
