
Robot car logic: from machine vision to transmission control

A. Zhukovsky, S. Usilin, V. Postnikov

Today we want to talk about a new project that began just over a year ago at the Department of Cognitive Technologies at MIPT.

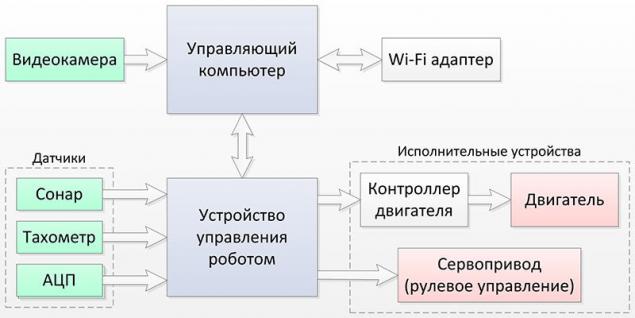

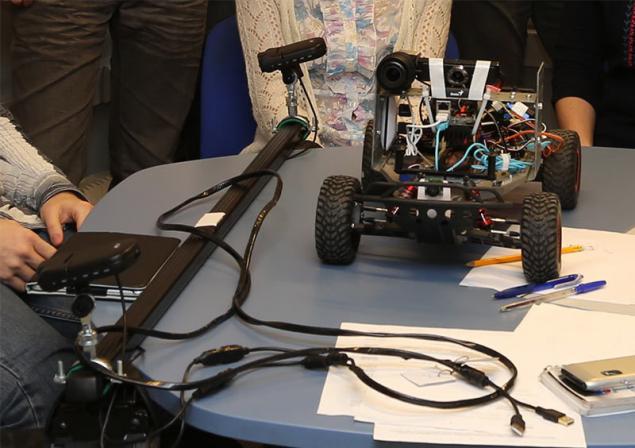

The goal is to build a machine vision system for a robot vehicle (Fig. 1) that processes a video stream in real time, recognizes the surrounding scene, detects objects, and generates control actions aimed at solving the task at hand.

Figure 1

Here we are not trying to recreate the real conditions of a road scene, which spares us all the "charms" of small-scale modeling.

To begin with, we wanted to use simple examples to work out the basic architectural components of the system (a framework for receiving the video stream and processing it in a distributed way across a combination of minicomputers and video cameras, as a prototype of a System-on-a-Chip (SoC)) that could later be useful for solving more complex problems.

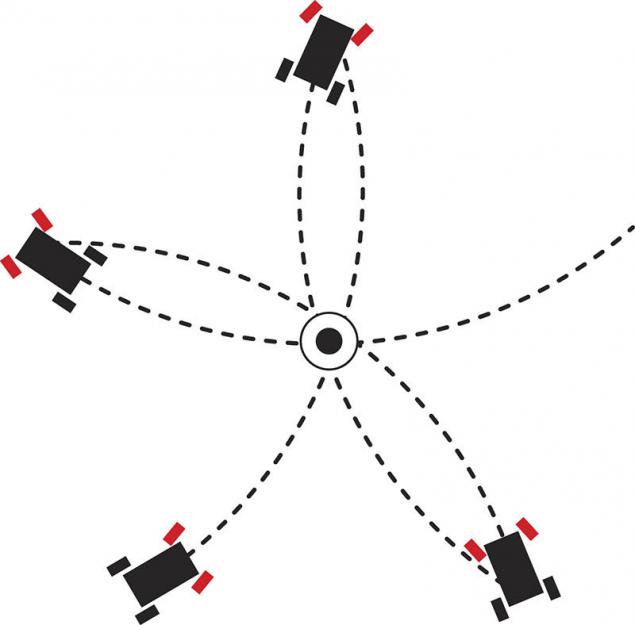

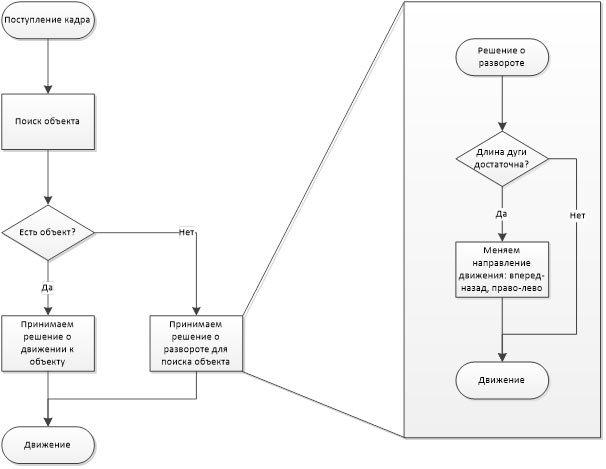

We first taught the robot to move along a corridor and to detect simple objects, such as an orange traffic cone. The task was for it to drive up to the object and stop. Then we decided to play with a ball. In the current version, if the ball is in the camera's field of view, the robot detects it, accelerates, and pushes it with its bumper. If the ball leaves the camera's field of view, the robot starts searching for it.
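The ball-chasing behaviour described above can be illustrated with a small decision function. This is only a minimal sketch under stated assumptions, not the project's actual code: the `Detection` and `Command` types, the frame width, and all throttle and steering constants are hypothetical, and the detector itself is left out (it is assumed to return the ball's horizontal position, or nothing when the ball is not visible).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    """Hypothetical detector output: horizontal centre of the ball, in pixels."""
    x: float


@dataclass
class Command:
    """Hypothetical control command sent to the chassis."""
    throttle: float  # 0.0 (stopped) .. 1.0 (full speed)
    steering: float  # -1.0 (full left) .. 1.0 (full right)


# All constants below are assumptions chosen for illustration.
FRAME_WIDTH = 640       # frame width in pixels
SEARCH_THROTTLE = 0.3   # slow speed while looking for the ball
SEARCH_STEERING = 1.0   # drive in a circle while searching
CHASE_THROTTLE = 0.8    # accelerate once the ball is visible


def decide(ball: Optional[Detection]) -> Command:
    """Search when no ball is visible; otherwise steer towards it and accelerate."""
    if ball is None:
        return Command(SEARCH_THROTTLE, SEARCH_STEERING)
    half = FRAME_WIDTH / 2
    # Normalised offset of the ball from the image centre, clamped to [-1, 1].
    offset = (ball.x - half) / half
    steering = max(-1.0, min(1.0, offset))
    return Command(CHASE_THROTTLE, steering)
```

Calling `decide` once per processed frame gives a simple proportional steering rule: a ball in the centre of the image yields zero steering, and a ball at the edge yields full lock in that direction.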

Video shot during a talk at the MIPT fall conference of young scientists, right in the corridor of the main building

Now we are teaching the robot to run a timed slalom (the "snake"). This exercise lets us assess the quality of the control system and its progress from version to version, and compare it with the quality of manual control by a person.

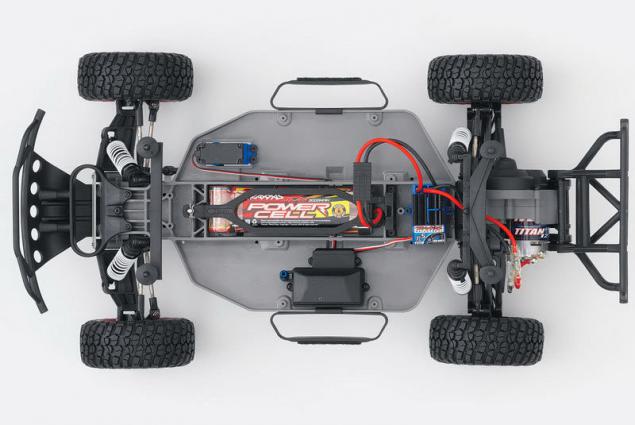

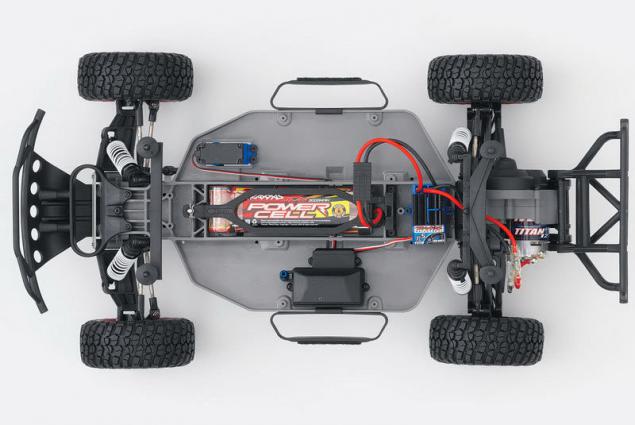

Initially, our robot contained only a control computer, a camera and the chassis itself. The chassis is a Traxxas Slash 2WD, a 1:10-scale model of a sport off-road vehicle (Fig. 2, Fig. 3).

Figure 2. Traxxas Slash 2wd

The chassis controller is based on the Arduino Nano, although in practice it uses only the ATMega32 microcontroller.

A little later we added a front sonar to the circuit to monitor the distance to obstacles; put simply, so that the robot would stop ramming its bumper into corners and walls.
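As a rough illustration of how a sonar reading can become a "stop" decision, the sketch below converts a raw ADC value into a distance. It assumes the LV-MaxSonar-EZ2 listed among the version 2.0 components is read through its analog output, which according to the MaxBotix datasheet scales as Vcc/512 per inch, and that the ADC is a 10-bit converter referenced to the same supply. The stop threshold and the function names are hypothetical.

```python
INCH_TO_CM = 2.54
ADC_MAX_COUNTS = 1024     # assumption: 10-bit ADC referenced to the sensor supply
SONAR_STEPS = 512         # analog output of Vcc/512 per inch (datasheet scaling)
STOP_DISTANCE_CM = 40.0   # hypothetical safety threshold


def adc_to_cm(counts: int) -> float:
    """Convert a raw ADC reading of the sonar's analog output to centimetres."""
    # Each inch is Vcc/512, and each ADC count is Vcc/1024, so inches = counts / 2.
    inches = counts * SONAR_STEPS / ADC_MAX_COUNTS
    return inches * INCH_TO_CM


def must_stop(counts: int) -> bool:
    """Return True when the measured obstacle is closer than the threshold."""
    return adc_to_cm(counts) < STOP_DISTANCE_CM
```

Because both the sensor output and the ADC are ratiometric to the supply voltage under these assumptions, the conversion does not depend on the exact value of Vcc.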

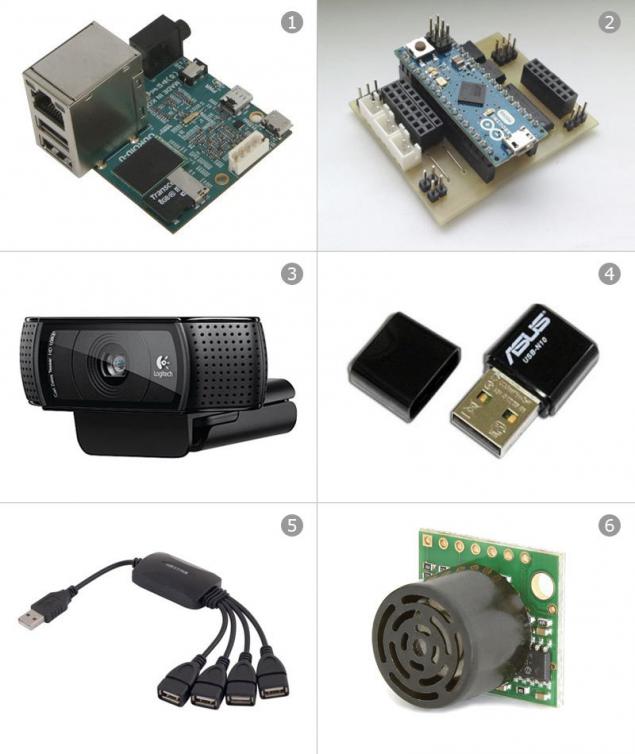

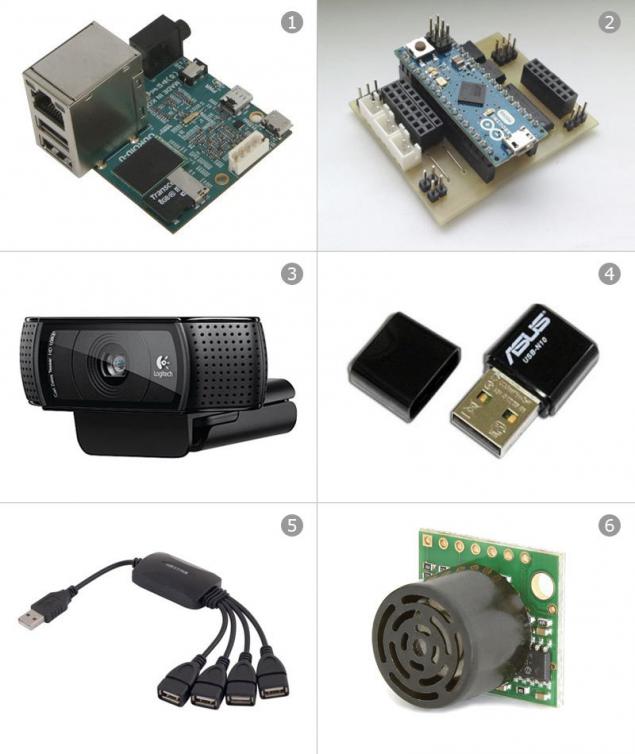

Figure 3. Traxxas Slash 2wd

In the first version of the robot, video was streamed over HTTP and the control signals were generated on a desktop computer. In the current version 2.0 (shown in the video), the whole loop is closed on board, with the main video-processing load falling on an Odroid U2 minicomputer (Fig. 4, 1).

In addition to the computer, version 2.0 includes:

- the robot control unit (Fig. 4, 2);

- a Logitech HD Pro C920 / Genius WideCam 1050 video camera (practically any webcam can be used) (Fig. 4, 3);

- an ASUS USB-N10 Wi-Fi adapter (Fig. 4, 4);

- a USB hub (Fig. 4, 5);

- an LV-MAXSONAR-EZ2 sonar (Fig. 4, 6).

Figure 4

The functions of the robot control unit include:

- executing commands from the control computer;

- generating the PWM control signals;

- controlling external loads (7 channels);

- processing sensor signals: sonars (8 channels), Hall sensor, battery voltage sensor (ADC);

- protecting the robot: emergency stop triggered by the front sonar, and stop when the control signal is lost.
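Two of the functions listed above, PWM generation and the protective stops, can be sketched together. The example is written in Python for readability, whereas real firmware for the microcontroller would be written differently. It assumes the common RC convention of a 1000 to 2000 µs pulse with neutral at 1500 µs; the timeout value and the names `to_pulse_us` and `Failsafe` are hypothetical and do not come from the project.

```python
NEUTRAL_US = 1500   # assumption: usual RC neutral pulse width, microseconds
SPAN_US = 500       # assumption: full deflection is neutral +/- 500 us
TIMEOUT_S = 0.5     # hypothetical: commands older than this count as lost


def to_pulse_us(value: float) -> int:
    """Map a command in [-1, 1] to a pulse width in microseconds."""
    value = max(-1.0, min(1.0, value))
    return round(NEUTRAL_US + value * SPAN_US)


class Failsafe:
    """Hold the last throttle command; fall back to neutral when it is unsafe."""

    def __init__(self, timeout_s: float = TIMEOUT_S) -> None:
        self.timeout_s = timeout_s
        self.last_command_time = None  # no command received yet
        self.throttle = 0.0

    def on_command(self, throttle: float, now: float) -> None:
        """Record a throttle command received from the control computer."""
        self.throttle = throttle
        self.last_command_time = now

    def throttle_pulse_us(self, now: float, obstacle_close: bool) -> int:
        """Return the throttle pulse width, or neutral on signal loss or obstacle."""
        signal_lost = (
            self.last_command_time is None
            or now - self.last_command_time > self.timeout_s
        )
        if signal_lost or obstacle_close:
            return NEUTRAL_US
        return to_pulse_us(self.throttle)
```

Passing the timestamp in explicitly, rather than reading a clock inside the class, keeps the logic easy to test: the same object can be driven with simulated times to check that it returns to neutral once commands stop arriving.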