
Why rising quality leads to rising non-quality, or should the main function actually work

It is foolish to argue with the fact that analog CCTV is a thing of the past: cheap IP cameras deliver image quality comparable to expensive analog ones. Besides, IP cameras are limited by nothing except the performance of the recorder, whereas analog cameras demand strict compatibility with capture cards, matched signal levels across transmitter, amplifier and receiver, and other shamanism.

When a system is designed around IP cameras, any camera can be removed at any time and replaced with a better one. If you keep the IP address and the login and password, you probably will not even have to change the receiver's settings; a better picture simply starts going into the archive.

On the other hand, this imposes requirements on the receiver: it must be ready to work with any resolution, any bitrate, any codec and any protocol... Well, or at least to work correctly with the ones it declares support for.

In the software world there are two ways. There is the linux-way: a collection of small programs, each of which does one thing but does it very well. And there is the windows-way: huge all-in-one combines that can do everything and a little more. The main problem of the linux-way is the lack of an interface. To get all the benefits you have to study the man pages (or at least read --help) and experiment, and work out for yourself what can be combined with what, and how. The main problem of the windows-way is losing sight of the main function. As extra features pile up, tests of the key functionality get lost very quickly, and eventually problems start even there. And then inertia of thinking sets in: "this is the main function, it is the most thoroughly tested of all, there cannot be a bug in it, the user is doing something wrong".

Back to our sheep: the quality of IP cameras is growing steadily. Anyone who has seen the difference after a FullHD camera is installed where even an ultra-cool 700TVL one used to be will not want to go back (all the more so since the price is now about the same). Further progress means that 3MP (2048x1536) and 5MP (2592x1944) cameras are no longer rare. The only price for better quality is the rising cost of storage and transmission. However, the price per gigabyte of hard drive space has been falling for a long time (and has fully recovered from the flood at the factories), so this is not a problem.

Just today I had a small dispute with maxlapshin about whether a software vendor owes the user anything after the sale. Yes, any software is sold "as is", without any promises. So even after paying good money, you are not guaranteed to get software that works at all. It is just that if the software does not work and this becomes known, the flow of customers will dry up one day. But while it keeps selling, whether bugs get fixed (let alone features implemented) remains a big question.

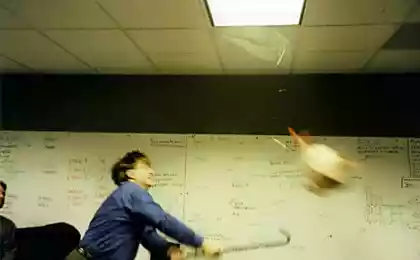

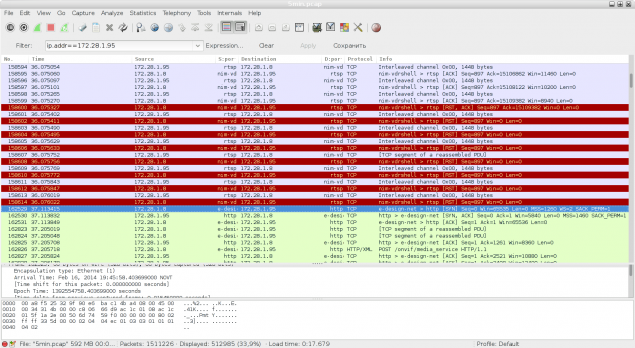

That ends the introduction. Now a short but very telling video (you do not even have to play it, the freeze-frame shows everything):

This is a textbook glitch that I see in almost all video recording software. I have seen it in VideoNet, I see it regularly in Axxon Next, and, as the video shows, Trassir greeted me with it too. It is the same story even in the cameras' own viewers. You could blame the camera for all of it. You could blame the network. Or high CPU load. Or electromagnetic interference. You may be advised to check the memory. Or to reinstall the system. In short, there are plenty of ways to keep a person busy with anything but the actual problem...

Except that when I connect to the same RTSP stream with vlc, there are no artifacts and no glitches. On the same computer I run a quick-and-dirty test script that reads the stream from the camera and writes it to disk, with no losses and no problems. And yet only one remedy is ever offered: reduce the camera's resolution and lower the bitrate.

That is, despite the flexibility and the claimed support for a heap of cameras over ONVIF and RTSP... you still cannot get any of the advantages of IP surveillance, because the receiving software was not ready for it.

The main reason for this behavior, oddly enough, is the IP network, the codecs and... sewer plumbing.

So, to begin with, a brief bit of basic theory about how an IP network works. Everything on the network travels in the form of packets. Each packet has a maximum size (the MTU, 1500 bytes on an ordinary link), a sender and a recipient. The packet is forwarded hop by hop along the way and eventually reaches the recipient. Or it may not arrive. Or it may arrive damaged. Or only a piece may arrive... In general, there are options.

On top of these packets sit the transport protocols; the ones of interest to us are UDP and TCP. UDP changes almost nothing: it adds a sender port and a destination port so that packets can be told apart. TCP bolts on a pile of logic that "guarantees delivery". More precisely, it guarantees either delivery or an error if something cannot be delivered. Well... it promises to guarantee (and promising is not marrying ;) Any admin has repeatedly seen "hung" connections, on mobile Internet for example).

How does TCP guarantee delivery? Simply: every packet must be acknowledged. No acknowledgement for some time means the packet is lost, so it is sent again. But if you wait for an acknowledgement after every packet, the speed drops horribly, and the higher the delay between the two endpoints, the worse it gets. Hence the concept of a "window": we may send at most N packets without acknowledgement and only then wait. But N acknowledgements is also too many, so the receiver does not acknowledge every packet either; it just reports the "highest one seen". That means fewer acknowledgements. And an acknowledgement can ride along with data packets going the other way, so as not to get up twice. In general there is a sea of logic, all aimed at keeping the delivery promise while using the channel to the maximum. The window size is floating and is chosen by the system based on voodoo, settings and the weather on Mars. On top of that, it changes while the stream is running. We will come back to this point later.
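To put rough numbers on why waiting for every acknowledgement is so slow, here is a minimal sketch. It relies on the usual upper bound that a sender can have at most one window of unacknowledged data in flight per round trip. The 1460-byte segment, the 64 KB window and the round-trip times are illustrative assumptions, not measurements, and the function name is made up for this example.

```python
def max_throughput_mbit(window_bytes, rtt_ms):
    """Upper bound on throughput: one window of data per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000

SEGMENT_BYTES = 1460        # assumed payload of one full-size segment (1500-byte MTU)
WINDOW_BYTES = 64 * 1024    # assumed window size, for illustration only

for rtt_ms in (1, 20, 100):  # hypothetical round-trip times
    one_by_one = max_throughput_mbit(SEGMENT_BYTES, rtt_ms)
    windowed = max_throughput_mbit(WINDOW_BYTES, rtt_ms)
    print(f"RTT {rtt_ms:>3} ms: one packet per ack {one_by_one:7.2f} Mbit/s, "
          f"64 KB window {windowed:7.2f} Mbit/s")
```

The gap between the two columns grows with the delay, which is the effect described above.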

Now back to our sheep: H264 over RTSP. (In fact it hardly matters which codec and which transport protocol. Do not think that if you use some ingenious protocol of your own, many times simpler than RTSP, none of this concerns you.) The stream consists of periodically repeating keyframes plus a flow of changes relative to the current state. When we connect to the video we have to wait for a keyframe, take it as the current state, and then receive diffs, which are applied on top and displayed.

What does this mean? It means that every X seconds a lot of data arrives at once: a full frame. And the higher the camera resolution and the bitrate, the bigger it is (although, to be honest, the bitrate has little effect on keyframe size). So at time 0 we have the start of a keyframe, at which a full frame arrives all at once. For example, say the camera is a 3 megapixel one: 2048x1536 = 3,145,728 pixels, which after compression comes to a measly ~360 KB. The total stream is 8 megabit/s = 1 megabyte/s, with a keyframe every 5 seconds and FPS = 18. Then we get something like 360 KB, then 52 KB every 1/18 of a second, then 360 KB again 5 seconds later, then 52 KB frames again.
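The 52 KB figure follows from the other numbers in the example. A small sketch of the arithmetic, assuming 1 megabyte is taken as 1,000,000 bytes and 1 KB as 1000 bytes (the function and constant names are invented for this illustration):

```python
STREAM_BYTES_PER_SEC = 1_000_000  # 8 megabit/s, taken as 1 megabyte/s
KEYFRAME_INTERVAL_SEC = 5
FPS = 18
KEYFRAME_BYTES = 360_000          # ~360 KB compressed keyframe

def diff_frame_bytes(stream_bps, interval_sec, fps, keyframe_bytes):
    """Average size of a non-key frame within one keyframe interval."""
    total_bytes = stream_bps * interval_sec   # everything sent in the interval
    diff_frames = fps * interval_sec - 1      # all frames except the keyframe
    return (total_bytes - keyframe_bytes) / diff_frames

avg_diff = diff_frame_bytes(STREAM_BYTES_PER_SEC, KEYFRAME_INTERVAL_SEC,
                            FPS, KEYFRAME_BYTES)
print(f"average diff frame: {avg_diff / 1000:.1f} KB")
print(f"keyframe is {KEYFRAME_BYTES / avg_diff:.1f}x larger than a diff frame")
```

So the stream averages 1 megabyte per second, but once every 5 seconds the receiver is hit by a burst roughly seven times larger than an ordinary frame.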

Now back to UDP and TCP again. A packet arriving at the network card is placed in the card's buffer, and the processor gets a flag (or an interrupt) saying that data is available. The processor suspends whatever useful work it was doing, pulls the packet out of the card, and starts walking it up the TCP/IP stack, unwrapping it layer by layer. This is done at the highest priority (it is work with hardware). But we are still on Windows or Linux or something else that is not an RTOS, so there are no guarantees about when the application will get to this packet. Therefore, as soon as the system has worked out which connection the packet belongs to, it tries to place the packet in that connection's buffer.

With UDP: if there is no space in the buffer, the packet is discarded.

With TCP: if there is no space in the buffer, the flow control algorithm kicks in: an overflow signal is sent to the sender, saying in effect "shut up for a while until I sort myself out". Once the application takes some data out of the buffer, the system tells the sender "OK, carry on" and communication resumes.

Now let's put it all together and write out how data from the camera is received. To start with, the simple case: UDP. The camera reads the next frame, feeds it to the encoder, gets compressed data back, cuts it into packets, adds headers and sends them to the recipient. The recipient receives the first 260 UDP packets, then a pause, another 40 packets, a pause, another 40 packets, and so on. The first 260 UDP packets arrive almost instantly, within about 30 milliseconds; then at the 55th millisecond the next 40 arrive (taking another 4 milliseconds).

Suppose our buffer is 128 kilobytes. Then it fills up in 10 milliseconds. And if by that time the application has not emptied the buffer by reading everything in one burst (in reality it reads one packet at a time...), we lose the rest of the keyframe. Given that this is not an RTOS and the application can be forcibly put to "sleep" at that very moment for any reason (for example, the OS is flushing buffers to disk), the only way to lose nothing is to have a network buffer larger than the amount of data that arrives while we may be asleep. That is, ideally the OS buffer should be set to about 2 seconds of stream, in this case 2 megabytes. Otherwise loss is guaranteed.
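The packet counts and timings in the UDP walkthrough can be reproduced with a few lines. This is only a rough sketch: it assumes a 100 Mbps link, 1400 bytes of video payload per packet, ignores header overhead, and takes KB as 1000 bytes; all names are invented for the illustration.

```python
import math

LINK_BITS_PER_SEC = 100_000_000  # assumed 100 Mbps link to the camera
PAYLOAD_BYTES = 1400             # assumed video payload per packet
KEYFRAME_BYTES = 360_000
DIFF_BYTES = 52_000
BUFFER_BYTES = 128_000

def packets(nbytes):
    """Number of packets needed to carry nbytes of payload."""
    return math.ceil(nbytes / PAYLOAD_BYTES)

def wire_time_ms(nbytes):
    """Time to push nbytes through the link, headers ignored."""
    return nbytes * 8 / LINK_BITS_PER_SEC * 1000

def lost_if_asleep(burst_bytes, buffer_bytes):
    """Bytes dropped if the application reads nothing while the burst arrives."""
    return max(0, burst_bytes - buffer_bytes)

print(f"keyframe: {packets(KEYFRAME_BYTES)} packets, {wire_time_ms(KEYFRAME_BYTES):.1f} ms")
print(f"diff frame: {packets(DIFF_BYTES)} packets, {wire_time_ms(DIFF_BYTES):.1f} ms")
print(f"buffer fills in {wire_time_ms(BUFFER_BYTES):.1f} ms")
print(f"lost from one keyframe if asleep: {lost_if_asleep(KEYFRAME_BYTES, BUFFER_BYTES)} bytes")
```

With these assumptions, an application that misses a single 30 ms window loses well over half of the keyframe, and every diff frame until the next keyframe is then applied to a broken picture.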

Okay, but we also have TCP! It guarantees delivery and will ask the sender to wait if need be! Let's switch to TCP and look at the same picture. The additional overhead can be neglected; let's just see what happens. So, 360 kilobytes of data come flying at us. Over a 100 Mbps link they are sent in about 30 milliseconds. Somewhere around the 10th millisecond the receiver's buffer is full, and the camera is asked to be quiet. Suppose that after a further 20 ms the application has read everything available in the buffer (in reality: read 4 bytes, then 1400, then another 4, then another 1400...) and the camera is told to continue. The camera sends another third of the keyframe and shuts up again. After another 20 ms things move on, but meanwhile the camera has kept producing data, which has piled up in the camera's own buffer. And here we come to a slippery point: how big is the TCP buffer inside the camera?

In addition, because of the constant "shut up" / "carry on", the effective bandwidth drops dramatically: instead of 100 Mbps we get 128 KB per 30 ms, which is about 34 megabits at most. In Windows Server, the default quantum is a fixed 120 ms. With 120 ms the speed limit becomes 8.5 Mbit. That is, on a server operating system with a 128 KB buffer, receiving an 8 Mbit stream is not merely difficult but next to impossible. On a desktop operating system it is easier, but problems will still show up at the slightest sneeze.

If the buffer is larger, things get better, but still, any instability in reading leads to problems: in the simplest case jerky motion, in other cases a damaged stream or a similar glitch if the TCP buffer inside the camera overflows.
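The bandwidth ceiling in this stop-and-go mode comes from a simple ratio: one full buffer is delivered per fill-and-drain cycle. A minimal sketch of that arithmetic, taking 128 KB as 128,000 bytes (an assumption made here so the result lines up with the 8.5 Mbit figure); the function name is hypothetical:

```python
BUFFER_BYTES = 128_000       # 128 KB receive buffer, taken as 128,000 bytes
STREAM_MBIT = 8              # the example camera stream

def stop_and_go_mbit(buffer_bytes, cycle_ms):
    """Throughput ceiling when one full buffer is delivered per cycle."""
    return buffer_bytes * 8 / (cycle_ms / 1000) / 1_000_000

for cycle_ms in (30, 120):   # 10 ms fill + 20 ms wait, and a 120 ms quantum
    limit = stop_and_go_mbit(BUFFER_BYTES, cycle_ms)
    headroom = limit / STREAM_MBIT
    print(f"cycle {cycle_ms:>3} ms: at most {limit:.1f} Mbit/s "
          f"({headroom:.2f}x the {STREAM_MBIT} Mbit stream)")
```

At a 120 ms cycle the ceiling sits barely above the stream's average rate, so there is essentially nothing left to absorb keyframe bursts or any extra delay in the application.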

From which only one conclusion can be drawn: here too the buffer should ideally have a margin of about 2 seconds of stream, in this case 2 megabytes. Otherwise problems are likely.
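For a receiver written against the standard socket API, asking the OS for such a buffer is a single `SO_RCVBUF` call. The sketch below is an illustration under that assumption, not the way any of the products mentioned above actually work. The OS is free to cap or adjust the request (Linux, for example, limits it by `net.core.rmem_max` and reports a doubled value), so the code reads back what was actually granted. The helper name `open_receiver` is made up for this example.

```python
import socket

STREAM_BYTES_PER_SEC = 1_000_000   # 8 megabit/s stream, taken as 1 megabyte/s
STALL_SEC = 2                      # how long we want to survive without reading
WANTED_BYTES = STREAM_BYTES_PER_SEC * STALL_SEC

def open_receiver(kind, wanted_bytes):
    """Create a socket and request a receive buffer of wanted_bytes.

    Returns the socket and the size the OS reports after the request.
    For TCP the option should be set before connecting.
    """
    sock = socket.socket(socket.AF_INET, kind)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, wanted_bytes)
    granted = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    return sock, granted

for name, kind in (("UDP", socket.SOCK_DGRAM), ("TCP", socket.SOCK_STREAM)):
    sock, granted = open_receiver(kind, WANTED_BYTES)
    print(f"{name}: asked for {WANTED_BYTES} bytes, OS reports {granted}")
    sock.close()
```

If the reported size is far below the request, the system-wide limit has to be raised as well; otherwise the application silently runs with a small buffer.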

Maybe I'm wrong, but if an application whose job is to receive and store streams from cameras cannot do that, it is a bug. And the bug should be fixed, rather than "solving" the problem by proposing to reduce the quality to that of an analog camera. Dixi.

Source: habrahabr.ru/post/227483/