
Time of flight

You know, I sometimes marvel at the bizarre workings of public opinion. Take 3D visualization technology, for example. Recent virtual reality glasses such as the Oculus Rift and Google Glass caused a huge public stir. But there is nothing new here: the first virtual reality helmets appeared back in the late 90s. Yes, they were cumbersome and ahead of their time, but why didn't they cause the same WOW effect? Or take 3D printers. For the past three years, articles about how cool they are or how quickly they will take over the world have been appearing twice a week. I don't argue: they are cool, and they will take over the world yet. But the technology was created back in the 80s and has been progressing sluggishly ever since. 3D TV? The year 1915...

All these technologies are nice and curious, but why all the hype over every sneeze?

What if I told you that in the last 10 years a 3D imaging technology unlike any other has been invented, developed and brought into mass production? It is already widely used, well established, and available to ordinary people in stores. Have you heard of it? (Probably only experts in robotics and related fields have already guessed that I'm talking about ToF cameras.)

What is a ToF camera? In the Russian Wikipedia (see the English one) you won't find even a brief mention of what it is. «Time-of-flight camera» translates literally into Russian as «времяпролётная камера». The camera determines distance using the speed of light: it measures the time of flight of a light signal emitted by the camera and reflected from each point of the scene. Today's standard is a 320 × 240 pixel matrix (the next generation will be 640 × 480). The camera measures depth with an accuracy of about 1 inch. Yes: a matrix of 76,800 sensors, each timing the light with a precision on the order of 1/10,000,000,000 (10^-10) of a second. On sale. For 150 bucks. And you can actually use it.
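A quick back-of-the-envelope check shows that these numbers are consistent (taking c ≈ 3·10^8 m/s). The light travels to the object and back, so a depth difference Δd changes the round-trip time by 2Δd/c:

$$
\Delta d = \frac{c\,\Delta t}{2} = \frac{3\cdot 10^{8}\ \text{m/s}\cdot 10^{-10}\ \text{s}}{2} = 1.5\ \text{cm},
\qquad
\Delta t_{1\,\text{inch}} = \frac{2\cdot 0.0254\ \text{m}}{3\cdot 10^{8}\ \text{m/s}} \approx 1.7\cdot 10^{-10}\ \text{s}.
$$

So a depth accuracy of about one inch really does require timing on the order of 10^-10 s.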

And now a bit more about the physics, the operating principles, and where you may have already met this beauty.

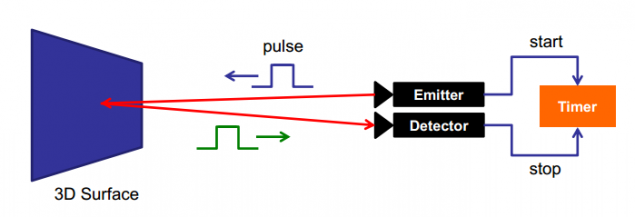

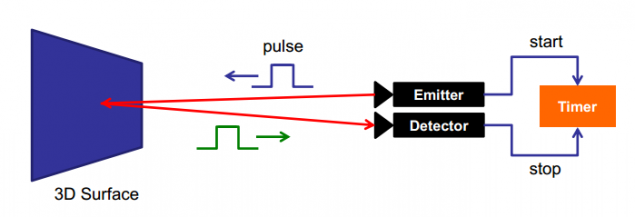

There are three main types of ToF cameras. Each type uses its own technology to measure the range to each point. The simplest and clearest is «Pulsed Modulation», a.k.a. «Direct Time-of-Flight imagers». A pulse is emitted, and each pixel of the matrix measures the exact time it returns:

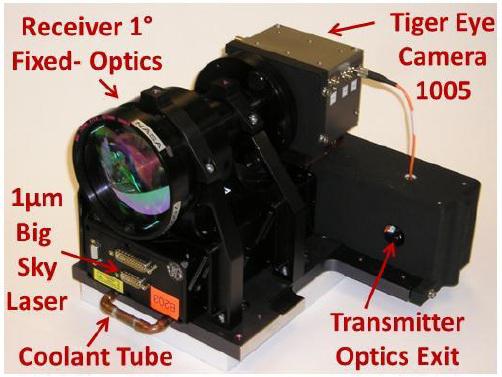

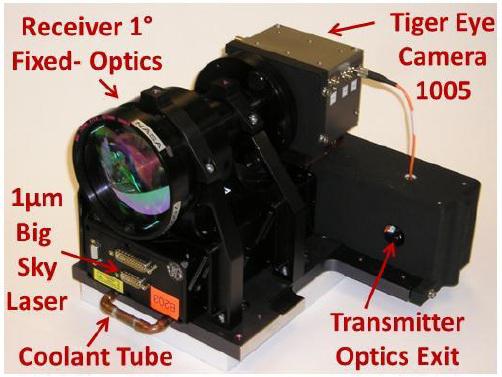

In effect, the matrix consists of triggers that fire when the wavefront arrives. The same principle is used in optically synchronized flashes, except that here the required accuracy is orders of magnitude higher. That is the main difficulty of the method: it requires very precise detection of the response time, which calls for specialized technical solutions (no, I could not find out which ones). NASA is currently testing these sensors for its landing spacecraft.

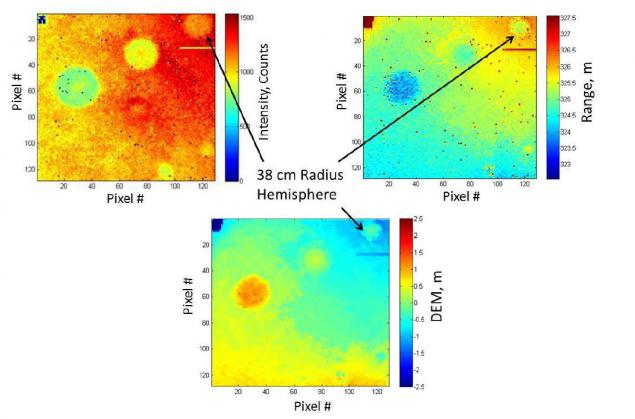

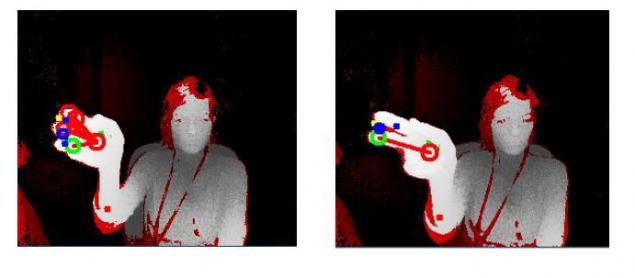

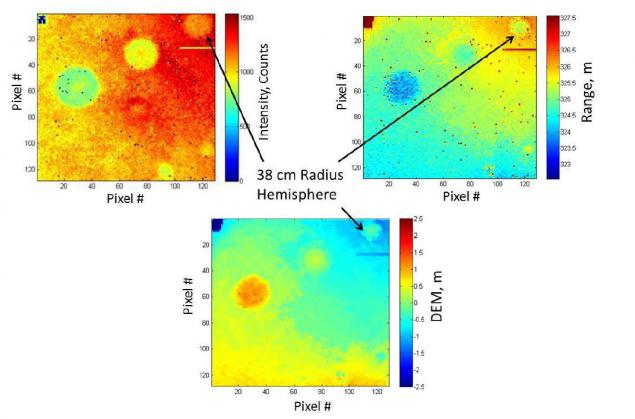

And here is the picture it produces:

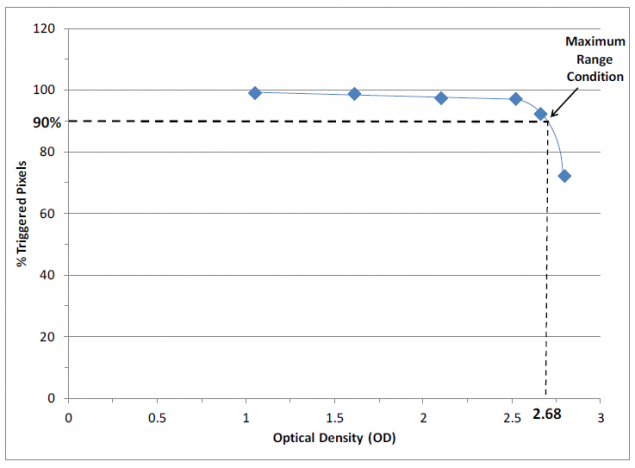

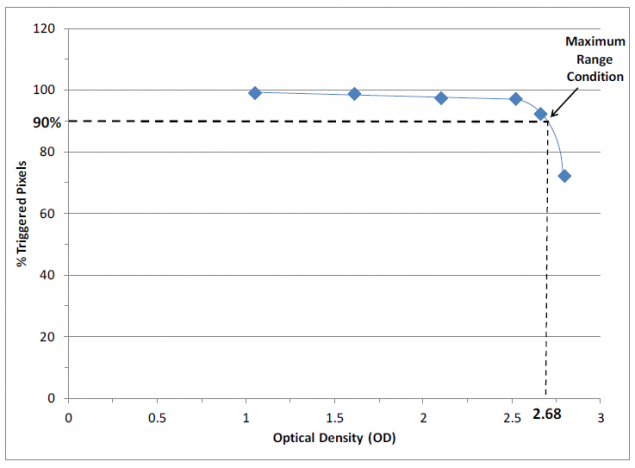

Their illumination is strong enough for the triggers to fire on light reflected from about 1 kilometer away. The graph shows the number of triggered pixels in the matrix versus distance; at 1 km, 90% of the pixels still work:

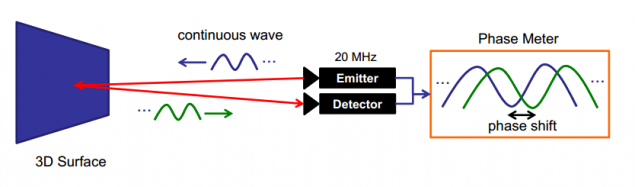

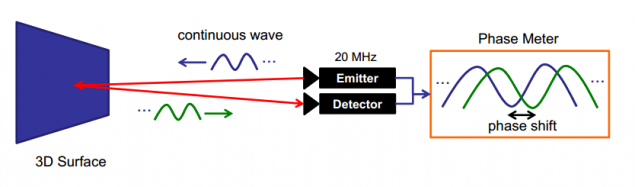
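To make the direct method concrete, here is a minimal Python sketch that turns per-pixel round-trip times into depths. It assumes each pixel simply reports the pulse's round-trip time in seconds; the function names and the toy input matrix are hypothetical, not taken from any real sensor API.

```python
# Minimal sketch of direct (pulsed) time-of-flight ranging.
# Assumption: each pixel reports the pulse's round-trip time in seconds.

C = 299_792_458.0  # speed of light in vacuum, m/s


def depth_from_round_trip(t_round_trip: float) -> float:
    """Distance to the reflecting point: the light travels there and back."""
    return C * t_round_trip / 2.0


def round_trip_time(distance_m: float) -> float:
    """Time the pulse needs to reach a point and return to the sensor."""
    return 2.0 * distance_m / C


def depth_map(times: list[list[float]]) -> list[list[float]]:
    """Convert a matrix of per-pixel round-trip times into a depth matrix."""
    return [[depth_from_round_trip(t) for t in row] for row in times]


if __name__ == "__main__":
    # A reflection from 1 km arrives about 6.67 microseconds after the pulse.
    print(f"{round_trip_time(1000.0) * 1e6:.2f} us")
    # A toy 2x2 "matrix" of measured round-trip times (hypothetical values).
    print(depth_map([[2e-8, 4e-8], [6.671e-6, 1e-10]]))
```

The arithmetic is trivial; the hard part, as noted above, is measuring those times per pixel with sub-nanosecond precision.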

The second method is continuous-wave modulation. The transmitter emits a modulated wave, and the receiver looks for the maximum correlation between what it sees and this wave. This determines the time the signal took to reflect and return to the receiver.

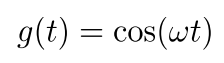

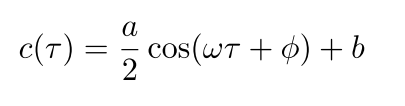

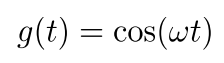
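As an illustration of the "maximum correlation" idea, here is a brute-force sketch in Python. It assumes a cosine emitted signal and a received signal with an offset and an amplitude (as in the formulas that follow). The modulation frequency, amplitude, offset and sampling grid are hypothetical values chosen for the example. Every candidate delay costs a full correlation, which is exactly what becomes too expensive per pixel in real time.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def received_signal(times, omega, tau, a=0.8, b=0.3):
    """Hypothetical received signal: offset b plus the emitted cosine scaled by a, delayed by tau."""
    return [b + a * math.cos(omega * (t - tau)) for t in times]


def brute_force_delay(received, times, omega, candidates):
    """Return the candidate delay whose shifted reference best correlates with the samples."""
    best_tau, best_score = candidates[0], -math.inf
    for tau in candidates:
        score = sum(r * math.cos(omega * (t - tau)) for r, t in zip(received, times))
        if score > best_score:
            best_tau, best_score = tau, score
    return best_tau


if __name__ == "__main__":
    f = 20e6                                   # hypothetical modulation frequency, Hz
    omega = 2 * math.pi * f
    period = 1 / f
    n = 64
    times = [k * period / n for k in range(n)]  # samples over one full period
    true_tau = 2 * 3.0 / C                      # round trip to an object 3 m away
    rx = received_signal(times, omega, true_tau)
    candidates = [k * period / 1000 for k in range(1000)]
    est = brute_force_delay(rx, times, omega, candidates)
    print(f"estimated distance: {C * est / 2:.3f} m")
```

Sampling over a whole period makes the constant offset b drop out of the correlation, so only the delayed cosine determines where the maximum lies.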

Let the emitted signal be:

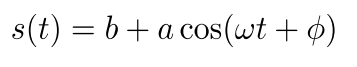

where ω is the modulation frequency. Then the received signal looks like:

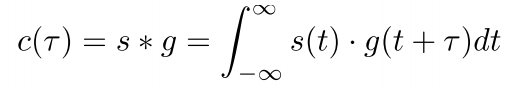

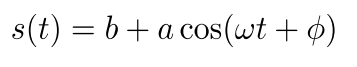

where b is the offset and a is the amplitude. The correlation of the incoming and outgoing signals is:

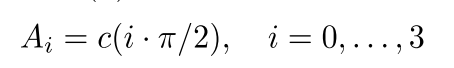

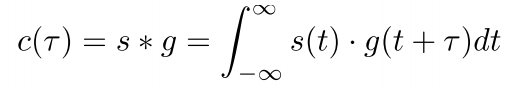

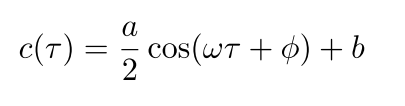

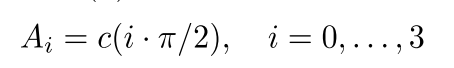

However, computing the full correlation over all possible time shifts at every pixel is hard to do in real time. So a clever trick is used: the received signal is sampled in 4 adjacent pixels with a 90° phase shift between them and correlated with itself:

Then the phase shift is defined as:

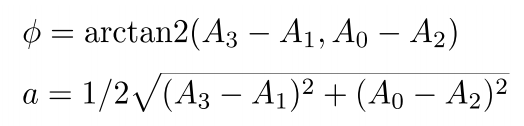

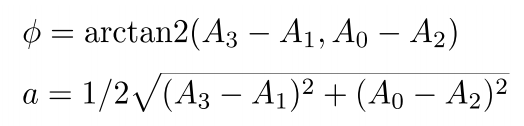
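The four-sample trick can be sketched in a few lines of Python. This assumes the common textbook convention that the correlation sampled at phase ψ is (a/2)·cos(ψ + φ) + b, with samples at ψ = 0°, 90°, 180° and 270°; the formulas in the images above may use a different sign or sample order, and the helper names are hypothetical.

```python
import math


def four_phase_samples(phi, a=1.0, b=0.5):
    """Hypothetical correlation samples c_k = (a/2) cos(k*pi/2 + phi) + b for k = 0..3."""
    return [a / 2 * math.cos(k * math.pi / 2 + phi) + b for k in range(4)]


def demodulate(c0, c1, c2, c3):
    """Recover phase, amplitude and offset from four samples taken 90 degrees apart."""
    phase = math.atan2(c3 - c1, c0 - c2) % (2 * math.pi)  # wrap into [0, 2*pi)
    amplitude = math.hypot(c3 - c1, c0 - c2)               # equals a in this model
    offset = (c0 + c1 + c2 + c3) / 4                       # equals b in this model
    return phase, amplitude, offset


if __name__ == "__main__":
    samples = four_phase_samples(phi=1.0, a=0.8, b=0.3)
    print(demodulate(*samples))
```

With this convention c3 − c1 = a·sin φ and c0 − c2 = a·cos φ, so a single `atan2` replaces the whole correlation search; the amplitude and offset come almost for free and are useful for judging signal quality.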

Knowing the phase shift and the speed of light, we obtain the distance to the object:

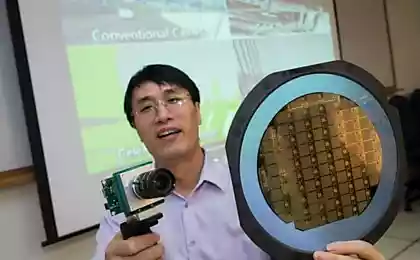

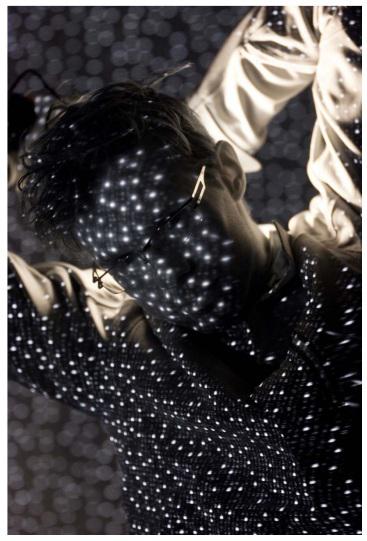

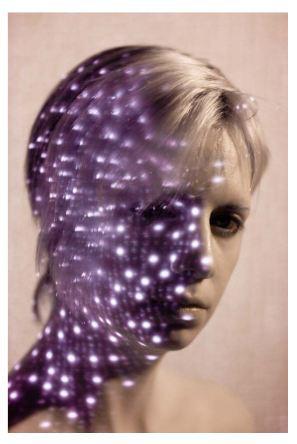

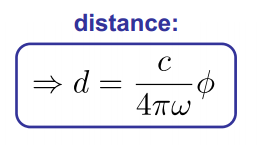
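A sketch of the phase-to-distance step, assuming the standard relation φ = 2π·f·(2d/c): the light covers the distance twice, so d = c·φ / (4π·f). The 20 MHz modulation frequency in the usage note is a hypothetical example value. Because the phase wraps around at 2π, distances repeat every c / (2f).

```python
import math

C = 299_792_458.0  # speed of light, m/s


def distance_from_phase(phase_rad, f_mod):
    """Distance for a phase shift in [0, 2*pi); the round trip makes phi = 4*pi*f*d/c."""
    return C * phase_rad / (4 * math.pi * f_mod)


def ambiguity_range(f_mod):
    """Largest distance measurable before the phase wraps around (phi = 2*pi)."""
    return C / (2 * f_mod)


if __name__ == "__main__":
    f = 20e6  # hypothetical modulation frequency, Hz
    print(f"{distance_from_phase(math.pi / 2, f):.4f} m")  # quarter-period phase shift
    print(f"{ambiguity_range(f):.4f} m")                   # distances repeat beyond this
```

At 20 MHz the unambiguous range is about 7.5 m; a lower modulation frequency extends it at the cost of depth resolution for the same phase accuracy.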

These cameras are a bit simpler than those built on the first technology, but still complex and expensive. This company makes them, and they cost on the order of $4,000. But they look cute and futuristic:

The third technology is «Range gated imagers». Essentially, a camera with a shutter. The idea here is ridiculously simple and requires neither high-precision receivers nor complex correlation. A shutter is placed in front of the matrix. Suppose it is ideal and acts instantly. At time 0 the scene is illuminated. The shutter closes at time t. Then objects farther away than c·t/2, where c is the speed of light, will not be visible: the light simply has no time to reach them and come back. A point located right next to the camera is lit for the whole exposure time t and gets brightness I. Hence every point in the exposure has a brightness between 0 and I, and this brightness represents the distance to the point. The brighter, the closer.
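The ideal model just described can be written down directly. This is a minimal sketch under the same assumptions as the text: the light is switched on at time 0 and stays on, the shutter closes instantly at t, and falloff, reflectivity and shutter dynamics are ignored. Light from a point at distance d starts arriving at 2d/c, so that point is exposed for t − 2d/c. The function names and the 100 ns gate are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def gated_brightness(distance_m, gate_s, full_brightness=1.0):
    """Ideal model: light from distance d arrives from 2d/c until the shutter closes at gate_s."""
    exposure = gate_s - 2.0 * distance_m / C
    return full_brightness * max(0.0, exposure) / gate_s


def distance_from_brightness(brightness, gate_s, full_brightness=1.0):
    """Invert the ideal model: brighter pixels are closer.
    Zero brightness maps to c*gate_s/2, i.e. 'at or beyond the gate range'."""
    return C * gate_s * (1.0 - brightness / full_brightness) / 2.0


if __name__ == "__main__":
    gate = 100e-9  # hypothetical 100 ns gate, so the maximum range is about 15 m
    for d in (0.0, 5.0, 10.0, 20.0):
        b = gated_brightness(d, gate)
        print(f"d = {d:5.1f} m -> brightness {b:.3f}")
```

In this idealized form brightness falls linearly with distance; the complications listed next are what break that linearity in a real device.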

All that remains is to account for a couple of small things: the time the shutter takes to close and how the matrix behaves meanwhile, the non-ideal light source (for a point light source the brightness-versus-range dependence is not linear), and the different reflectivity of materials. This is a very large and complex task, and the device makers have solved it.

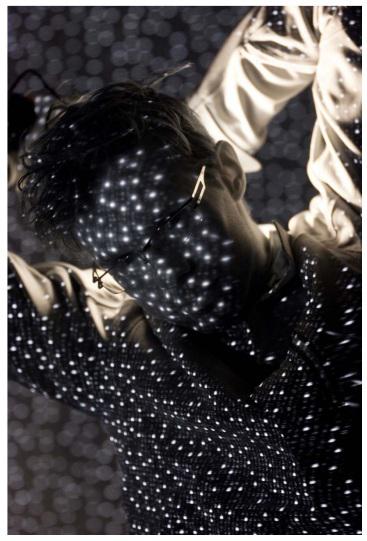

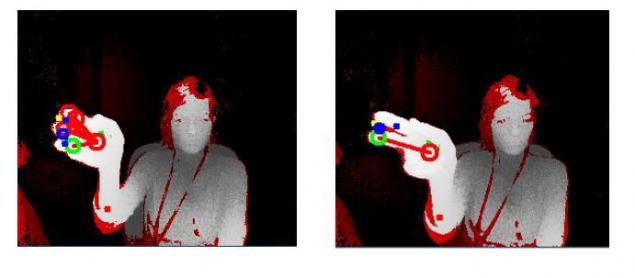

Such cameras are the least accurate, but also the simplest and cheapest: all the complexity lies in their algorithms. Want to see what such a camera looks like? Here it is:

Yes, the second Kinect uses exactly this kind of camera. Just don't confuse it with the first Kinect (there was once a good and detailed article on Habr in which the two were mixed up). The first Kinect uses structured illumination, an older, less reliable and slower technology:

It uses a conventional infrared camera that looks at a projected pattern. The distortions of the pattern determine the range (a comparison of the methods can be found here).
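For contrast with time of flight, structured light recovers depth by triangulation: the projector and the camera are a known distance apart, and the shift (disparity) of a pattern element in the image shrinks as the surface moves away. The sketch below uses the generic pinhole relation Z = f·B/d. It is not the first Kinect's exact algorithm, and the focal length and baseline in the usage note are hypothetical example values.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Generic pinhole triangulation: Z = f * B / d. Larger pattern shifts mean closer surfaces."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical setup: 580 px focal length, 7.5 cm projector-camera baseline.
    for d in (58.0, 29.0, 14.5):
        print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(d, 580.0, 0.075):.2f} m")
```

Because depth is inversely proportional to disparity, a one-pixel error costs far more depth at long range than up close, which is one reason the methods behave so differently.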

But Kinect is not the only player on the market. For example, Intel sells a $150 camera that produces a 3D map of the image. It targets the near range, and Intel provides an SDK for analyzing gestures in the frame. Here is another option from SoftKinetic (they also have an SDK, and they are somehow connected with Texas Instruments).

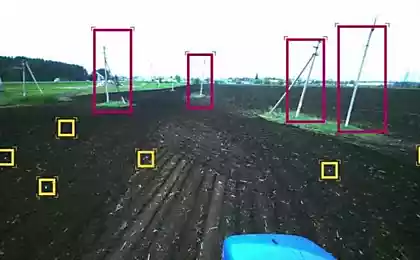

I myself have not yet had a chance to work with any of these cameras, which is a pity and a shame. But I think and hope that within five years they will enter everyday life and my turn will come. As far as I know, they are actively used for robot orientation and are being introduced into face recognition systems. The range of tasks and applications is very wide.

Source: habrahabr.ru/post/224605/
