
Language of the Future: Can We Communicate Without Words by 2030?

Introduction. Speech is one of the most vivid and complex tools of interaction in human society. But rapid advances in neural interfaces and automatic gesture translation, together with the experience of deaf communities, raise a question: are we approaching the point where spoken language will no longer play a decisive role in communication? In this article, we look at what silent communication technologies might look like by 2030, review the opinions of linguists and artificial intelligence developers, and consider the social and cultural consequences such a transformation could have.

For many people, the idea of communication without words still sounds like the plot of a science fiction novel. But if you follow developments in bioengineering and digital technology, you can already see a certain “arc”: from the invention of writing and the telephone to the rapid growth of instant messengers and video calls. The next step may be a deeper integration of devices with the human body and perhaps a complete transformation of what we used to call “language.” Let's try to look into that future!

Neural interfaces: from science fiction to real prototypes

Reading thoughts and transmitting information directly between people's brains has long been the domain of science fiction writers. Today, companies such as Neuralink, along with a number of research universities around the world, are actively researching invasive and non-invasive neural interfaces.

What does that mean in practice? It means the ability to connect to the human nervous system and read the electrical activity of the brain. As the technology improves, signal reading becomes more accurate, and machine learning algorithms become able to “interpret” the recorded data as commands: from moving a cursor on a screen to composing full text messages.

According to Dr. Anna Voronina, a specialist in cognitive neuroscience, “As early as 2030, we can expect the emergence of portable neural interfaces that read the simplest brain signals to transfer thoughts rapidly into the digital environment. In the long run, this could become an alternative to speech for people with severe disabilities or simply speed up the exchange of information.”

Gestural languages and automatic translators

The experience of deaf communities shows that communication can happen without words. Sign languages are not only effective but also have a rich grammar, capable of conveying the finest shades of meaning. For many hearing people, this is “terra incognita,” a world where gestures and facial expressions form full-fledged linguistic systems.

Today, automatic sign dictionaries and gesture translators based on computer vision and artificial intelligence are appearing more and more often. A smartphone camera or a camera built into glasses can track the other person's hand movements and translate them into on-screen text or synthesized speech. Machine learning systems build databases of movements and facial expressions, creating a bridge between deaf and hearing people.

According to estimates by the World Federation of the Deaf, more than 70 million people worldwide use sign languages. If sign translation programs reach a new level of accuracy and convenience by 2030, another strong “wordless” channel will emerge, one understandable to a wide range of users.

Integration of Gesture in Mass Culture

In addition to sign language translators, many companies are experimenting with motion capture technology. It can recognize the complex patterns that make up human body language, including microgestures and facial expressions. Since different countries and cultures have their own gesture systems, a comprehensive translation platform could help overcome this “Babel of gestures,” opening new horizons for travel and international cooperation.


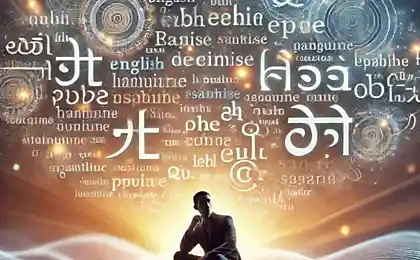

Opinions of linguists and AI developers

No discussion of “silent” communication is complete without considering its linguistic side. According to Professor Lev Perfilov, a specialist in comparative linguistics, “Language is not only a means of transmitting information but also an expression of cultural identity. If we shift all communication solely to neural signals, we risk losing the layer of meaning and cultural context that has been shaped over centuries.”

AI developers, on the other hand, point out that the more we use technological intermediaries (online translators, fast gesture recognition systems), the more language barriers are erased. Maria Golubeva, co-founder of an artificial intelligence startup, believes that by 2030 “we will have super-apps that translate not only words but also intentions in real time, interpreting tone of voice, facial expressions, and gestures.” At that point, arguing about the importance of words becomes pointless, because communication through virtual assistants will increasingly take their place.

Can speech disappear?

On this point, linguists and developers agree: a complete replacement of speech is unlikely in the coming decades. Speech carries a huge number of associative meanings, historical heritage, and emotional nuances that are not easily reproduced by gestures or neural signals. However, by 2030 a new format may emerge, “hybrid communication,” in which people combine familiar forms of speech with mental commands or gestures read by optical sensors.

Possible development scenarios by 2030

To get a better idea of what communication channels might look like closer to the middle of the century, consider a few scenarios:

- The “Neuronet” scenario. Most megacities are equipped with infrastructure for connecting to public neural interfaces. People can send short messages, bypassing speech and physical contact. Students learn the “basic neurofont,” a system for encoding thoughts in digital form.

- The “Lingua Reform” scenario. Gesture systems grow in popularity, supported by gadgets that automatically translate gestures into words. The social inclusion of deaf communities increases, and sign languages become one of the trends of pop culture.

- The “Hybrid Speech” scenario. Some information, including its emotional components, is conveyed by voice, while neural signals carry complex commands and data. For example, at a business meeting, project details might be discussed using a mixture of voice and telepathic “hints” in the form of emojis displayed in participants' special lenses.

Challenges and ethics

However, such radical changes in communication could bring problems. The first is privacy: if a person can send messages mentally, how can they be protected against the leakage of private thoughts or the “hacking” of a neural interface? Second, some cultural traditions and languages may be at risk if younger generations rely more and more on digital tools.

Mental health should not be forgotten either. According to some studies, people who are constantly online experience information overload and a decline in their ability to communicate face to face. Add to this the constant feeling that our thoughts may be intercepted or misinterpreted, and stress levels could rise markedly.

Conclusion: What awaits us by 2030?

According to most experts, speech will not disappear but will instead be transformed and supplemented by other types of communication: neural signals, gesture translation, and hybrid methods. This could give humanity unprecedented freedom of communication, make communication more accessible to people with hearing or speech disabilities, and erase language barriers.

On the other hand, overly rapid adoption of such technologies could lead to social inequality or cultural losses. Balancing technical innovation with respect for linguistic heritage will remain a key challenge for linguists and AI developers in the coming decades.

In any case, the future looks incredibly exciting: it is easy to imagine that by 2030 there will be “silent conversation clubs” where people exchange thoughts through neural interfaces, or mass-market apps that instantly translate gestures across dozens of languages. And perhaps what seems like bold futurology today will, in a few years, become as familiar as the smartphone in every pocket.

Glossary

Neural interface

A technology that can read or transmit brain signals in order to control devices or communicate without relying on traditional speech or the motor organs.

Sign language

A complete linguistic system in which information is transmitted through hand movements, facial expressions and body position. It is used in deaf communities around the world.

Motion Capture (MoCap)

A technology for recording human movements for further analysis or transfer to a digital environment (e.g., in filmmaking or gesture recognition).

Machine learning

A branch of artificial intelligence in which computer systems “learn” from large data sets to recognize objects, translate texts, or analyze language.

Hybrid Communication

An approach in which a person combines traditional speech, gestures and signals generated by neural interfaces to transmit information.
