
The Great Fallacies in Science: From Alchemy to Modern Myths

Introduction. Science is a dynamic, continuous search for truth, in which assumptions are first made and then tested by experiment and logical analysis. At times, even the most authoritative theories and ideas, whether ancient views of the universe or modern medical hypotheses, turn out to be partially wrong or undergo serious revision. This process drives science forward, discarding outdated concepts and forming new, more accurate models of reality. In this article, we will examine some striking historical and contemporary misconceptions that illustrate how scientific views evolve and why mistakes are a natural and even necessary part of the path to truth.

Alchemy: The Dream of the Philosopher's Stone

Alchemy is one of the most famous early forms of "science", and it arose long before the advent of systematic chemistry. Alchemists believed in a universal agent, the “philosopher’s stone”, that could turn ordinary metals into gold. The stone was also believed to confer immortality or at least significantly prolong life. For centuries, in laboratories in Europe, the Middle East and China, enthusiastic practitioners mixed substances, heated them in furnaces and invented ingenious distillation apparatus - all for a great goal that was never achieved.

However, alchemy made a huge contribution to the future science of chemistry. The accumulated practical knowledge about the properties of metals, acids and other reagents became the basis for more rigorous scientific disciplines. As scientific methodology developed, chemists began to rely strictly on experimental data and calculations. Thus the alchemical pursuit of an unattainable goal receded, and modern scientific methods replaced it.

Causes of error

- Lack of rigorous methodology: Alchemists relied more on mystical ideas and trial-and-error guesswork than on controlled, reproducible experiments.

- Limited knowledge of the structure of matter: There was no clear understanding of atoms and molecules, so the idea of "transmutation" seemed quite possible.

Geocentric Model of the World: The Earth as the Center of the Universe

For many centuries, it was believed that the Earth is the fixed center of the universe and that all celestial bodies (the Sun, Moon, planets and stars) revolve around it. This geocentric model, elaborated in detail by Claudius Ptolemy in his Almagest, remained the main cosmological doctrine in Europe for well over a thousand years. Ptolemy’s principles were woven into medieval theology and seemed indisputable.

Only in the Renaissance, thanks to the works of Nicolaus Copernicus, Giordano Bruno, Galileo Galilei and Johannes Kepler, did it become clear that the Sun is the central body around which the Earth and the other planets revolve. Copernicus presented a heliocentric model, and Galileo, with the help of a telescope, discovered the phases of Venus and the moons of Jupiter, which destroyed the idea that all bodies are “subordinate” to the Earth. Although the evidence accumulated gradually, the transition to the new picture of the world met resistance from church and tradition, which shows how slowly and painfully established misconceptions are overcome.

Key lessons from history

- Evidence is needed: Galileo and Kepler were able to support heliocentrism with observations of the motion of planets and satellites, revolutionizing astronomy.

- The success of a new theory takes time: Even strong evidence is not accepted immediately if it conflicts with religious or philosophical dogmas.


The theory of “spontaneous generation” of life

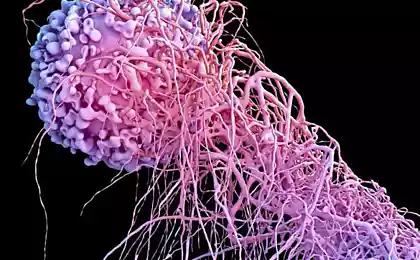

Until the seventeenth century, it was widely accepted that living organisms could arise spontaneously from inanimate matter. It was believed, for example, that fly larvae arise directly from rotting meat and that frogs “grow” in damp ground. This view, dating back to Aristotle, seemed “obvious” to many. But already in the seventeenth century, the scientist Francesco Redi carried out a series of experiments demonstrating that if a piece of meat is covered so that flies cannot reach it, no larvae appear on it.

The concept of spontaneous generation continued to be debated through the eighteenth century, until Louis Pasteur, in the nineteenth century, used his famous experiments with swan-neck flasks to show that microorganisms do not appear in a sterile environment without an external source. It was Pasteur’s research that finally dispelled belief in the “spontaneous emergence” of life, helping to lay the foundations of microbiology and establishing the role of microorganisms in fermentation, disease and other biological processes.

Why the theory of spontaneous generation held for a long time

- Limited observation capabilities: Without microscopes and modern techniques, it was impossible to track the appearance of microorganisms.

- Everyday experience was deceptive: Rotting food really does seem to be the “source” of larvae or mold, unless you know about the life cycles of insects and microorganisms.

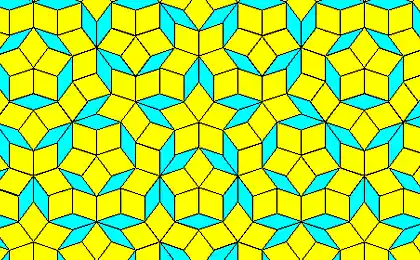

The Ether Theory of Light and Its Refutation

In the nineteenth and early twentieth centuries, physicists held that electromagnetic waves, including light, must propagate in a special medium - the "ether". The concept of ether dates back to ancient philosophers, but in classical physics it was reinterpreted as the material carrier that light waves were thought to need in order to cross empty space (an analogue of water for waves on its surface). However, the famous Michelson-Morley experiment (1887) found no sign of the expected “ether wind”: the measured speed of light was the same in all directions, regardless of the Earth's motion. This result caused confusion in the scientific community.

Finally, the ether was "defeated" by Albert Einstein's special theory of relativity (1905), which postulates that the speed of light in a vacuum is the same for all inertial observers and therefore needs no stationary medium for its propagation. These new views revolutionized the concept of space and time and became one of the foundations of modern physics.

Lesson for science

- The need to revise even established concepts: Despite the authority of the ether concept, the Michelson-Morley experiment forced scientists to look for alternative explanations.

- The integration of theories: Maxwell’s ideas about the nature of electromagnetic waves merged with Einstein’s principles of relativity, eventually underpinning technologies such as GPS and satellite communications.

Phrenology: "Reading Character" by Skull Shape

At the beginning of the nineteenth century, phrenology became popular. It was based on the assumption that mental abilities and character traits can be determined from “bumps” on the head. Phrenologists believed that the brain consists of separate “organs” responsible for courage, musicality, kindness and other qualities, and that the shape of the skull reflects the development or underdevelopment of these “regions”.

Although phrenology attracted interest in Europe and the United States, and was even invoked in court cases, over time it was disproved by physiological and anatomical research. It turned out that the boundaries of brain regions do not correspond to those proposed by phrenologists, and that the shape of the skull does not indicate a person's intellectual and personal qualities. Modern neuroscience offers a much more complex and subtle account of how different brain regions interact with mental processes.

Why the theory stuck

- Pseudoscientific "simplicity": People like it when something complex is explained easily: measure the shape of the skull, and everything about you is known!

- Weak development of neuroscience at the time: There were no accurate methods of studying the living brain (such as MRI or CT), so guesswork filled the gap.

Contemporary myths and pseudoscience

Although modern research has far more accurate tools (electron microscopes, colliders, supercomputers for calculations), this does not mean that science is now free of errors or misconceptions. Myths and unscientific theories continue to emerge. In particular, some alternative treatment approaches (e.g., homeopathy) remain controversial because they lack a sufficient experimental basis.

Even in high-tech fields, “fashionable” ideas emerge without convincing evidence. It is important to understand that the scientific community is not a monolithic club of “recognized truths”, but a platform where discussions are constantly conducted, new research is published, old conclusions are refuted and new hypotheses are formulated. Mistakes keep science alive, stimulating search and validation.

Conclusion

The history of science is full of cases in which what was once considered an indisputable truth turned out to be only a stage on the way to a deeper understanding of reality. Alchemy gave way to chemistry, geocentrism to heliocentrism, spontaneous generation to microbiology, ether to the theory of relativity, and phrenology to modern methods of neuroimaging. As tools and methodologies improve, science itself moves to new levels of accuracy and predictive power.

It is important to realize that errors, false hypotheses, or outdated views are not something “shameful” for science. On the contrary, they are an organic part of the learning process. As Karl Popper put it, “science begins with problems,” and it takes time and experience to answer them. So myths and misconceptions, whether in history or modernity, only show how capable science is of self-correction and renewal. That is what makes it the most effective system of knowledge we have.

In time, we will surely see some current concepts give way to new, more accurate theories. This does not point to the “error” of science as such but, on the contrary, emphasizes its flexibility, openness to criticism and constant striving for truth. And if we learn to treat mistakes not as “failures” but as steps in learning, we can more easily accept change and participate more actively in scientific progress.
