See the unseen

A couple of years ago two articles slipped by on Habr that mentioned an interesting algorithm. The articles, however, were unreadable, written in the style of "news" (1, 2). Still, a link to the site was there, so the details could be worked out at the source (the algorithm comes from MIT). And there was magic there: an absolutely magical algorithm that lets you see the invisible. Both Habr authors failed to notice this and focused on the fact that the algorithm lets you see the pulse, missing the most important thing.

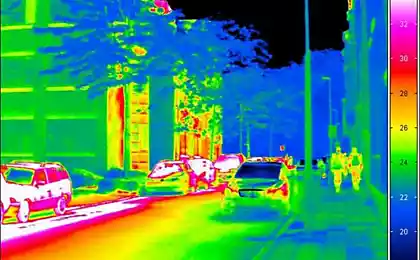

The algorithm makes it possible to amplify motion that is invisible to the eye and to show things no one has ever seen live. The video just above is the presentation of the second part of the algorithm from the MIT site. The microsaccades shown from the 29th second on were previously observed only as reflections in mirrors mounted on the pupils. Here they are visible to the naked eye.

A couple of weeks ago I came across those articles again. I immediately got curious: what had people built with this in the two years since? But... nothing. That determined how I would spend the next fun week and a half. I wanted to write the same algorithm and find out what can be done with it and why it still is not in every smartphone, at least for measuring the pulse.

The article will contain a lot of math, videos, pictures, a bit of code, and answers to these questions.

Let's start with the math (I will not stick to any single paper and will mix parts of different papers for a smoother narrative). The research group has two main papers on the algorithmic side:

1) Eulerian Video Magnification for Revealing Subtle Changes in the World

2) Phase-Based Video Motion Processing

The first paper implements an amplitude approach, cruder and faster. I took it as the basis. The second paper uses the phase of the signal in addition to its amplitude, which gives a much more realistic and sharper picture. The video above accompanies this second paper. The downside is a more complex algorithm and heavier processing, which is clearly out of reach for real time without a video card.

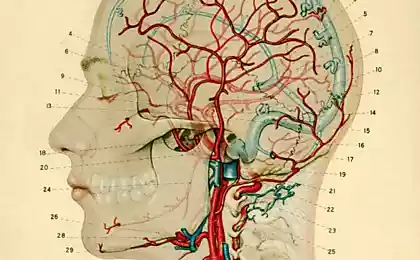

A little about prettiness

Of course, MIT loves beautiful results, and so they try to make them look as beautiful as possible. As a result, the viewer gets the impression that the special case is the general rule. Unfortunately, it is not. A swelling vein can be seen only with properly set lighting (the shadow has to trace the relief of the skin). A change in face color requires a good camera without auto-correction, the right light, and a subject with evident heart problems (in the video these are a heavy-set man and a premature baby). In the example with the Black man whose heart is fine, for instance, what you see is not fluctuations in skin brightness but amplified changes of shading caused by micro-movements (the shadow falls neatly from top to bottom).

Quantitative characteristics

Still, breathing and pulse are clearly visible in the videos. Let's try to extract them. The simplest thing that comes to mind is the summed difference between adjacent frames. Since almost the whole body moves during breathing, this characteristic should show it.
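The summed difference between adjacent frames can be sketched as follows. This is a minimal illustration, not the code from the project: frames are assumed to be plain grayscale `float[,]` arrays, the difference is taken as an absolute value (the text does not say whether it is signed), and the names `FrameMotion`, `SummedDifference` and `MotionCurve` are hypothetical.

```csharp
using System;

public static class FrameMotion
{
    // Sum of absolute per-pixel differences between two grayscale frames.
    // Assumption: both frames are float[,] arrays of the same size.
    public static double SummedDifference(float[,] previous, float[,] current)
    {
        if (previous.GetLength(0) != current.GetLength(0) ||
            previous.GetLength(1) != current.GetLength(1))
            throw new ArgumentException("Frames must have the same size.");

        double sum = 0.0;
        for (int y = 0; y < current.GetLength(0); y++)
            for (int x = 0; x < current.GetLength(1); x++)
                sum += Math.Abs(current[y, x] - previous[y, x]);
        return sum;
    }

    // One value per pair of adjacent frames. Plotted over time,
    // this is the curve in which breathing would be looked for.
    public static double[] MotionCurve(float[][,] frames)
    {
        if (frames.Length < 2) return new double[0];
        var curve = new double[frames.Length - 1];
        for (int i = 1; i < frames.Length; i++)
            curve[i - 1] = SummedDifference(frames[i - 1], frames[i]);
        return curve;
    }
}
```

Calling `FrameMotion.MotionCurve(frames)` on a buffer of captured frames gives one number per frame pair, so a slow periodic rise and fall in that sequence is what breathing would look like under these assumptions.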

So, the result

As a result, I formed a fairly clear picture of where the algorithm's boundaries lie, and it became clear what its limitations are:

Nothing new under the sun

When you read the group's papers and watch the videos, a suspicion creeps in: I have seen all this somewhere. You look and think, and think. And then they show a video in which the same algorithm is used to stabilize the motion of the Moon by removing atmospheric noise. And then it hits you: "This is a noise-suppression algorithm, only with positive feedback!" Instead of suppressing spurious motion, it amplifies it. If we take α < 1, the feedback becomes negative again and the motion goes away!

And finally: programmer, be vigilant!

Judging by the notes on the site, the algorithm is patented. Use is permitted for educational purposes. Of course, algorithms cannot be patented in Russia. But be careful if you build something based on it: outside Russia it may be illegal.

Footer

Shall we begin?

What is motion amplification?

Motion amplification means predicting which way the signal is about to shift and pushing it further in that direction.

Suppose we have a one-dimensional receiver on which we see the signal I(x, t) = f(x). In the figure it is drawn in black (at some moment t). At the next moment the signal is I(x, t + 1) = f(x + Δ) (blue). Amplifying this signal means obtaining the signal I'(x, t + 1) = f(x + (1 + α)Δ), where α is the gain. Expanding in a Taylor series to first order, the shifted signal can be expressed as:

I(x, t + 1) ≈ f(x) + Δ ∂f(x)/∂x

Let:

B = Δ ∂f(x)/∂x

What is B? Roughly speaking, it is I(x, t + 1) − I(x, t). Let's draw it:

Of course, this is inexact, but it will do as a rough approximation (the blue graph shows the shape of such an "approximated" signal). If we multiply B by (1 + α), we get the "amplified" signal (red graph):

I'(x, t + 1) ≈ f(x) + (1 + α)B
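The approximation can be checked numerically on a one-dimensional signal. The sketch below takes B as the frame difference I(x, t + 1) − I(x, t), as described above, and adds (1 + α)B to the previous frame. The test signal f = sin and the names `LinearMagnification`, `Amplify` and `SampleShiftedSine` are assumptions made for illustration only.

```csharp
using System;

public static class LinearMagnification
{
    // First-order amplification: I'(x, t+1) ≈ I(x, t) + (1 + alpha) * B,
    // with B approximated by the frame difference I(x, t+1) - I(x, t).
    public static double[] Amplify(double[] previous, double[] current, double alpha)
    {
        if (previous.Length != current.Length)
            throw new ArgumentException("Signals must have the same length.");

        var result = new double[current.Length];
        for (int i = 0; i < current.Length; i++)
        {
            double b = current[i] - previous[i];
            result[i] = previous[i] + (1.0 + alpha) * b;
        }
        return result;
    }

    // Samples f(x + shift) on a uniform grid for f = sin
    // (a hypothetical test signal, not taken from the article).
    public static double[] SampleShiftedSine(int count, double step, double shift)
    {
        var samples = new double[count];
        for (int i = 0; i < count; i++)
            samples[i] = Math.Sin(i * step + shift);
        return samples;
    }
}
```

Comparing `Amplify(previous, current, alpha)` with `SampleShiftedSine(count, step, (1 + alpha) * delta)` shows how close the linear prediction is to the truly shifted signal. The error grows with (1 + α)Δ, because everything beyond the first-order Taylor term is dropped.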

A real frame may contain several motions, each at its own speed. The method above is a linear prediction, and without refinement it breaks down. But there is a classical approach to this problem, which the papers use: decompose the motion by its frequency characteristics (both spatial and temporal).

At the first stage the image is decomposed by spatial frequencies. This stage also yields the differential ∂f(x)/∂x. The first paper does not say how they implement it. In the second paper, which uses the phase approach, the amplitude and phase are computed with Gabor filters of different orders:

I did roughly the same, taking the filter:

and normalizing its value to

Here l is the distance of the pixel from the center of the filter. Of course, I cheated a little by taking the filter for only one window value σ. This sped up the computation considerably. The picture comes out slightly more blurred, but I decided not to chase high accuracy.

Back to the formulas. Suppose we want to amplify a signal that gives a characteristic response at frequency ω in the temporal sequence of frames. We have already chosen a spatial filter with window σ, which gives us an approximate differential at each point. As the formulas show, what remains is the temporal function that gives the response to our motion, and the gain. We multiply by a sine of the frequency we want to amplify (this will be the function giving the temporal response). We obtain:

Of course, this is much simpler than in the original paper, but there are somewhat fewer problems with speed.
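Multiplying by a sine of the target frequency can be illustrated for a single pixel. The sketch below averages b[t]·sin(ωt) over a sequence of frames. It is an assumption about the general shape of such a temporal response, not the author's exact formula; the names `TemporalResponse` and `SineResponse` are hypothetical, and ω is expressed in radians per frame.

```csharp
using System;

public static class TemporalResponse
{
    // Averages b[t] * sin(omega * t) over the frame sequence for one pixel.
    // b[t] stands for the per-frame value of B at that pixel;
    // omega is the frequency to amplify, in radians per frame.
    public static double SineResponse(double[] b, double omega)
    {
        if (b.Length == 0) return 0.0;

        double sum = 0.0;
        for (int t = 0; t < b.Length; t++)
            sum += b[t] * Math.Sin(omega * t);
        return sum / b.Length;
    }
}
```

When the window covers a whole number of periods, a component oscillating at ω in phase with the sine gives a response of half its amplitude, while components at other harmonics of the window average out to zero. A component at ω that is out of phase with the sine is attenuated as well, which is one limitation of using a single sine.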

Code and the result

The source code for the first paper is publicly available, written in Matlab. It would seem, why reinvent the wheel and write your own? But there were a number of reasons, largely tied to Matlab:

- If you later decide to build something sensible and practical out of this, Matlab code is much harder to use than C# + OpenCV, which can be ported to C++ in a couple of hours.

- The original code was designed for saved video with a constant bitrate. To work with a camera connected to the computer, which has a variable bitrate, the logic has to change.

- The original code implements the simplest of their algorithms, without any extras. Implementing a slightly more sophisticated version with extras is already half the work. Moreover, although the algorithm is the original one, its input parameters are not the ones from the papers.

- The original code periodically froze the computer solid (without even a blue screen). Maybe that was just my machine, but it was uncomfortable.

- The original code has only a console mode. Making everything visual in Matlab, which I know much worse than VS, would have taken far longer than rewriting it all.

I posted the sources on github.com and commented them in detail. The program captures video from the camera and analyzes it in real time. The optimization turned out somewhat crude, and it is still possible to make the program lag by widening the parameters. What was cut in the name of optimization:

- A reduced-size frame is used. This speeds things up greatly. I did not expose the frame size on the form, but if you open the code, it is the line `_capture.QueryFrame().Convert<Bgr, float>().PyrDown().PyrDown();`.
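To see why two `PyrDown()` calls help so much, note that each call halves both dimensions, so the kernel then runs over roughly one sixteenth of the original pixels. The sketch below is a simplified stand-in that averages 2x2 blocks. OpenCV's pyramid downsampling blurs with a Gaussian kernel before dropping rows and columns, so this is not the same filter, and `FrameScaling.HalveSize` is a hypothetical name.

```csharp
using System;

public static class FrameScaling
{
    // Halves each dimension by averaging 2x2 pixel blocks.
    // Simplified stand-in for pyramid downsampling: no Gaussian blur is applied,
    // and a trailing odd row or column is dropped.
    public static float[,] HalveSize(float[,] frame)
    {
        int height = frame.GetLength(0) / 2;
        int width = frame.GetLength(1) / 2;
        var result = new float[height, width];

        for (int y = 0; y < height; y++)
            for (int x = 0; x < width; x++)
                result[y, x] = (frame[2 * y, 2 * x] + frame[2 * y, 2 * x + 1] +
                                frame[2 * y + 1, 2 * x] + frame[2 * y + 1, 2 * x + 1]) / 4f;
        return result;
    }
}
```

Applying `HalveSize` twice to a frame mirrors the double reduction in the line quoted above, at the cost of a blurrier picture.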

As a result, the logic of the program comes out as follows:

It is outrageously simple. For example, the amplified increment is added to the frame roughly like this:

```csharp
for (int x = 0; x < Ic[ccp].I.Width; x++)
    for (int y = 0; y < Ic[ccp].I.Height; y++)
    {
        // Scale the accumulated value by the gain and divide by the counter.
        FF2.Data[y, x, 0] = Alpha * FF2.Data[y, x, 0] / counter;
        // Add it to the displayed image, clamping the result to 0..255.
        ImToDisp.Data[y, x, 0] = (byte)Math.Max(0, Math.Min(FF2.Data[y, x, 0] + ImToDisp.Data[y, x, 0], 255));
    }
```

(Yes, I know that with OpenCV this is not the best way.)

About 90% of the code is not the kernel but the scaffolding around it. Yet even the kernel alone already gives a good result. You can see the chest expand by a few tens of centimeters during breathing, a vein swell, and the head bob in time with the pulse.

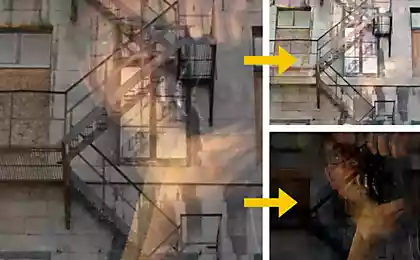

Here it is explained in detail why the head bobs with the pulse. In essence, it is recoil from the heart ejecting blood: