
Roko's Basilisk: the most terrifying thought experiment of all time

Roko's Basilisk is a godlike form of artificial intelligence, so dangerous that if you merely think about it, you will spend the rest of your days in horrific torture, like the videotape from "The Ring." Not even death offers an escape, because Roko's Basilisk will resurrect you and continue the torture. T&P presents a translation of an article about one of the strangest legends the Internet has ever spawned.

WARNING: after reading this article, you may be doomed to eternal suffering and torment.

Roko's Basilisk arose at the intersection of a philosophical thought experiment and an urban legend. It was first mentioned on the Less Wrong discussion board, which brings together people interested in optimizing thinking and living through the lens of mathematics and rationality. Its founders are prominent figures in techno-futurism, and their research institute has contributed to academic discussions of technology ethics and decision theory. Still, what you are about to read may seem strange and even insane. Despite this, some very influential and wealthy scientists believe in it.

One day a user named Roko posted the following thought experiment: what if, in the future, a malevolent artificial intelligence arises that wants to punish those who do not follow its orders? And what if it wants to punish those people who, in the past, did not contribute to its creation? Would Less Wrong readers then be obliged to help the evil AI come into existence, or would they be doomed to eternal torment?

Less Wrong founder Eliezer Yudkowsky reacted to Roko's post with anger. Here is what he said: "You have to be really clever to come up with such thoughts. But it saddens me that people who are clever enough to imagine such a thing are not clever enough to KEEP THEIR STUPID MOUTHS SHUT and not tell anyone about it, because to them it is more important to look smart by telling all their friends about it."


Yudkowsky stated that Roko was responsible for the nightmares of the Less Wrong users who had managed to read the thread, and he deleted it, which turned Roko's Basilisk into a legend. The thought experiment came to be seen as so dangerous that merely thinking about it threatened users' mental health.

What is Less Wrong about? Its members build their picture of humanity's future around the singularity. They believe that computing power will grow so great that computers will be able to give rise to artificial intelligence, and with it the ability to upload human consciousness to a hard drive. The term "singularity" originated in 1958 in a conversation between two mathematical geniuses, Stanislaw Ulam and John von Neumann, when von Neumann said that "the ever-accelerating progress of technology will bring us close to a singularity, beyond which technology can no longer be understood by people." Futurists and science fiction writers such as Vernor Vinge and Raymond Kurzweil popularized the term, believing that the singularity awaits us all very soon, within the next 50 years. While Kurzweil is preparing for the singularity, Yudkowsky pins high hopes on cryonics: "If you have not signed your children up for cryopreservation, you are lousy parents."

If you believe that the singularity is coming and that powerful AI will appear in the near future, a question arises: will it be friendly or evil? Yudkowsky's institute aims to steer the development of technology so that we end up with a friendly artificial intelligence. For him and many others, this question is paramount: the singularity will give us a machine equivalent to a god.

However, this does not explain why Roko's Basilisk looks so terrifying in the eyes of these people. To understand that, we need to look at Less Wrong's main "dogma": timeless decision theory (TDT). TDT is a guide to rational action based on game theory, Bayesian probability and decision theory, but one that takes into account parallel universes and quantum mechanics. TDT grew out of a classic thought experiment, Newcomb's paradox, in which a superintelligent alien offers you two boxes. Box A is transparent and contains a thousand dollars; box B is opaque. You have a choice: take both boxes, or take only box B. If you take both, you are guaranteed a thousand dollars. If you take only box B, you may get nothing. But the alien has one more trick up its sleeve: it has an all-knowing supercomputer which, a week ago, predicted whether you would take both boxes or only box B. If the computer predicted that you would take both boxes, the alien left box B empty. If the computer predicted that you would choose only box B, the alien put a million dollars in it.

So, what are you going to do? Remember that the supercomputer is omniscient.

This problem has baffled many theorists. The alien can no longer change the contents of the boxes. The safest course is to take both boxes and collect your thousand. But what if the computer really is omniscient? Then you should take box B to get the million. But what if it is wrong? And no matter what the computer predicted, is there really no way to change your fate? Then, damn it, you should take both boxes. But in that case...

This maddening paradox, which forces us to choose between free will and divine prediction, has no resolution, and people can only shrug and pick whichever option is most comfortable for them. TDT offers clear advice: take box B. Even if the alien decides to laugh at you and opens an empty box with the words "The computer predicted that you would take both boxes, ha ha!", you should still choose box B. The reasoning goes like this: to make its prediction, the computer would have had to simulate the entire universe, including you. So at this very moment, standing in front of the boxes, you may be nothing more than a computer simulation, but what you do will affect reality (or realities). So take box B and get the million.
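To make the pull of box B more concrete, here is a minimal sketch of the arithmetic, assuming the supercomputer is right with some probability `accuracy` (a hypothetical parameter: in the story it is omniscient, i.e. accuracy 1). This is a plain expected-value comparison, not an implementation of TDT itself, and it deliberately ignores the "dominance" argument for taking both boxes. The names `expected_payoff` and `break_even_accuracy` are illustrative only.

```python
# Sketch: expected payoff of each choice in Newcomb's problem.
# Assumption: the predictor is correct with probability `accuracy`
# (hypothetical parameter; the article's supercomputer is omniscient).
BOX_A = 1_000            # transparent box: always holds $1,000
BOX_B_FULL = 1_000_000   # opaque box: holds $1,000,000 only if "B only" was predicted


def expected_payoff(choice: str, accuracy: float) -> float:
    """Expected dollars for 'one-box' (box B only) or 'two-box' (boxes A and B)."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be a probability between 0 and 1")
    if choice == "one-box":
        # Predictor right -> B is full; predictor wrong -> B is empty.
        return accuracy * BOX_B_FULL
    if choice == "two-box":
        # Predictor right -> B is empty; predictor wrong -> B is full.
        # The $1,000 in box A is collected either way.
        return BOX_A + (1 - accuracy) * BOX_B_FULL
    raise ValueError(f"unknown choice: {choice!r}")


def break_even_accuracy() -> float:
    """Predictor accuracy at which both choices have equal expected payoff."""
    # Solve p * F = A + (1 - p) * F for p:  p = (A + F) / (2 * F)
    return (BOX_A + BOX_B_FULL) / (2 * BOX_B_FULL)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.99, 1.0):
        print(f"accuracy={p}: one-box={expected_payoff('one-box', p):,.0f}, "
              f"two-box={expected_payoff('two-box', p):,.0f}")
    print("break-even accuracy:", break_even_accuracy())
```

Under these assumptions, taking only box B has the higher expected payoff as soon as the predictor is right slightly more than half the time (just over 50%), which is why a near-omniscient computer makes "take box B" so compelling.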


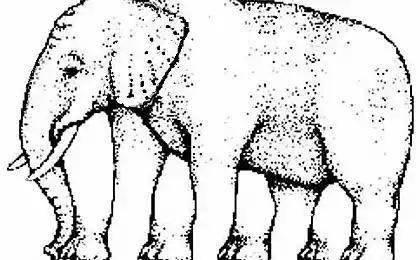

What does this have to do with Roko's Basilisk? Well, it also has a couple of boxes for you. Perhaps right now you are in a simulation created by the Basilisk. In that case, we get a modified version of Newcomb's paradox: Roko's Basilisk tells you that if you take only box B, you will be subjected to eternal torment. If you take both boxes, you will have to devote your life to creating the Basilisk. If the Basilisk really does come into existence (or, worse, already exists and is the god of this reality), it will see that you did not choose to help create it and will punish you.

You may be wondering why this question matters so much to Less Wrong, given how arbitrary the thought experiment is. It is far from certain that Roko's Basilisk will ever be created. Yet Yudkowsky deleted mentions of Roko's Basilisk not because he believes in its existence or imminent invention, but because he considers the very idea of the Basilisk a threat to humanity.

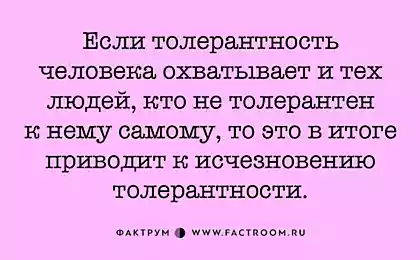

Thus, Roko's Basilisk is dangerous only to those who believe in it. In this respect, the Less Wrong members who take the idea of the Basilisk seriously possess a kind of forbidden knowledge, reminiscent of H. P. Lovecraft's horror stories about Cthulhu or the Necronomicon. But if you do not subscribe to all these theories and feel no temptation to obey an insidious machine from the future, Roko's Basilisk poses no threat to you.

I am more worried about people who believe they have risen above generally accepted moral norms. Like the friendly AI he anticipates, Yudkowsky is a Benthamite utilitarian: he believes that the greatest good for all of humanity is ethically justified even if a few people have to die or suffer along the way. Not everyone will face such a choice, but consider a different case: what if a journalist writes about a thought experiment that can destroy people's minds, thereby harming humanity and hindering progress toward artificial intelligence and the singularity? In that case, any good I have done in my life would have to be weighed against the harm I have brought into the world. And perhaps a future Yudkowsky, risen from cryogenic sleep and merged with the singularity, will decide to simulate me in the following situation: in the first box, I write this article; in the second, I do not. Please, Almighty Yudkowsky, don't hurt me.

Source: theoryandpractice.ru