Scientific results of the year: Physics

The American Physical Society has summed up the results of the year. Among this year's highlights: unknown particles from deep space, building blocks for quantum computers, nuclear fusion, ball lightning, and a little statistics and philosophy.

The possibility of detecting dark matter

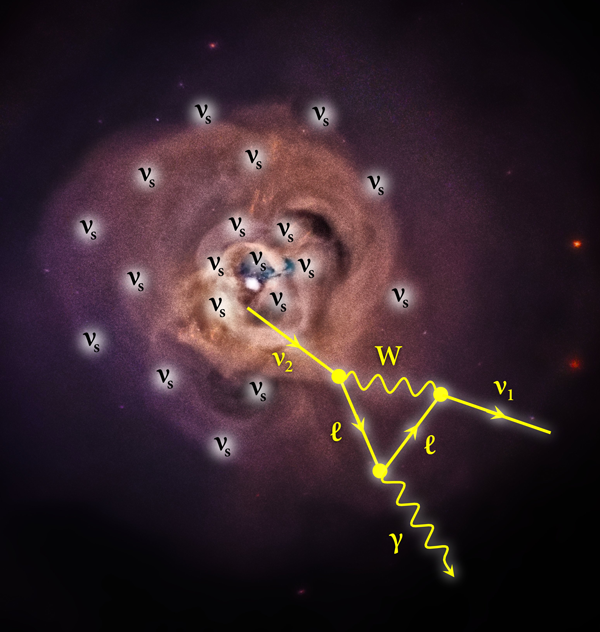

It is known that a significant part of our universe consists of dark matter: particles of unknown nature that take part only in the gravitational interaction. They neither emit nor absorb electromagnetic radiation, so they cannot be observed directly through a telescope.

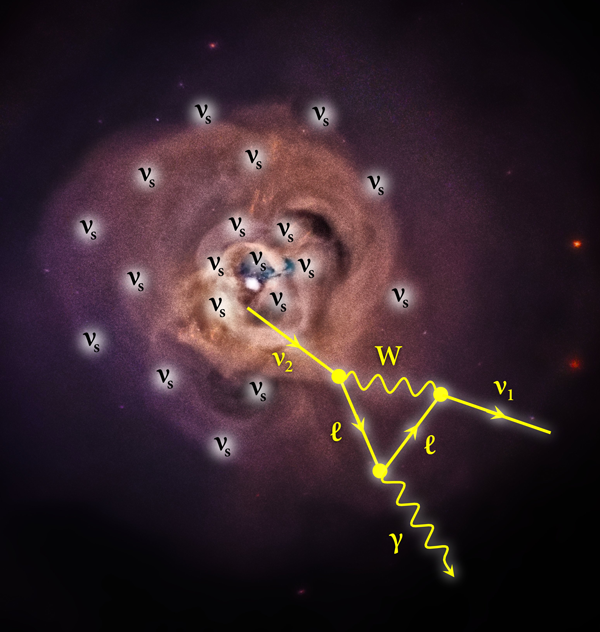

One of the candidates for dark matter is the sterile neutrino (Geektimes has already written about them). With a very low probability (10<sup>-21</sup> s<sup>-1</sup>), sterile neutrinos can decay into an ordinary neutrino and a gamma-ray photon. Based on assumptions about their mass, the photon energy should be on the order of several keV (the X-ray band).

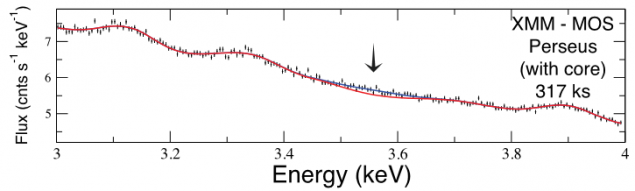

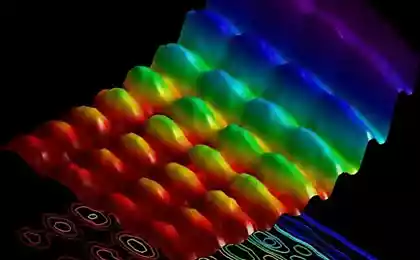

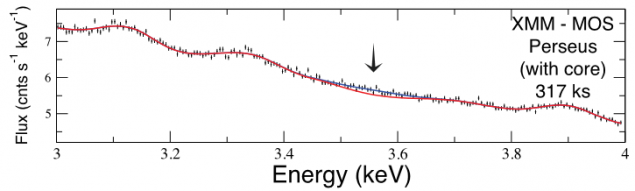

This year, two orbiting telescopes at once (NASA's Chandra and ESA's XMM-Newton) found an unusual peak at about 3.6 keV in the emission of two different galaxies. The figures show what it looks like.

The intensity of the line decreases with increasing distance from the galactic center. Of course, this does not amount to proof, but at least it does not contradict the hypothesis (the density of dark matter falls toward the periphery of galaxies), and no other reasonable explanation has been found. More accurate measurements are planned with the Japanese telescope ASTRO-H, which is due to be launched into orbit next year.
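As a rough check of the numbers quoted above, here is a minimal sketch. It assumes a two-body decay at rest into a photon and a nearly massless ordinary neutrino, so the photon carries about half of the sterile neutrino's rest energy. The function names and the rounded constants are illustrative choices, not taken from the original papers.

```python
# Back-of-the-envelope numbers for a decaying sterile neutrino.
# Assumption (not from the article): two-body decay at rest into a photon
# and a nearly massless neutrino, so E_photon is about m * c^2 / 2.

SECONDS_PER_YEAR = 3.156e7        # approximate length of a year in seconds
AGE_OF_UNIVERSE_YEARS = 1.38e10   # approximate age of the universe


def mass_from_line_kev(line_kev: float) -> float:
    """Rest energy (keV) implied by an observed photon line (keV)."""
    return 2.0 * line_kev


def mean_lifetime_years(decay_rate_per_s: float) -> float:
    """Mean lifetime in years for a given decay rate in 1/s."""
    return 1.0 / decay_rate_per_s / SECONDS_PER_YEAR


implied_mass_kev = mass_from_line_kev(3.6)   # line energy quoted in the text
lifetime_years = mean_lifetime_years(1e-21)  # decay rate quoted in the text

print(f"implied rest energy: {implied_mass_kev:.1f} keV")
print(f"mean lifetime: {lifetime_years:.2e} years")
print(f"lifetime / age of universe: {lifetime_years / AGE_OF_UNIVERSE_YEARS:.0f}")
```

Under these assumptions the lifetime comes out thousands of times longer than the age of the universe, which is why such particles could still be around and why the line would be so faint.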

The inflationary model

One of the subtle points in cosmology is the expansion of the universe in the first moments after the Big Bang. A leading theory is the inflationary model, which assumes an extremely rapid expansion of the universe at an early stage and gets around a number of problems that arise in other models. The idea is that if the inflationary model is correct, it must have left some imprint on the subsequent evolution of the universe and on what we observe now.

In particular, certain features should be visible in the CMB (the cosmic microwave background, whose origin dates back to the Big Bang), namely its vortex-like (curl) polarization. Loosely speaking, this is something like the circular polarization of light. Measuring it, though, takes something a little more complicated than the polarizer from a pair of 3D glasses.

A detection of this feature in the polarization was reported in 2014. The instrument that did it is called BICEP2. It is a refracting radio telescope (cool, huh?) that measures the cosmic microwave background at a frequency of 150 GHz using an array of intricate superconducting sensors. The plastic lenses are cooled with liquid helium to 4 K, and the array itself to 250 mK. The instrument is installed at the South Pole so that it can keep observing the same patch of sky.

Unfortunately, it later turned out that the result could be caused by cosmic dust. Apparently, the experiment will continue in order to accumulate statistics.

Progress in inertial confinement fusion

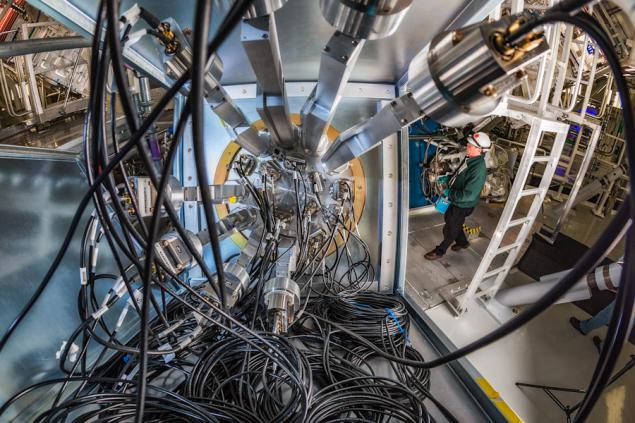

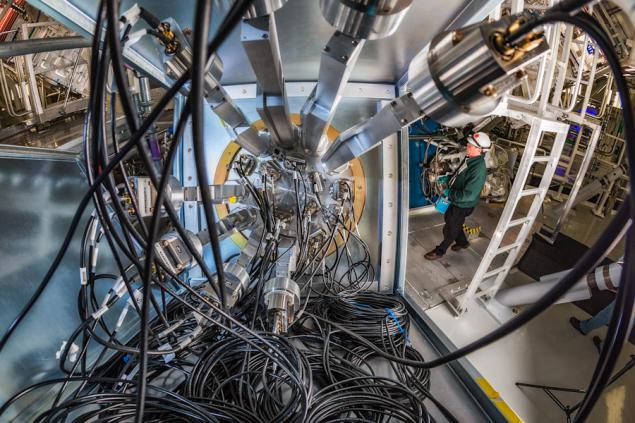

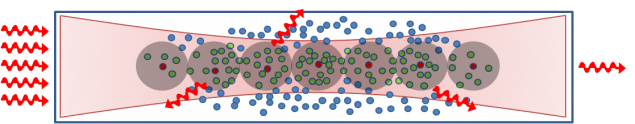

Take a small capsule of deuterium-tritium mixture and hit it sharply from all sides with high-power lasers. If the radiation is strong enough, it compresses the capsule to the point where thermonuclear fusion begins. The main problems are building extremely powerful pulsed lasers and choosing the pulse parameters that maximize the energy yield of the reaction.

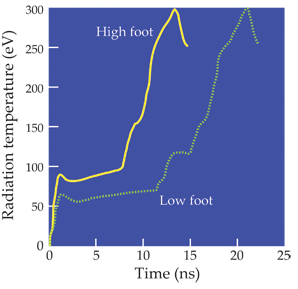

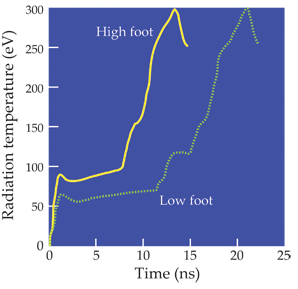

In particular, it is important to choose the optimal time profile of the laser pulse. Typically the laser power rises over time, and it does so in steps (to save energy).

Compression is slow during the first stage and much stronger at the maximum laser intensity (this profile is called "low-foot"). The problem is that at some point the target apparently starts to break up, and the reaction stops.

In 2013 (the paper came out in early 2014), researchers at the National Ignition Facility (Livermore, USA) proposed that a steeper pulse profile ("high-foot") would avoid premature breakup of the target. The experiment showed that the idea was correct. It also helped pin down inaccuracies in the theoretical models. A nice bonus is that positive feedback appeared in the new regime: alpha particles produced by the fusion heat the mixture further, which helps sustain the high temperatures needed for fusion.
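To see how much energy the alpha particles have available for this self-heating, here is a small sketch of the energy split in the reaction D + T → He-4 + n. It assumes the standard total release of about 17.6 MeV, reactants nearly at rest, and non-relativistic products with mass numbers 4 and 1. The function name is illustrative.

```python
# Energy split between the products of D + T -> He-4 + n.
# Assumptions: reactants nearly at rest, non-relativistic products,
# masses approximated by mass numbers (alpha = 4, neutron = 1).

Q_DT_MEV = 17.6       # approximate total energy released per reaction
MASS_ALPHA = 4.0      # mass number of the alpha particle
MASS_NEUTRON = 1.0    # mass number of the neutron


def split_energy(q_mev: float, m_a: float, m_b: float) -> tuple[float, float]:
    """Share q_mev between two products with equal and opposite momenta.

    With equal momentum magnitudes, kinetic energy is inversely
    proportional to mass, so the lighter product takes the larger share.
    """
    e_a = q_mev * m_b / (m_a + m_b)
    e_b = q_mev * m_a / (m_a + m_b)
    return e_a, e_b


e_alpha, e_neutron = split_energy(Q_DT_MEV, MASS_ALPHA, MASS_NEUTRON)
print(f"alpha particle: {e_alpha:.2f} MeV")
print(f"neutron:        {e_neutron:.2f} MeV")
print(f"alpha share:    {e_alpha / Q_DT_MEV:.0%}")
```

Only about a fifth of the released energy goes to the charged alpha particle, which can be stopped in the dense fuel; the neutron carries away the rest.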

As a result, the efficiency of the reaction rose by roughly 50% (depending on which parameter is considered). However, the ratio of energy obtained to energy expended is still below unity.

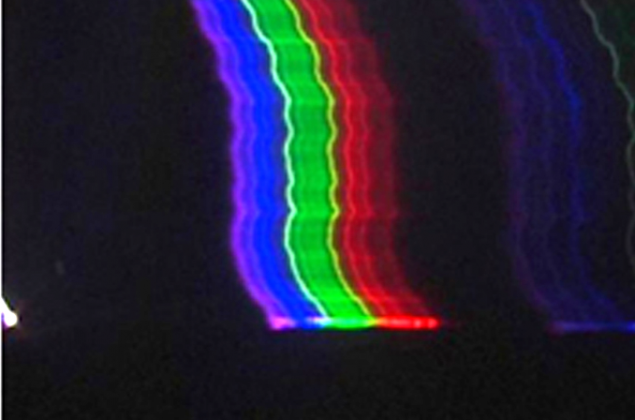

The first observation of the spectrum of ball lightning

Geektimes has already written about this one.

SiV centers in diamond

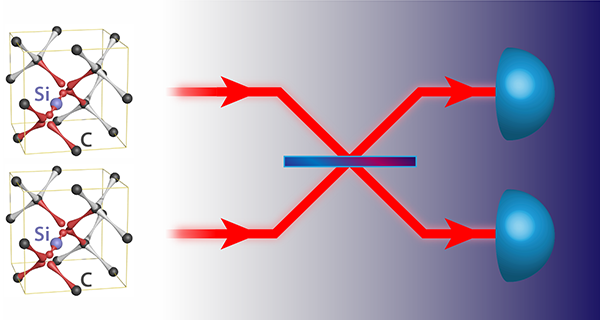

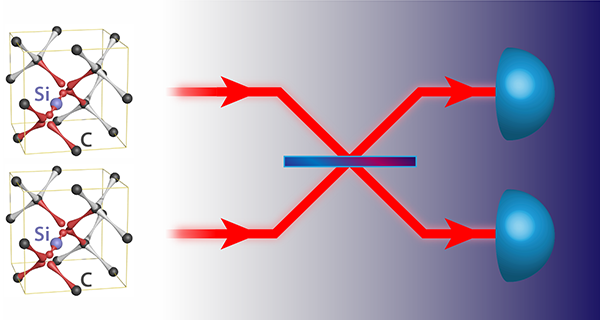

Sometimes lattice defects turn out to have very useful properties. For example, if one carbon atom in diamond is replaced by nitrogen and a neighboring atom is removed altogether, we get an NV center (nitrogen-vacancy). Its energy levels closely resemble those of an atom: they are narrow, they have reasonable lifetimes, and the transitions between them lie in the visible and infrared, which is convenient for manipulation.

Imagine we have a small piece of diamond that we know contains exactly one NV center. If we shine light on it, it emits exactly one photon. Now take two such pieces of diamond: they emit two identical photons, and that can already be used for experiments in quantum information.

Another nice thing is that a piece of diamond is durable. That would not work with a single atom: it can fly away, oxidize, or something else. This is why NV centers are much loved in quantum optics, even though they are not ideal.

The essence of the discovery is that another such system has been found. As the name implies, it is practically the same thing, only with silicon in place of nitrogen. As it turned out, this center outperforms NV centers in a number of characteristics, which means that SiV research promises to be fruitful over the next few years.

Single-photon transistors

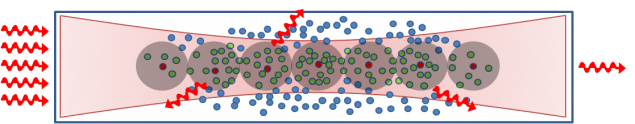

This year, single-photon sources were joined by the single-photon transistor, and not one but two of them. We are talking not about an electronic transistor but an optical one, that is, controlling a powerful beam of light with a weak one (ideally, a single photon).

Take a hydrogen atom. It has a line spectrum: many electron energy levels corresponding to different electron orbitals. The higher the level, the larger the radius of the electron's orbit. For level numbers around 50-70, the orbit radius reaches tenths of a micron. Such an atom is called a Rydberg atom, and it looks very interesting: a tiny nucleus with a huge electron "coat" around it. What if we slip another atom under the "coat"?
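The size of a Rydberg atom can be estimated with the Bohr model, where the orbit radius for level n is roughly n² times the Bohr radius. The sketch below is only that estimate for hydrogen. The experiments themselves need not have used hydrogen, and the function name is illustrative.

```python
# Bohr-model estimate of the orbit radius of a highly excited hydrogen atom.
# Assumption: r_n = n^2 * a_0, which ignores all corrections beyond the
# simple Bohr picture.

BOHR_RADIUS_M = 5.29177e-11  # Bohr radius a_0 in meters


def orbit_radius_micron(n: int) -> float:
    """Approximate orbit radius for principal quantum number n, in microns."""
    return n ** 2 * BOHR_RADIUS_M * 1e6


for level in (1, 50, 70):
    print(f"n = {level:2d}: r = {orbit_radius_micron(level):.4g} micron")
```

In this estimate, an atom at n = 50 is 2500 times larger than in its ground state, which is what makes room for neighboring atoms under the "coat".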

Look at the picture: laser light (pink) is applied to several Rydberg atoms (red nuclei and gray electron clouds). The green atoms have ended up under their neighbors' "coat" and are screened as if in a Faraday cage: they do not feel external fields and do not interact with light. If the Rydberg "coat" is removed, the green atoms can again interact with the outside world, for example by absorbing photons.

A single photon is enough to switch the "coat" on. With the "coat" on, the green atoms are screened and do not absorb light, so the light passes through them. With the "coat" off, the green atoms become active again and absorb light, so the light does not pass. The idea is fairly simple, but it took a long time to implement. This year two groups from Germany managed it. Practical devices are still a long way off, but for science this was a very welcome step.

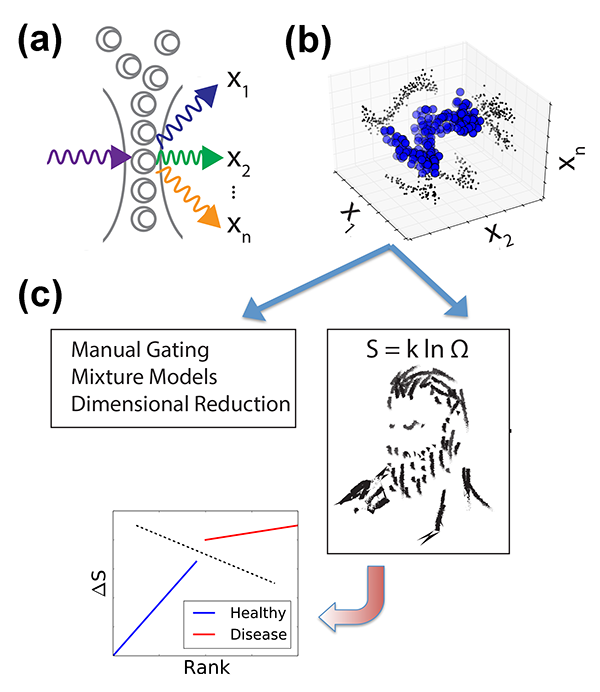

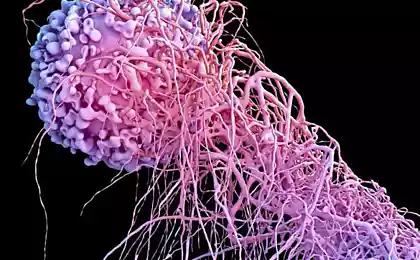

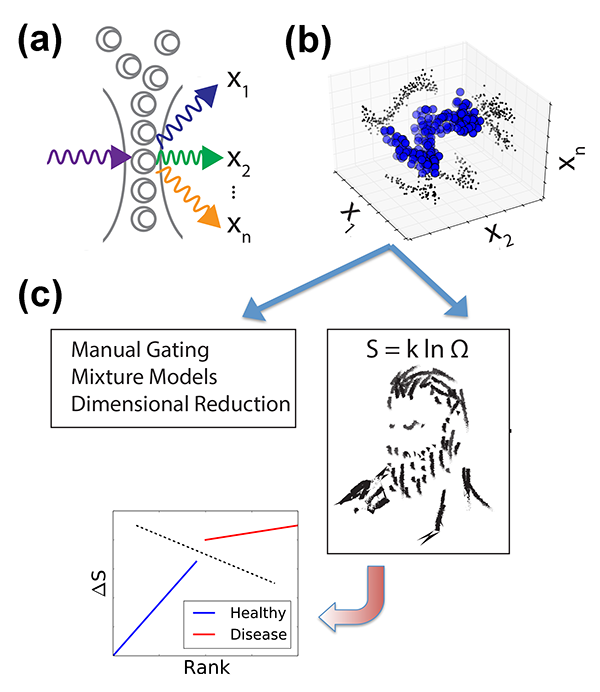

Entropy and diagnosis of leukemia

Statistical physics has a parameter called entropy, a measure of disorder. As a rule, all (pedantic note: closed) systems tend toward the states with the highest entropy. Suppose some system is described by two variables, A and B, and we can measure only A. We know nothing about B, but if the system is alive and evolving, B tends to the value at which the entropy of the system is maximal.

If we know how to calculate the entropy for known A and B, then by solving the inverse problem we can find the B that corresponds to maximum entropy. That will be the most probable value in a living system.
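The inverse problem can be illustrated with a toy model. This is a hypothetical sketch, not the method from the paper: it assumes a three-state system in which A and B are the probabilities of the first two states, uses the Shannon entropy, and finds B by a simple grid search.

```python
import math

# Toy maximum-entropy estimate. Hypothetical setup: a system with three
# states whose probabilities are (A, B, 1 - A - B). A is measured, B is
# unknown, and we pick the B that maximizes the Shannon entropy.


def shannon_entropy(probs) -> float:
    """Shannon entropy -sum(p * ln p), skipping zero probabilities."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def most_probable_b(a: float, steps: int = 10_000) -> float:
    """Grid-search B in (0, 1 - A) for the maximum-entropy value."""
    best_b, best_s = 0.0, -math.inf
    for i in range(1, steps):
        b = (1.0 - a) * i / steps
        s = shannon_entropy((a, b, 1.0 - a - b))
        if s > best_s:
            best_b, best_s = b, s
    return best_b


a_measured = 0.2
b_estimate = most_probable_b(a_measured)
print(f"A = {a_measured}, most probable B is about {b_estimate:.3f}")
```

In this toy case the answer can be checked by hand: with A fixed, the entropy is largest when the remaining probability is split evenly, so B = (1 - A) / 2. A real application would need a model of the entropy in terms of the measured quantities, which is the hard part.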

Now the same thing, but in medicine. The living system is a person, with not two parameters but many more. We can measure some of them (as I understand it, something like protein concentrations), work out how to calculate the entropy, and use the proposed scheme to recover some of the unknown parameters. These unknowns are very useful for diagnosing leukemia and possibly a number of other serious illnesses. In any case, the first results are reported to be quite encouraging.

The arrow of time

So, entropy increases with time. Or the other way around: time flows in the direction in which entropy (disorder) grows. This is the definition of the thermodynamic arrow of time. There are two more arrows of time: the cosmological one (the direction in which the universe expands) and the psychological one (how we perceive time). One of the fundamental physical (and philosophical) questions is why these arrows point in the same direction.

Hawking discusses this in an accessible way in "A Brief History of Time". His explanation for the thermodynamic and psychological arrows is simple and elegant. Our brain is essentially a computer that processes input data. Regardless of whether it orders the data or erases them, it expends energy, which is released as heat. A careful calculation shows that the total entropy of the computer's memory and its environment increases, and so time for it flows in the direction of increasing entropy.

This year's paper asks roughly the same question, but the approach to the answer is a little different. The authors propose a thought experiment: two connected vessels, one with gas and one without; between the vessels sits a Maxwell's demon with a counter that records how many molecules have flown in each direction. The thermodynamic arrow points toward equalization of the gas concentrations. If psychological time flows the opposite way, the same counter records how many molecules will fly in each direction, a sort of memory of the future.

Now slightly shift one of the molecules at the beginning of psychological time. If the arrows of time point the same way, nothing much happens: the final state of the system is approximately the same, and the counter registers the first passages at the same moments (with perhaps small differences further into the future). If the arrows point in opposite directions, the system will not be able to return to the state "all of the gas in a single vessel", because the probability of this process is very low and it is very sensitive to initial conditions. That is, a minimal change in the initial conditions completely changes what the counter records.
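How low that probability is can be estimated with a simple count. The sketch below assumes two vessels of equal volume and molecules that move independently (an ideal gas in equilibrium), so each molecule sits in a given vessel with probability 1/2. These assumptions and the function name are illustrative and are not taken from the paper.

```python
import math

# Probability of finding all N molecules in one chosen vessel.
# Assumptions: two vessels of equal volume and independent molecules,
# so the probability is (1/2)^N. We work with log10 to avoid underflow.


def log10_prob_all_in_one_vessel(n_molecules: int) -> float:
    """log10 of (1/2)^N for N independent molecules."""
    return -n_molecules * math.log10(2.0)


for n in (10, 100, 10**6, 6 * 10**23):
    print(f"N = {n:.0e}: probability is about 10^({log10_prob_all_in_one_vessel(n):.3g})")
```

Even for a hundred molecules the chance is around 10^-30, and for a macroscopic amount of gas the exponent itself is astronomically large. That is why the reversed evolution has to be tuned so precisely.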

Thus, the memory (the counter) changes little under a weak change of the past when the arrows of time are aligned, and is radically restructured when they are opposed. In the latter case the memory can hardly be called memory at all, because it no longer preserves what happened. The authors do not stop there: they introduce some characteristics of memory, generalize the theory to other types of memory, and have a fine time discussing a variety of other interesting aspects. Remarkably, in all 8 pages they write only 4 formulas and draw one picture.

Instead of a conclusion

It is nice to see a deeply philosophical publication on the pages of one of the leading physics journals. It is no less pleasant to realize that we are a few steps closer to quantum computing. Let's look forward to new and interesting work in the coming year.

Source: geektimes.ru/post/243715/