
The present and future of neuroimplants and neural interfaces

A short note on how neuroimplants in particular, and neural interfaces in general, work, what their current technical limitations are, and where they might go in the future.

I think it is no secret to most people that neuroimplants are already a reality. Cochlear implants are one example. There are also bionic prostheses and implants that restore vision.

A very impressive video featuring Nigel Ackland, in the style of Deus Ex, naturally:

On the other hand, given the obvious success of prostheses, why do today's neural interfaces manage to "transmit only a few bits of information per minute"?

It would seem that if we have learned to control bionic prostheses in real time (even with feedback in the form of sensations), we should be able to transmit information just as easily. Why, then, can we suddenly barely get any information through?
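One common way BCI researchers arrive at a "bits per minute" figure is the Wolpaw information transfer rate, which combines the number of choices N, the classification accuracy P, and the selection speed. The article does not say which metric its figure comes from, so treat this as an illustration only. A minimal Python sketch, assuming errors are spread evenly over the wrong targets; the speller numbers in the example are hypothetical.

```python
import math


def itr_bits_per_selection(n_targets: int, accuracy: float) -> float:
    """Wolpaw information transfer rate, in bits per selection.

    n_targets: number of equally likely choices offered to the user (N).
    accuracy:  probability P that a selection is decoded correctly.
    Assumes errors are distributed evenly over the N - 1 wrong targets.
    """
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    n, p = n_targets, accuracy
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the error term is zero when every selection is correct
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits


def itr_bits_per_minute(n_targets: int, accuracy: float,
                        selections_per_minute: float) -> float:
    """Scale the per-selection rate by how many selections fit in a minute."""
    return itr_bits_per_selection(n_targets, accuracy) * selections_per_minute


# Hypothetical EEG speller: 4 choices, 90% accuracy, 5 selections per minute.
print(round(itr_bits_per_minute(4, 0.9, 5), 2))  # → 6.86
```

Even a perfectly accurate 4-choice selector caps out at 2 bits per selection, so a slow selection rate alone keeps such an interface in the "few bits per minute" range.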

This significant difference arises because in one case we are working with the peripheral nervous system and in the other with the central nervous system.

The first case is relatively simple. There are bundles of nerves and a reasonably good model (understanding) of how they work. For muscles: an impulse arrives and the muscle contracts, and vice versa. Transmitting sensations is also "simple": the existing nerves are used as "ports". Cochlear and visual implants work in roughly the same way.

It is not difficult to "ping" the nerves and learn to read and send signals.

For building a "neural interface", however, this approach is of little use, simply because what we need is an additional interface, and the peripheral nervous system has no free "port" to connect it to. Building a fairly serious neural interface that connects to the nerves of the limbs (the same way bionic prostheses connect) is not much of a problem today. It would take time to learn, but people could retrain and get used to it. Pulses of muscle contraction would then encode arbitrary information, for example characters, much as in touch typing.
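To make "pulses of muscle contraction encode arbitrary information" concrete, here is a minimal Python sketch that uses a single hypothetical on/off channel and a fixed 5-slot binary code per character (1 = contract, 0 = rest). The alphabet and code length are illustrative assumptions; a real nerve interface would also have to deal with timing, noise and fatigue.

```python
# Hypothetical alphabet: space plus the 26 Latin letters (27 symbols fit in 5 bits).
ALPHABET = " abcdefghijklmnopqrstuvwxyz"
BITS_PER_CHAR = 5  # 2**5 = 32 >= 27 symbols


def encode(text: str) -> list[int]:
    """Turn text into a flat pulse train: 1 = contract, 0 = rest."""
    pulses = []
    for ch in text.lower():
        index = ALPHABET.index(ch)  # raises ValueError for unsupported characters
        # Most significant bit first, one time slot per bit.
        pulses.extend((index >> shift) & 1 for shift in range(BITS_PER_CHAR - 1, -1, -1))
    return pulses


def decode(pulses: list[int]) -> str:
    """Inverse of encode: read fixed-size groups of pulses back into characters."""
    if len(pulses) % BITS_PER_CHAR:
        raise ValueError("incomplete character at the end of the pulse train")
    chars = []
    for start in range(0, len(pulses), BITS_PER_CHAR):
        index = 0
        for bit in pulses[start:start + BITS_PER_CHAR]:
            index = (index << 1) | bit
        if index >= len(ALPHABET):
            raise ValueError(f"code {index} is not assigned to any character")
        chars.append(ALPHABET[index])
    return "".join(chars)


print(encode("hi"))  # → [0, 1, 0, 0, 0, 0, 1, 0, 0, 1]
print(decode(encode("hello world")))  # → hello world
```

A fixed-length code keeps decoding trivial; a variable-length code (shorter patterns for frequent letters) would be faster to "type" but harder to learn.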

But clearly, almost no one wants to sacrifice a limb or another part of the body for the sake of such an interface...

So for now, the main effort goes into a rather awkward workaround: using EEG and translating patterns of brain activity into commands or information.

By and large, this approach is a dead end, or at best a very niche one.

The problem is that the "resolution" of EEG is orders of magnitude coarser than the level at which the brain encodes information. To simplify drastically, the state of a neuron can be compared to a bit: 0 means inactive, 1 means active (it fires an electrical impulse). In reality neurons and the processes involved are far more complex, but this simplification is enough for our purposes. So, to encode an abstract idea, assuming we can both influence and read the state of individual neurons, we would need tens, hundreds or thousands of neurons. It is hard to say exactly because the processes are not yet sufficiently understood, but it may well be that tens to hundreds of specialized neurons are enough to form a stable, specific skill, such as the skill of "sending a particular command to the computer". This would be just like commanding a finger to tap a letter on the keyboard, with the same accuracy and precision, and with minimal energy expenditure. Ideally, the "mental energy" needed to transmit a character would be several times smaller than that needed to press a key, because there would be no need to activate the motor neurons and the periphery.
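Under the author's "neuron as a bit" simplification, the lower bound on how many neurons are needed is plain arithmetic: k on/off units can label 2^k distinct commands. A minimal Python sketch; the redundancy factor (several units per bit, read out by majority vote) is an added assumption meant to reflect that real neurons are noisy, and the keyboard-sized command set in the example is hypothetical.

```python
import math


def min_binary_units(n_commands: int) -> int:
    """Fewest on/off units whose joint states can label n_commands distinct commands."""
    if n_commands < 2:
        raise ValueError("need at least two commands to distinguish")
    return math.ceil(math.log2(n_commands))


def units_with_redundancy(n_commands: int, copies_per_bit: int) -> int:
    """Same lower bound, but each bit is carried by several units.

    copies_per_bit is best chosen odd, so that a majority vote cannot tie.
    """
    return min_binary_units(n_commands) * copies_per_bit


# Hypothetical: about 100 distinct "keys", each bit backed by 9 noisy neurons.
print(min_binary_units(100))          # → 7
print(units_with_redundancy(100, 9))  # → 63
```

Even with generous redundancy, the count lands in the "tens to hundreds" range the paragraph suggests, which is why reading individual neurons rather than averaged potentials matters.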

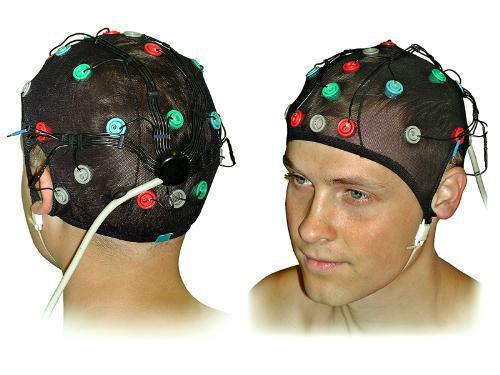

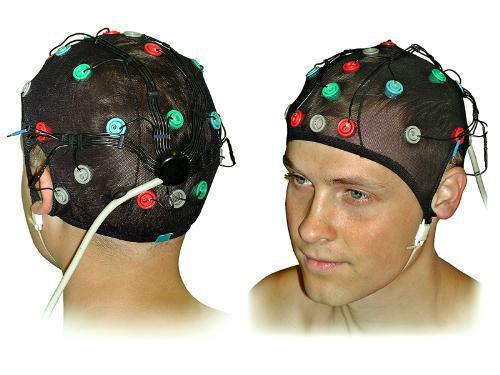

Back to EEG: the potentials are picked up from the scalp by 16, 24 or 32 electrodes. Consider the difference in proportions: the number of neurons in the cortex versus a miserable few dozen electrodes. EEG, like MRI, gives only a very general picture of which brain areas are working and how active they are. The resolution is enough to tell whether a person is asleep or awake, to assess their level of attention, and to estimate the total activity of a fairly large volume of cells.
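The difference in proportions can be put in numbers. A minimal Python sketch, assuming roughly 16 billion neurons in the human cerebral cortex (a commonly cited estimate, used here only as an order of magnitude). The ratio is crude: a scalp electrode does not "see" an equal share of neurons, and the signal is dominated by the summed, synchronized activity of large populations.

```python
# Assumption: commonly cited order-of-magnitude estimate for the human cerebral cortex.
CORTICAL_NEURONS = 16e9


def neurons_per_electrode(n_electrodes: int, neurons: float = CORTICAL_NEURONS) -> float:
    """Average number of cortical neurons per scalp electrode (a very rough ratio)."""
    return neurons / n_electrodes


for electrodes in (16, 24, 32):
    print(f"{electrodes} electrodes: ~{neurons_per_electrode(electrodes):.1e} neurons each")
```

Hundreds of millions of neurons per channel is why EEG can show global states such as sleep or attention but not the state of individual cells.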

The obvious solution is to record the electrical activity of specific neurons. If we manage to reliably record the individual activity of a number of neurons in the cortex, we can learn to send any commands and build interfaces as complex, versatile and responsive as our own voice or movements.

For neurons it makes little difference: they have two states, active and inactive. On the receiving device's side, it is not difficult to map the activity of specific neurons to any machine code.
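One simple way to map recorded on/off activity to machine codes is nearest-template decoding: each command gets a fixed activity pattern across the recorded neurons, and an observed pattern is assigned to the closest template by Hamming distance. A minimal Python sketch; the 8-neuron templates and command names are hypothetical, and real decoders typically work with firing rates over time windows and statistical models rather than clean bits.

```python
# Hypothetical templates: on/off patterns across 8 recorded neurons.
# Every pair of templates differs in at least 3 positions, so any single
# misread neuron still decodes to the intended command.
TEMPLATES = {
    "LEFT":  (1, 1, 0, 0, 1, 0, 0, 0),
    "RIGHT": (0, 0, 1, 1, 0, 1, 0, 0),
    "CLICK": (0, 0, 0, 0, 0, 0, 1, 1),
    "STOP":  (1, 0, 1, 0, 0, 0, 0, 1),
}


def hamming(a, b) -> int:
    """Number of positions where two equal-length patterns differ."""
    return sum(x != y for x, y in zip(a, b))


def decode(activity, templates=TEMPLATES, max_errors=1):
    """Return the command whose template is closest to the observed activity,
    or None if even the best match differs in more than max_errors neurons."""
    best_cmd, best_dist = None, None
    for cmd, pattern in templates.items():
        if len(pattern) != len(activity):
            raise ValueError("activity vector has the wrong length")
        dist = hamming(activity, pattern)
        if best_dist is None or dist < best_dist:
            best_cmd, best_dist = cmd, dist
    return best_cmd if best_dist <= max_errors else None


print(decode((1, 1, 0, 0, 1, 0, 0, 1)))  # one neuron misread → LEFT
print(decode((1, 1, 1, 1, 1, 1, 1, 1)))  # matches nothing well → None
```

Rejecting ambiguous patterns instead of guessing trades speed for reliability; the monitor feedback mentioned next would let the user simply try again.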

Feedback is easy to arrange in the usual way: through an image on a monitor, and so on.

And if we can control the activity of arbitrary neurons, we can, conversely, "send information to the brain". This, however, is much more complicated. Unlike the first direction, it requires far more knowledge about the individual structural features of each particular brain.

Incidentally, we have already learned to record the activity of individual neurons, and to influence it as well. The problem is that this literally requires inserting a very thin electrode into the brain.

For obvious reasons, this is not done on people. So I think no one has yet been able to test how many neurons are "sufficient to create a neural interface". Such methods are typically used to test theories of how the brain works in animals.

Other drawbacks:

Only a few neurons can be studied at a time, and only for a few weeks.

Neurons in contact with the electrode can be damaged mechanically.

For neuroimplants in humans:

the electrodes have to be extremely small; brain surgery is required; knowledge is lacking.

Other potential directions:

Nanotechnology. If we manage to build a nanoscale sensor that attaches to a neuron and reads its impulses, there will be no need to open the skull and insert electrodes into the brain. Besides creating such a nanorobot, the problem of delivering it to the right target would also have to be solved. Clearly not the near future.

Improved scanning: learning to scan the activity of individual neurons in the cerebral cortex. Given the current size and capabilities of MRI, obviously not the near future either.

Bionics (neuroimplants for the peripheral nervous system) is impressive and will develop rapidly. There are no fundamental obstacles to rapid progress there; the existing knowledge is sufficient.

Neuroimplants for the central nervous system have moved from the category of "fiction" into the category of "real, but requiring a different level of progress". Medical technology needs to improve, and knowledge of psychophysiology needs to develop.

Neural interfaces in general: those based on EEG or MRI are a dead end unless fundamentally new scanning devices are created. As interfaces for displaying states of consciousness and for biofeedback, however, they are quite justified.

Source: geektimes.ru/post/242134/