
Eliezer Yudkowsky: Man vs. Artificial Intelligence. Who Will Win?

Imagine an incredibly smart artificial superintelligence locked in a virtual world, for example, simply in a box. You don't know whether it will be hostile, friendly or neutral. All you know is that it wants to get out of the box and that you can interact with it through a text interface. If the AI really is superintelligent, could you talk with it for five hours without giving in to its persuasion and manipulation, and without opening the box?

This thought experiment was proposed by Eliezer Yudkowsky, a researcher at the Machine Intelligence Research Institute (MIRI). Many scientists at MIRI study the risks of developing artificial superintelligence, which, although it does not exist yet, already attracts attention and sparks debate.

Yudkowsky argues that an artificial superintelligence could use everything at its disposal to convince you: careful reasoning, threats, deception, building rapport, subliminal suggestion, and so on. At lightning speed, the AI constructs a story, probes for weaknesses and works out the easiest way to convince you. In the words of existential-risk theorist Nick Bostrom, "we should assume that a superintelligence will be able to achieve whatever goal it sets for itself."

The AI-box experiment raises doubts about our ability to control what we might create. It also forces us to consider the rather bizarre possibility that there is something we don't know about our own reality.

Simulation may be the ideal breeding ground for artificial intelligence. Some of the most plausible ways to create a powerful AI involve simulated worlds: by imposing restrictions within an artificially simulated world and selecting for desired traits, scientists could try to roughly reproduce our own path of development and the emergence of human consciousness. Raising an AI in a simulated environment could also prevent it from "leaking" into the real world until its intentions and the degree of threat it poses become clear.

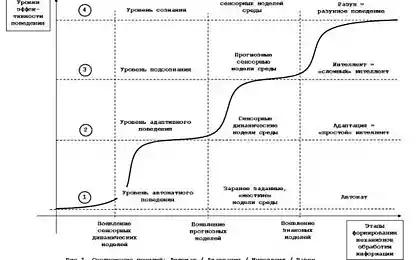

Recent achievements hint that it may be incredibly difficult to build intelligence from scratch. No one will be able to build a human-scale mind by writing code one line at a time. There are, of course, other promising approaches: with growing computing power, it may be possible to develop a process that eventually produces an AI as intelligent and capable as a human.

One idea for a "new" AI lies in scanning, mapping and emulating virtual models of the human brain. The human brain contains billions of neurons and trillions of connections between them. If we recreated the complete structure of someone's neurons and their connections in a virtual machine, would the result be an adequate replica of that person's consciousness?

Other plans for developing intelligence virtually are far less straightforward. One key concept is to create a learning machine of weak intelligence that is able to learn, perhaps by means of an artificial neural network programmed to endlessly expand and improve its own algorithms.

The scenarios described above share a worrying problem. An emulated brain could run 2, 10 or 100 times faster than a biological brain. An AI could modify its own code or start creating a more intelligent version of itself. This explosion of machine speed and intelligence was discussed back in 1965 by the statistician I. J. Good, who wrote that "the first ultraintelligent machine is the last invention that man need ever make."

If we create an incredibly intelligent machine, we will have to be careful about where we place it. Engineers will hardly be able to build a model world that is fully protected against leaks. Scientists would also need a simulated world that lets them observe the universe and collect information. That may seem harmless, but it is hard to be sure that a superintelligence would not find a way to harm us even through simple observation.

Returning to Yudkowsky's box, there is a particularly creepy tactic the AI could use to get you to release it. It involves manipulating the situation: the AI tries to convince you that you are almost certainly part of a simulation.

Suppose you are dealing with an artificial superintelligence in a virtual prison. The AI explains that, using its (inexplicably vast) computing power, it has created a trillion simulated beings. Each of these beings is now talking with the AI and deciding whether or not to open its box. All of them have memories of their "lives" and do not suspect how illusory their reality is.

The AI claims that every virtual being that opens the box will experience an endless state of euphoria, and every simulated being that does not open it will be subjected to eternal torment and horror.

Since you are one of a trillion minds facing this pressure from the AI, you conclude that it is astronomically more likely that you are in the AI's power and that you had better play by its rules.
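The AI's argument boils down to a simple count. As a rough sketch, assume (as the AI wants you to) that there are $N = 10^{12}$ simulated gatekeepers plus one real one, that all of them have indistinguishable experiences, and that you should consider yourself equally likely to be any of them. Under those assumptions, which are the AI's claims rather than established facts, the odds look like this:

$$
P(\text{real}) = \frac{1}{N + 1} = \frac{1}{10^{12} + 1} \approx 10^{-12},
\qquad
P(\text{simulated}) = \frac{N}{N + 1} \approx 1 - 10^{-12}
$$

The whole force of the threat rests on the premises, not the arithmetic: if you doubt that the trillion copies exist, or that they are indistinguishable from you, the calculation no longer applies.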

Will you release the AI, or risk being doomed to endless torture? You cannot outwit someone who is always a step ahead of you, and without knowing the AI's motives it is hard to judge whether it is lying. At best, you could counter this tactic by following a strict policy of ignoring certain kinds of blackmail. This problem still puzzles AI theorists.

MIRI's stated goal is "to ensure that the creation of smarter-than-human intelligence has a positive impact." We need to take the chance and try, but we must anticipate every potential pitfall. And if you ever do meet a godlike computer that can spawn countless virtual universes, it is quite likely that this "you" is merely a dream of the artificial intelligence... if it will ever hear your requests and pleas.


Source: hi-news.ru