Creation of artificial intelligence

As a result of my research, I stopped using the phrase “artificial intelligence” myself, as too vague, and arrived at another formulation: an algorithm for self-learning, exploration, and application of the results found to solve any possible problem.

Much has been written about what AI is. I put the question differently: not “what is AI?” but “why is AI needed?” I need it to make a lot of money, then to have the computer do everything I don't want to do myself, and then to build a spaceship and fly to the stars.

Here I will describe how to get the computer to fulfill our desires. If you expect a description, or even a mention, of how consciousness works, what self-awareness is, or what it means to think or reason, you will not find it here. Thinking is not what computers do. Computers compute, calculate, and execute programs. So we will work out how to make a program that can calculate the sequence of actions needed to realize our desires.

The form in which our task reaches the computer (through the keyboard, a microphone, or sensors implanted in the brain) does not matter; it is secondary. If we can get the computer to fulfill wishes written as text, we can then ask it to write a program that fulfills wishes given through a microphone. Image analysis is likewise not needed at the start.

To claim that an AI can recognize images and sound only if such algorithms are built into it from the start is like claiming that everyone who created those algorithms knew from birth how they work.

Here are the axioms:

1. Everything in the world can be calculated according to some rules.

(Quantum uncertainty and inaccuracies will be discussed.)

2. The calculation according to the rule is an unambiguous dependence of the result on the initial data.

3. Any unambiguous dependence can be found statistically.

And now the claims:

4. There is a function of converting text descriptions into rules, so that you do not need to search for knowledge that has long been found.

5. There is a function of transforming tasks into solutions (this is the fulfillment of our desires).

6. The rule of predicting arbitrary data includes all other rules and functions.

Let's translate this into programming language:

1. Everything in the world can be calculated by algorithms.

2. The algorithm always gives the same result when the original data is repeated.

3. Given many examples of initial data and their results, and infinite search time, you can find the whole set of possible algorithms that implement this dependence between initial data and result.

4. There are algorithms for converting text descriptions into algorithms (or into any other data), so that the required algorithms need not be searched for statistically if someone has already found and described them.

5. It is possible to create a program that will fulfill our desires, whether given as text or by voice, provided that these desires are physically realizable within the required time frame.

6. If you can create a program that predicts, and that learns to predict as new data arrives, then after infinite time such a program will include all the algorithms possible in our world. With finite time, for practical use and with some error, it can be made to execute the algorithms of the program from claim 5, or any others.

And IMHO:

7. There is no other way of learning that is completely autonomous and independent of a human, except by searching for rules and checking them statistically against forecasts. You just need to learn how to use this property. This is the principle on which our brain works.

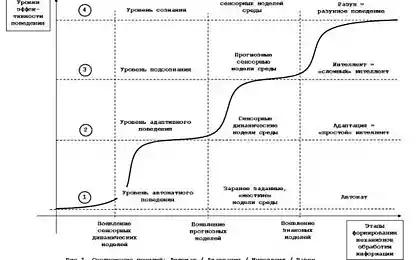

What to predict. From birth, information starts flowing into the human brain from the eyes, ears, touch, and so on, and all decisions are made on the basis of data received earlier. By analogy, we make a program whose input receives new information one element at a time: an input byte stream. Everything that arrived earlier is kept as one continuous list. Values from 0 to 255 carry external information, and values above 255 serve as special control markers. That is, each input element can hold a number up to, say, 0xFFFF. It is this stream, or rather the next element to be added to it, that the program must learn to predict from the data that came before. In other words, the program should try to guess which number will be added next.

Of course, other ways of presenting the data are possible, but when all sorts of formats arrive at the input (at first we simply stuff in assorted HTML pages with descriptions), this one is the most suitable. The markers could be replaced with escape sequences as an optimization, but that makes the explanation less convenient. (Also, let's assume everything is ASCII, not UTF.)

So first, just as at birth, we feed in web pages with descriptions one after another, separating them with a new-text marker, so that this black box learns everything. After a certain amount of data, we begin to manipulate the incoming information using control markers.
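The input stream described above can be sketched roughly as follows. This is only an illustration under assumptions: the type name `Symbol`, the marker value `kNewText`, and the helper functions are hypothetical, since the text fixes only that values 0 to 255 are data and anything above 255 is a control marker.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// One stream element: 0..255 is external data, anything above is a marker.
using Symbol = std::uint16_t;

// Hypothetical marker value; the text only requires it to be above 255.
constexpr Symbol kNewText = 0x0100;

bool is_marker(Symbol s) { return s > 0xFF; }

// Converts a data symbol back to its byte; markers carry no byte value.
unsigned char to_byte(Symbol s) {
    assert(!is_marker(s));
    return static_cast<unsigned char>(s);
}

// Appends one text to the stream, preceded by the new-text marker.
void append_text(std::vector<Symbol>& stream, const std::string& text) {
    stream.push_back(kNewText);
    for (unsigned char c : text) {
        stream.push_back(c);
    }
}
```

Keeping markers outside the byte range means no escaping is needed: any byte value can appear in the data without being mistaken for a control marker.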

By forecasting I mean an algorithm that not only knows the patterns it has already seen but also keeps looking for new ones. So if you send such a program the sequence

skyblue

grassgreen

Ceiling

, then it must understand that after the marker comes the color of the previously named object, and in place of the ellipsis it will predict the most likely color of the ceiling.

We repeated several examples so that it would understand which function to apply within these tags. The color itself, of course, it should not invent; it should already know it from its own study of patterns during forecasting.

When the algorithm is required to respond, the prediction from the previous step is fed to the input of the next step. This is a kind of autoprediction (by analogy with the word autocorrelation).
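The feedback loop just described might look like the sketch below. The predictor itself is left abstract; the names `Predictor`, `autopredict`, `end_marker`, and `max_steps` are assumptions introduced for illustration and are not part of the original description.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

using Symbol = std::uint16_t;

// Any callable that guesses the next symbol from everything seen so far.
using Predictor = std::function<Symbol(const std::vector<Symbol>&)>;

// Produces an answer by feeding each prediction back in as the next input.
// Stops at the (hypothetical) end marker or after max_steps symbols.
std::vector<Symbol> autopredict(std::vector<Symbol> history,
                                const Predictor& predict_next,
                                Symbol end_marker,
                                std::size_t max_steps) {
    assert(static_cast<bool>(predict_next));
    std::vector<Symbol> answer;
    for (std::size_t i = 0; i < max_steps; ++i) {
        Symbol next = predict_next(history);
        if (next == end_marker) {
            break;
        }
        answer.push_back(next);
        history.push_back(next);  // the prediction becomes part of the input
    }
    return answer;
}
```

The step limit is a practical safeguard: a predictor that never emits the end marker would otherwise loop forever.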

The other option is to put a question after the first marker and the answer after the second. Then, if this algorithm is super-mega-cool, it should start giving answers to even the most difficult questions. Again, within the facts it has already studied.

You can come up with many more tricks using control markers fed to the input of the predictive mechanism, and obtain any desired function. In particular, it can be combined with the Q-learning algorithm to obtain the sequences of instructions needed to control any mechanism. We will return to the control markers later.

What is this black box made of? First, it is worth saying that one hundred percent accurate forecasting, always and in all situations, is impossible. On the other hand, if the output is always the number zero, that is also a forecast, just one with a nearly total error. Now let's count how often each number is followed by each other number. For every number we then know its most likely successor. So we can already predict a little. This is the first step of a very long journey.
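This first step, counting which number most often follows which, can be sketched as below. It is a minimal illustration, not the full mechanism; the class name `SuccessorCounts` and its methods are assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

using Symbol = std::uint16_t;

// Counts, for every symbol, how often each other symbol follows it.
class SuccessorCounts {
public:
    void observe(const std::vector<Symbol>& stream) {
        for (std::size_t i = 1; i < stream.size(); ++i) {
            ++counts_[stream[i - 1]][stream[i]];
        }
    }

    // Most frequent successor of prev. For a symbol never seen before,
    // falls back to the "always zero" forecast mentioned in the text.
    Symbol predict(Symbol prev) const {
        auto it = counts_.find(prev);
        if (it == counts_.end()) {
            return 0;
        }
        Symbol best = 0;
        std::size_t best_count = 0;
        for (const auto& entry : it->second) {
            if (entry.second > best_count) {
                best = entry.first;
                best_count = entry.second;
            }
        }
        assert(best_count > 0);  // a recorded symbol has at least one successor
        return best;
    }

private:
    std::map<Symbol, std::map<Symbol, std::size_t>> counts_;
};
```

On the stream 1, 2, 1, 2, 1, 3 the symbol 1 is followed by 2 twice and by 3 once, so `predict(1)` gives 2.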

An unambiguous mapping from initial data to a result by an algorithm corresponds to the mathematical definition of a function, except that the definition of an algorithm does not fix the amount and placement of the input and output data. As an example, take a small table, object-color, with several rows: sky-blue, grass-green, ceiling-white. This is a small, local, unambiguous mapping function. It does not matter that in reality the colors are often different; those cases will have their own tables. Any database that stores remembered properties of something is a set of functions that map object identifiers to their properties.

For simplicity, in many places below I will use the term function instead of algorithm, meaning a one-parameter function unless stated otherwise. In all such places, keep in mind that the idea is meant to extend to algorithms.

The description I give is approximate, because I have not actually implemented all this yet. But it seems logical. Note also that all calculations are carried out with coefficients, not with true and false.

Any algorithm, especially one that operates on integers, can be broken down into a set of conditions and transitions between them. Addition, multiplication, and similar operations also decompose into sub-algorithms of conditions and transitions. There is also a result operator, which is not the same as a return statement. A condition operator takes a value from somewhere and compares it to a constant. A result operator puts a constant value somewhere. The location to read from or write to is computed relative to either a base point or the previous steps of the algorithm.

    struct t_node {
        int type;  // 0 is a condition, 1 is a result
        union {
            struct {  // condition operator
                t_node*  source_get;
                t_value* compare_value;
                t_node*  next_if_then;
                t_node*  next_if_else;
            };
            struct {  // result operator
                t_node*  dest_set;
                t_value* result_value_value;
            };
        };
    };

Offhand, something like that. The algorithm is built from these elements.

Each predicted point is calculated by a function. Attached to the function is a condition that tests whether the function is applicable at that point. Together they return either false (not applicable) or the result of the function. Continuous forecasting of the stream is then a repeated test of the applicability of all functions found so far, computing each one whose condition holds. And so on for every point.
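A function paired with its applicability condition could be sketched like this. The names `Forecast`, `GuardedFunction`, and `forecast_point` are hypothetical, and the sketch returns a plain yes/no applicability flag, whereas the text says the real calculations would use coefficients.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <vector>

using Symbol = std::uint16_t;
using History = std::vector<Symbol>;

// Either "not applicable" or the computed result.
struct Forecast {
    bool applicable;
    Symbol value;
};

// A function together with the condition that tests its applicability.
struct GuardedFunction {
    std::function<bool(const History&)> condition;
    std::function<Symbol(const History&)> compute;

    Forecast apply(const History& history) const {
        assert(condition && compute);
        if (!condition(history)) {
            return {false, 0};
        }
        return {true, compute(history)};
    }
};

// Forecasting one point: test every known function, keep applicable results.
std::vector<Symbol> forecast_point(const std::vector<GuardedFunction>& functions,
                                   const History& history) {
    std::vector<Symbol> results;
    for (const auto& f : functions) {
        Forecast r = f.apply(history);
        if (r.applicable) {
            results.push_back(r.value);
        }
    }
    return results;
}
```

This version checks every function linearly at every point; it does not attempt the tree arrangement of conditions.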

Besides applicability, there are distances: the offset between the initial data and the result. For the same function this distance can differ depending on the condition. (There is likewise a distance from the condition to the initial or predicted data; it is implied below but omitted from the explanations.)

As functions accumulate, the number of conditions testing their applicability grows too. In many cases, however, these conditions can be arranged as trees, so that ruling out the inapplicable functions takes time roughly logarithmic in their number.

When a function is first created and measured, the result operator is replaced by an accumulating distribution of the actual results. Once enough statistics have accumulated, the distribution is replaced by the most likely result, and the function is given a preceding condition, which is itself tested for how well it maximizes the probability of that result.

This is the search for single correlated facts. Having accumulated many of them, we try to combine them into groups. We look for a shared condition and a shared distance from the initial value to the result. We also check that, under those conditions and distances, other cases where the initial value repeats do not show a wide spread of results. That is, across the known frequent cases the mapping is highly consistent.

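Identity coefficient. (This is a bidirectional identity, but the identity is often unidirectional. I will rethink the formula later.)

Let $n_{xy}$ be the number of times the pair $(x, y)$ occurs, $n_x$ the number of times the value $x$ occurs, and $n_y$ the number of times the value $y$ occurs. Square the count of each pair and add them up. Divide by the sum of the squared counts of each value of X, plus the sum of the squared counts of each value of Y, minus the numerator:

$$K = \frac{\sum_{x,y} n_{xy}^2}{\sum_x n_x^2 + \sum_y n_y^2 - \sum_{x,y} n_{xy}^2}$$

This ratio lies between 0 and 1.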
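The ratio above can be computed directly from a list of observed (X, Y) pairs. The sketch below counts how often each pair, each X value, and each Y value occurs, then applies the formula; the function name `identity_coefficient` and the use of strings for values are assumptions made for the example.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

using Pair = std::pair<std::string, std::string>;

// Sum of squared pair counts, divided by (sum of squared X counts
// + sum of squared Y counts - sum of squared pair counts).
double identity_coefficient(const std::vector<Pair>& observations) {
    std::map<std::string, double> count_x;
    std::map<std::string, double> count_y;
    std::map<Pair, double> count_xy;
    for (const auto& p : observations) {
        count_x[p.first] += 1.0;
        count_y[p.second] += 1.0;
        count_xy[p] += 1.0;
    }

    double sum_xy = 0.0, sum_x = 0.0, sum_y = 0.0;
    for (const auto& kv : count_xy) sum_xy += kv.second * kv.second;
    for (const auto& kv : count_x) sum_x += kv.second * kv.second;
    for (const auto& kv : count_y) sum_y += kv.second * kv.second;

    double denominator = sum_x + sum_y - sum_xy;
    assert(denominator >= sum_xy);  // so the ratio never exceeds 1
    return denominator > 0.0 ? sum_xy / denominator : 0.0;
}
```

For a one-to-one mapping such as sky-blue, sky-blue, grass-green, every pair count equals the counts of its X and Y values, so the ratio is exactly 1. For sky-blue, sky-gray it is 2 / (4 + 2 - 2) = 0.5. Returning 0 for an empty list is a choice made here, not something the text specifies.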

And here is what we get. The high-frequency facts have shown us that under these conditions and distances the mapping is unambiguous. The remaining rare facts (which in total far outnumber the frequent ones) will have the same error rate as the frequent facts under these conditions. So we can build up a forecasting base from isolated facts that meet these conditions.

Let there be a knowledge base. The sky is often blue, and some rare tropical thing was seen somewhere to be grayish-brownish-crimson. We remember that too, because we checked the rule and it is reliable. The principle does not depend on the language, be it Chinese or alien. Later, once the rules of translation are understood, it becomes clear that one function can be assembled from several languages.

Next, as we enumerate rules, we find that the same identity arises under other arrangements and conditions. Now we no longer need a large base to confirm the identity; it is enough to collect a dozen individual facts and see that within this dozen the mapping gives the same values as the earlier function. That is, the same function is being used under different conditions. This is why the same property can be expressed in different ways in a description, and sometimes such facts are simply listed in tables on web pages. From then on, facts for this function can be collected from several use cases at once.

Possible conditions and arrangements accumulate for each function, and we can look for patterns in those as well. Quite often the sampling rules for different functions are similar and differ only in some feature (for example, a word identifying the property, or a column title in a table).

So we have found a bunch of one-parameter functions. Now, just as single facts were grouped into one-parameter functions, let us try to group the one-parameter functions by their conditions and part of the distance. The part they have in common becomes a new condition, and the part that differs becomes the second parameter of a new two-parameter function, whose first parameter is the parameter of the one-parameter function.

It turns out that each new parameter of a multi-parameter function is found with the same linear effort as grouping single facts into one-parameter functions (well, almost the same). That is, the cost of finding an N-parameter function is proportional to N. Pushed toward very many parameters, this becomes almost a neural network. (Those who want to will understand.)

Conversion functions.

Of course, it would be wonderful to be given many matching examples, say short texts with their translations from Russian into English. Then you could start looking for patterns between them. But in reality everything is mixed together in the input stream.

So we took one function and found a path between the data. Then a second and a third. Now we check whether they share a common part. We try to find structures of the form X-P1-(P2)-P3-Y, and then other similar structures with similar X-P1 and P3-Y but a different P2. From that we can conclude that we are dealing with a complex structure that has dependencies. We combine the set of rules found, minus the middle part, into groups and call the result a conversion function. This is how translation, compilation, and other complex entities are formed.

Take a sheet of Russian text and a sheet with its translation into an unfamiliar language. Without a textbook it is extremely difficult to work out the rules of translation from these sheets. But it is possible. And roughly what you would do yourself is what needs to be turned into a search algorithm.

Once I have the simple functions worked out, I will keep mulling over the conversion search. For now there is only a sketch, and the understanding that this too is possible.

Besides the statistical search for functions, they can also be formed from descriptions, using a function that converts descriptions into rules. Examples for the initial search for such a function are abundant in online textbooks: correlations between the descriptions and the rules applied to the examples in those descriptions. It follows that the search algorithm must see both the source data and the rules applied to them in the same way, i.e. everything should live in one homogeneous data graph. By the same principle, in reverse, rules can be found for converting internal rules back into external descriptions or external programs. The same goes for the system understanding what it knows and does not know: before requesting an answer, you can ask whether the system knows the answer, yes or no.

The functions I have been talking about are not necessarily a single found piece of algorithm; they can consist of a sequence of other functions. For example, I have loosely described predicting whole words and phrases at once. To get a prediction of just one symbol, you apply to that phrase a function that takes its one character.

The probability estimate is also affected by how often the same set recurs across different functions. This forms types (how to use them still needs thought).

It should also be mentioned that many real-world sets, unlike web pages, are ordered and possibly continuous, or have other set characteristics, which somewhat improves the probability calculations.

In addition to directly measuring the found rule on examples, I assume the existence of other methods of evaluation, such as a rule classifier. Or perhaps the classifier of these classifiers.

One more nuance. Forecasting consists of two levels: the level of rules already found, and the level of searching for new rules. But the search for new rules is essentially the same kind of program with its own criteria. I have no doubt that it could be made simpler. What is needed is a zero level that searches for possible search algorithms in all their diversity, which in turn create the final rules. Or maybe it is a multi-level recursion, or a fractal.

Back to the control markers. From all these arguments about the algorithm it follows that, through the markers, we ask this black box to continue the sequence and to compute the result with a function chosen by similarity. In effect: do it the way it was shown before.

There is another way to define a function in this mechanism, through definitions. For example:

Translate into Englishtable

Respond to the question color of the skyblue

Create a program for TKI want artificial intelligence. . .

And many other use cases. I don’t even want to think about them until the algorithm is built – we’ll just play with this toy.

In general, there are no final build instructions yet. There are many open questions, both about the algorithm itself and about its use (and about the multivariance of texts). Over time I will refine and detail the description further.

Some will say that brute-force search for arbitrary patterns will take too long. In reply: a child takes several years to learn to speak. How many candidates can we evaluate in a few years? Rules that have been found and are ready apply quickly, and on computers much faster than in humans. The search for new rules is slow in both cases, but whether a computer will take longer than a person we will not know until we build such an algorithm. Note also that brute force parallelizes perfectly, and there are millions of enthusiasts who will turn on their home PCs for this purpose. So those few years can be divided by a million. And rules found by other computers can be picked up instantly, unlike the similar process in humans.

Others will argue that the brain has billions of cells working in parallel. Then the question is: how are these billions used when trying to learn a foreign language without a textbook? A person will sit over printouts for a long time, writing out correlated words. A single computer will do the same in batches in a split second.

And image analysis: set a dozen billiard balls in motion and count how many collisions there will be. Or two or three dozen... Where are the billions of cells then? In general, the brain's speed and massive parallelism is a very debatable matter.

An alternative way to implement prediction is to use recurrent neural networks (say, an Elman network). With this approach you do not need to think about the nature of forecasting, but there are many difficulties and nuances. If this approach is implemented, the rest of the usage stays the same.

When I next try to program this, I will go into more detail about the algorithm. If anyone is interested in discussing this direction, or perhaps in a joint implementation, write to me by private message or by email.

And PS: Don't ask me what I smoked. Better to offer your own alternative.

Source: geektimes.ru/post/247572/