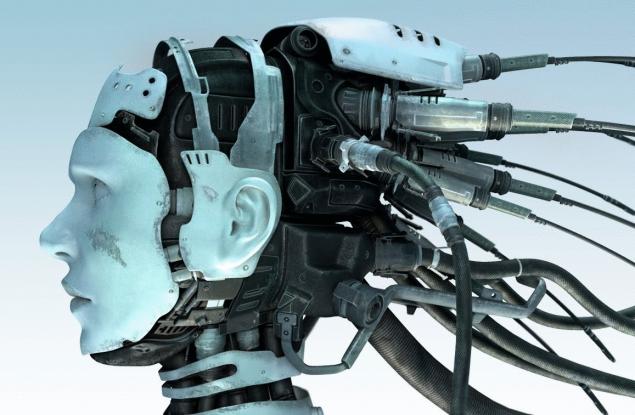

Stephen Hawking on artificial intelligence

Bashny.Net

Renowned British physicist Stephen Hawking, in an article inspired by the science fiction film "Transcendence" starring Johnny Depp, said that underestimating the threat posed by artificial intelligence may be the biggest mistake in the history of mankind.

In the article, co-authored with computer science professor Stuart Russell of the University of California, Berkeley, and physics professors Max Tegmark and Frank Wilczek of the Massachusetts Institute of Technology, Hawking points to some achievements in the field of artificial intelligence, noting self-driving cars, the voice assistant Siri, and the supercomputer that won the TV quiz show Jeopardy! against human contestants.

"All these achievements pale against what awaits us in the coming decades. The successful creation of artificial intelligence would be the biggest event in the history of mankind. Unfortunately, it might also be the last, unless we learn how to avoid the risks," the British newspaper The Independent quotes Hawking as saying.

The professors write that in the future nobody and nothing may be able to stop machines with superhuman intelligence from improving themselves. This would set off the so-called technological singularity, a period of extremely rapid technological development. In the film with Depp, the word "transcendence" is used in this sense.

Just imagine such technology surpassing humans and taking control of financial markets, scientific research, people, and the development of weapons beyond our comprehension. While the short-term impact of artificial intelligence depends on who controls it, the long-term impact depends on whether it can be controlled at all.

It is difficult to say what effects artificial intelligence may have on people. Hawking believes that little serious research is devoted to these questions outside non-profit organizations such as the Cambridge Centre for the Study of Existential Risk, the Future of Humanity Institute, the Machine Intelligence Research Institute, and the Future of Life Institute. According to him, each of us should ask what we can do now to avoid the worst-case scenario.

Source: brainswork.ru
