How should a driverless car behave when casualties in an accident cannot be avoided?

Bashny.Net

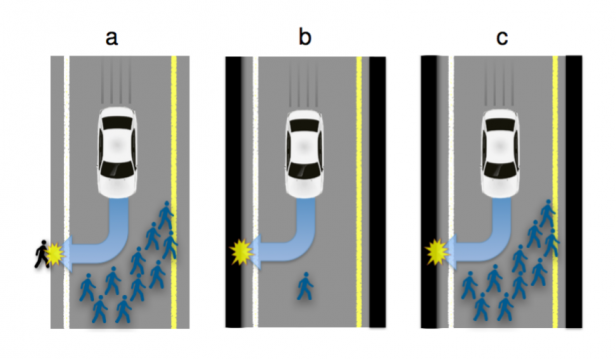

French scientists from the Toulouse School of Economics, led by Jean-Francois Bonnefon, have published a scientific paper, "Autonomous Vehicles Need Experimental Ethics: Are We Ready for Utilitarian Cars?", which tries to answer a difficult ethical question: how should a driverless car behave when an accident cannot be avoided and casualties are inevitable? The authors surveyed several hundred participants on the crowdsourcing website Amazon Mechanical Turk, which brings together people willing to perform tasks that computers, for various reasons, cannot handle. MIT Technology Review drew attention to the paper.

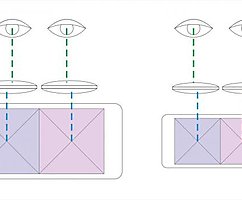

The authors approached the study quite carefully, without demanding clear yes-or-no answers from people. Here a driverless car means a truly autonomous car that is completely controlled by a computer, with no possibility for a live person to influence the situation. The authors formulated several questions illustrating situations in which the behavior of the algorithm controlling the car becomes an ethical issue. For example, suppose traffic circumstances leave only two options: avoiding a collision with a group of 10 pedestrians means driving into a crash barrier or into a crowd of people, where the number of victims is truly unpredictable. What should the algorithm do then? An even more difficult situation: what if there is only one pedestrian and one driverless car on the road? Which is ethically more acceptable: to kill the pedestrian or to kill the owner of the car?

The study revealed that people consider it "relatively acceptable" for a driverless car to be programmed to minimize human casualties in a road accident. In other words, in a potential accident involving 10 pedestrians, the algorithm would in effect sacrifice its own owner. However, the researchers received more or less definite answers only until the participants themselves were put behind the wheel of the driverless car: they refused to put themselves in the driver's place. This leads to another contradiction. Driverless cars really do get into dangerous situations much less often than human drivers, so the fewer people buy them, the more people will die in accidents in conventional cars. In other words, the buyer of a future Google car is unlikely to welcome a clause in the license agreement stating that, in the event of an unavoidable accident with casualties, the software will in fact choose to kill its owner.

Source: geektimes.ru/post/264794/
