Dedicated servers under water, literally?! Prospects for breeding fish in servers?!

Bashny.Net


We all know that water and electronics are a dangerous combination, but is that always the case? Can modern technology change that?

In this article, we consider the advantages and disadvantages of placing servers in liquid and discuss possible operational problems. We show what it can look like in practice and how it actually works. And we discuss why fish can or cannot swim in servers :)

For a long time, energy losses and cooling costs in server operation have given many people, including us, no rest: the number of servers used by our subscribers keeps growing rapidly, and we are thinking more and more about building our own data center in the foreseeable future. And when more than half of all the energy a data center consumes goes to air cooling, which cannot remove waste heat from the equipment with an efficiency factor better than about 1.7, you inevitably ask yourself how to improve cooling efficiency and minimize energy losses.

Physics tells us that air is not a very efficient conductor of heat: its thermal conductivity is about 25 times lower than that of water. It is better suited to thermal insulation than to heat removal. It also has a very low heat capacity, which means that large volumes of it must constantly be mixed and delivered to cool the equipment. Water and other liquids are a different matter. They are already used in data-center cooling systems as a heat-transfer medium to raise overall efficiency, but they do not touch the server directly: heat reaches them only through an air gap and/or a heat sink (for cooling the chipset, for example). This improves the efficiency ratio of the mechanical cooling system (mPUE) to 1.2, or even 1.15 when the ambient environment is used for cooling.
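
To put the "about 25 times" figure in context, here is a small Python sketch that compares air and water using approximate textbook property values near room temperature. The numbers in the code are assumed, rounded values, not measurements from this article. Besides thermal conductivity, it computes the volumetric heat capacity ρ·c_p, which is what matters when a fixed volume of coolant has to carry heat away.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    conductivity: float   # thermal conductivity k, W/(m*K)
    density: float        # rho, kg/m^3
    specific_heat: float  # c_p, J/(kg*K)

    @property
    def volumetric_heat_capacity(self) -> float:
        """Heat stored per cubic metre per kelvin: rho * c_p, in J/(m^3*K)."""
        return self.density * self.specific_heat


# Approximate textbook values near 25 degrees C (assumed, rounded).
AIR = Fluid("air", conductivity=0.026, density=1.18, specific_heat=1005.0)
WATER = Fluid("water", conductivity=0.60, density=997.0, specific_heat=4181.0)


def compare(a: Fluid, b: Fluid) -> tuple[float, float]:
    """Return (conductivity ratio, volumetric heat-capacity ratio) of b relative to a."""
    return (b.conductivity / a.conductivity,
            b.volumetric_heat_capacity / a.volumetric_heat_capacity)


if __name__ == "__main__":
    k_ratio, c_ratio = compare(AIR, WATER)
    print(f"water conducts heat {k_ratio:.0f}x better than air")
    print(f"water stores {c_ratio:.0f}x more heat per m^3 per K than air")
```

With these values the conductivity ratio comes out at roughly 23, in line with the "about 25 times" above, while the volumetric heat-capacity ratio is in the thousands, which is why air has to be moved in such large volumes.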

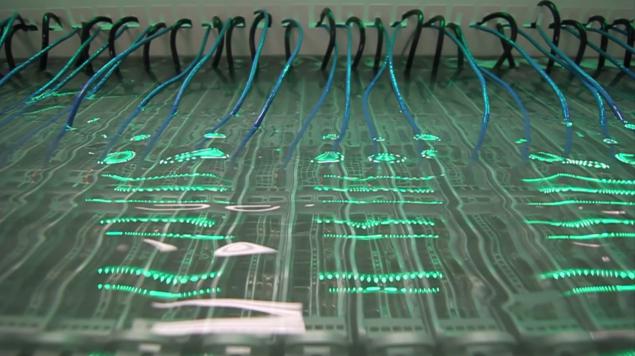

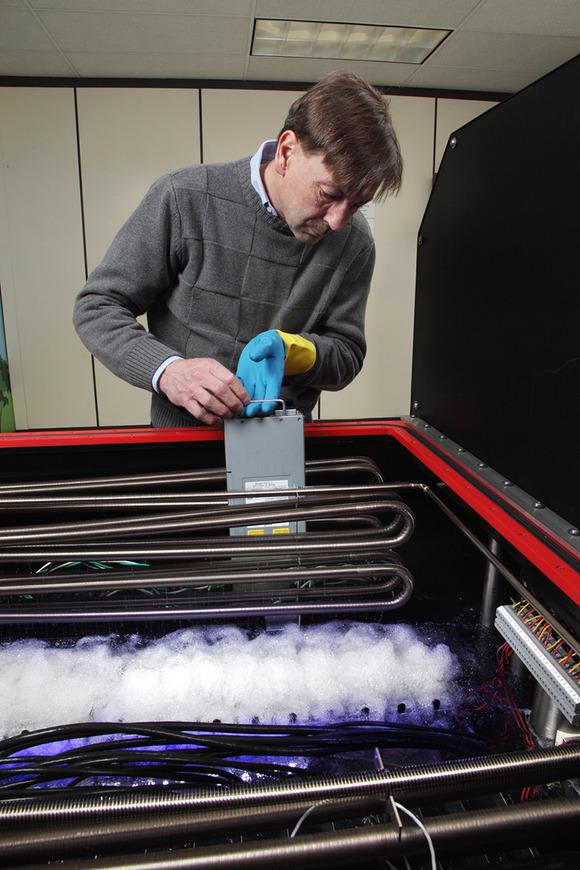

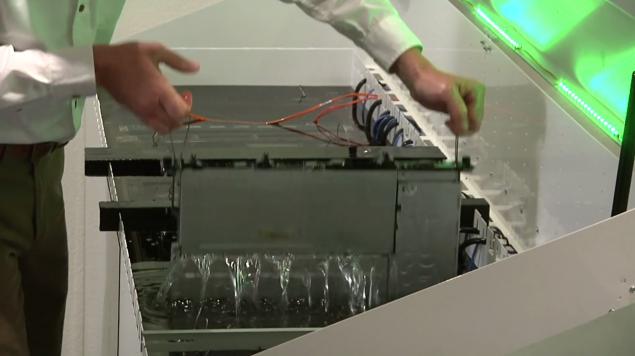

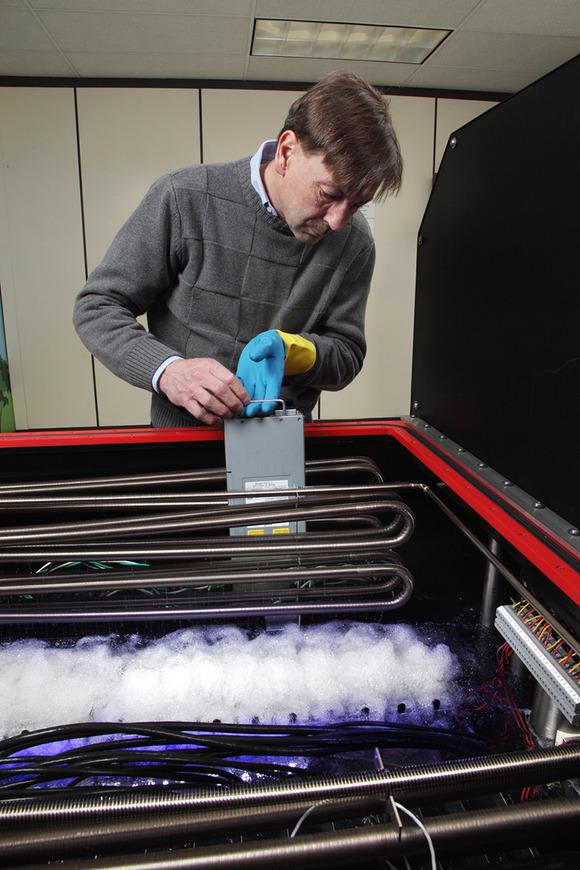

So how can a server be cooled most effectively? There is only one way: immerse it completely in a liquid (a dielectric, of course), preferably one with higher thermal conductivity and heat capacity that has no harmful effect on the server's components. Mineral oil can serve as such a dielectric. The idea, unfortunately and fortunately, is not new: several companies have been developing and implementing it for a few years, in various forms and with varying success. Modern technology makes it possible to build a "submarine" data center! But what are the advantages and disadvantages of this solution?

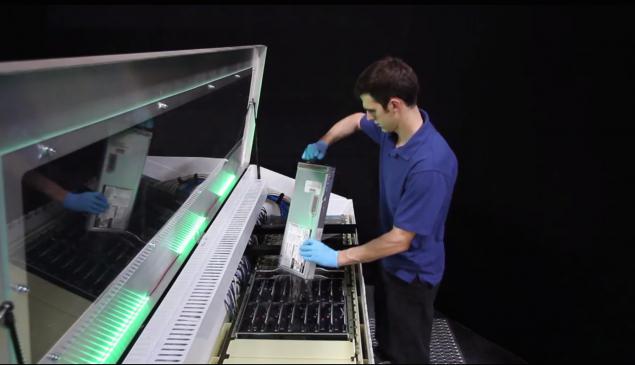

#### Advantages and disadvantages of placing servers in liquid

Liquid cooling now saves up to 95 percent of the electricity typically used for cooling in a data center and, as a consequence, 50% of all the energy the data center consumes.

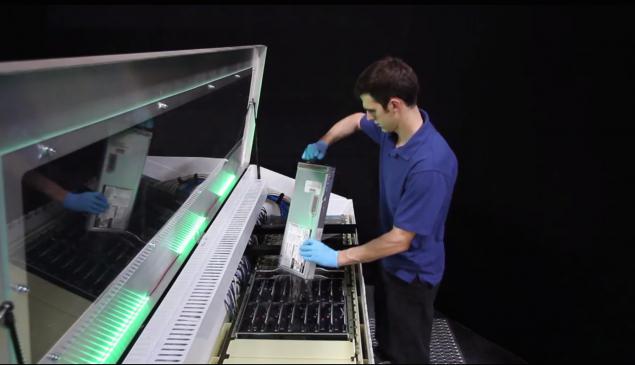

Liquid cooling makes it possible to save up to 60% of the cost of building a data center, since there is no need to buy expensive chillers or HVAC (heating, ventilation, and air conditioning) systems, build hot/cold aisles, install a raised floor, and so on.

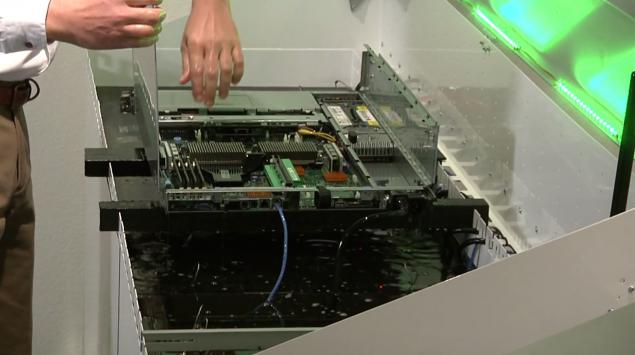

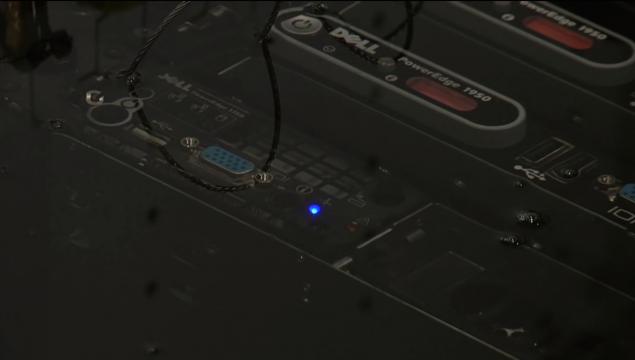

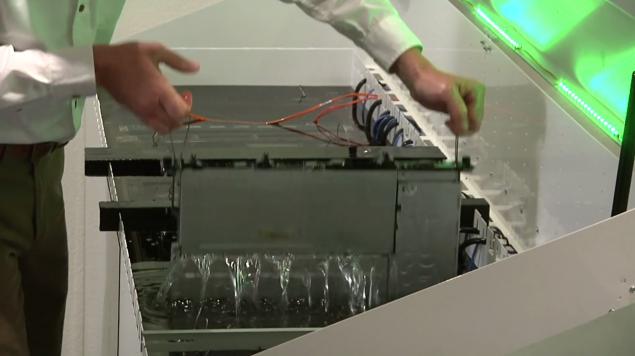

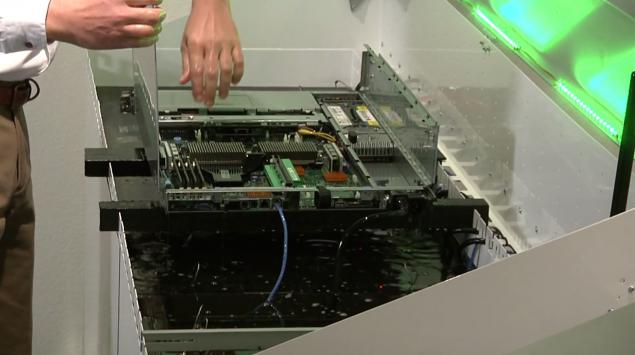

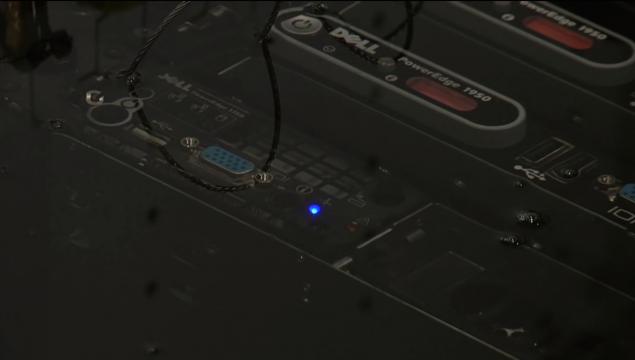

SSDs can be immersed in the coolant (while remaining operational, of course :)) without any modification, as can all the other standard components of a server except hard drives. Hard disk drives require additional devices, because they cannot spin efficiently in liquid.

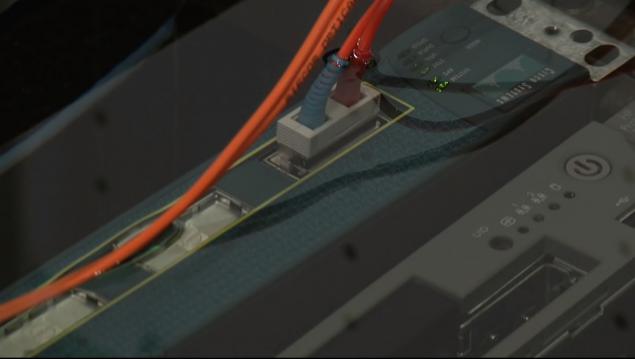

Since the coolant is a dielectric (non-conductive), there is no need to dry the servers or drain the whole system when working inside the enclosure or on a particular server. At the same time, the liquid must be non-toxic, odorless (minimal evaporation), and non-aggressive toward server components: for example, it must not dissolve the rubber insulation of wires. Choosing a suitable and effective mineral oil is not an easy task, because not every mineral oil works as a coolant. And depending on the oil, the allowable equipment power in a 42U cabinet, that is, the amount of heat the system can remove, differs.
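
How much heat a cabinet can shed depends on the oil's properties through the steady-state energy balance P = ṁ·c_p·ΔT. The sketch below solves it for the coolant flow needed to carry a given load. The 100 kW load, the 10 K temperature rise, and the oil properties (850 kg/m³, 1900 J/(kg·K)) are illustrative assumptions, not figures for any particular product or vendor.

```python
def required_flow(heat_load_w: float, density: float, specific_heat: float,
                  delta_t_k: float) -> tuple[float, float]:
    """Coolant flow needed to carry heat_load_w away with a temperature rise of delta_t_k.

    Steady-state energy balance: P = m_dot * c_p * dT  =>  m_dot = P / (c_p * dT).
    Returns (mass flow in kg/s, volumetric flow in litres/s).
    """
    if density <= 0 or specific_heat <= 0 or delta_t_k <= 0:
        raise ValueError("density, specific heat and temperature rise must be positive")
    mass_flow = heat_load_w / (specific_heat * delta_t_k)
    volume_flow_l_per_s = mass_flow / density * 1000.0  # m^3/s -> L/s
    return mass_flow, volume_flow_l_per_s


if __name__ == "__main__":
    # Hypothetical 100 kW cabinet; coolant allowed to warm by 10 K on its way through.
    oil_kg_s, oil_l_s = required_flow(100_000, density=850.0, specific_heat=1900.0, delta_t_k=10.0)
    air_kg_s, air_l_s = required_flow(100_000, density=1.18, specific_heat=1005.0, delta_t_k=10.0)
    print(f"mineral oil: {oil_kg_s:.2f} kg/s = {oil_l_s:.1f} L/s")
    print(f"air:         {air_kg_s:.2f} kg/s = {air_l_s / 1000:.1f} m^3/s")
```

Under these assumptions, roughly 6 litres of oil per second carries the same heat as more than 8 cubic metres of air per second. An oil with a higher density or specific heat needs even less flow, which is one way the choice of oil sets the cabinet's allowable power.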

Looking at the efficiency of liquid cooling as a whole, the system achieves a PUE of 1.03. But how is that possible, you ask, if mineral-oil cooling saves "only" 95% of the cooling energy? Where do the extra 2 percentage points of efficiency come from?

The answer is simple: liquid cooling also reduces the energy consumed by the servers themselves, because they no longer need fans, and because leakage currents in the chips decrease, since the chips work reliably, insulated, at a constant temperature (temperature fluctuations promote leakage currents). As a consequence, we also save on the cooling system: it can be smaller, because it has less heat to remove. This gives us those treasured 2 percentage points on cooling, but that is not all. The servers themselves start consuming 10-20 percent less energy than servers with conventional cooling. The data center's overall efficiency only improves.
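
One way to read the "2 percent" question is as plain PUE arithmetic, where PUE is total facility energy divided by IT equipment energy. Assuming, as the article says, that cooling accounts for about half of a data center's energy, and ignoring every other overhead (UPS losses, lighting, and so on), cutting cooling energy by 95% brings PUE from 2.0 down to 1.05, so reaching 1.03 requires trimming cooling further still. The sketch below is a simplified model under those assumptions, not the method behind the quoted 1.03 figure.

```python
def pue(it_energy: float, cooling_energy: float, other_overhead: float = 0.0) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return (it_energy + cooling_energy + other_overhead) / it_energy


if __name__ == "__main__":
    # Assumed baseline: IT and cooling each take half of the original total energy.
    it, cooling = 0.5, 0.5
    baseline = pue(it, cooling)                   # air-cooled baseline
    after_95 = pue(it, cooling * (1 - 0.95))      # cooling energy cut by 95%
    # Cooling energy (as a share of the original total) that a PUE of 1.03 would allow.
    target_cooling = (1.03 - 1.0) * it
    print(f"baseline PUE {baseline:.2f}, with 95% cooling savings {after_95:.3f}")
    print(f"PUE 1.03 allows cooling of {target_cooling:.3f} of the original total "
          f"instead of {cooling * 0.05:.3f}")
```

The gap between 1.05 and 1.03 is the extra 2 points the article attributes to removing fans and reducing chip leakage, which in turn shrinks the heat the cooling system must handle.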

#### The success of various companies in cooling servers in liquid

Mineral oil effectively protects against corrosion and dust because, unlike air, it contains no water or oxygen, which extends the life of the equipment. It is non-toxic and odorless, since it practically does not evaporate. But companies achieve this with varying effectiveness, and choosing the right mineral oil is a real art.

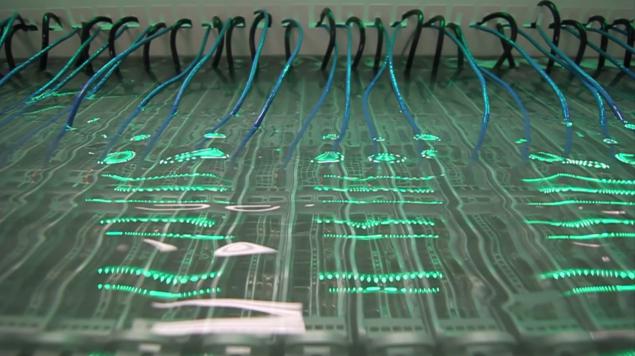

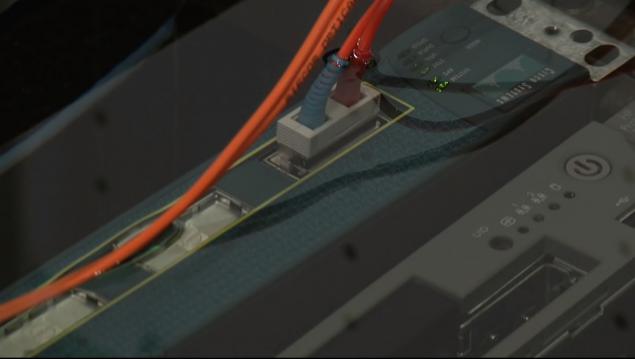

Various companies have long been working closely on these issues and achieve different results with different mineral oils, creating their own "know-how". About a month ago, Intel and SGI announced the "news" that, using mineral oil and a cooling system of their own design in which servers operate immersed in liquid, they can remove several tens of kilowatts or more of heat from a cabinet. But they still have problems: their report specifically mentioned that conventional optical cables will probably not work in their mineral oil. The reason, alas, is not stated; perhaps the oil is aggressive toward them. The solution is far from commercial deployment.

Another company, GRC, has long used a much more effective mineral oil, offers a ready commercial solution, and has no such problems. It has not published this as "news", yet it says it can remove up to 100 kW or more of heat from a cabinet, significantly surpassing Intel's result! So you need to be critical of everything you read in the news. If one company announces "know-how", it does not mean that others have not already come up with something better; the announcing company may simply be at the beginning of its journey in a direction that is new to it :) As mentioned above, Intel is still very far from commercial deployment of this solution, and in the end no company's solution can do without that.

#### Outlook

On today's motherboards, components are laid out at "huge" distances from one another to maximize heat dissipation, because they are cooled by air, which is not a very efficient coolant. Liquid cooling could lead to production servers with much more densely packed circuits, designed to operate in liquid and to exploit its heat-removal properties, since a liquid has not only higher thermal conductivity than air but also a much higher heat capacity. The most effective mineral oils available today have a heat capacity per unit volume more than 1,200 times that of air!
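
The "1,200 times" comparison only makes sense per unit volume: per kilogram, a mineral oil stores only about twice as much heat as air. The sketch below shows both views. The oil density (850 kg/m³) and the range of specific heats are assumed typical values for light mineral oils, and the air values are approximate room-temperature figures; real products will differ.

```python
AIR_RHO, AIR_CP = 1.18, 1005.0  # air near 25 C: kg/m^3, J/(kg*K) (approximate)


def ratio_to_air(density: float, specific_heat: float) -> float:
    """Volumetric heat capacity (rho * c_p) of a liquid relative to air."""
    return (density * specific_heat) / (AIR_RHO * AIR_CP)


if __name__ == "__main__":
    oil_rho = 850.0  # assumed density of a light mineral oil, kg/m^3
    for cp in (1670.0, 1900.0, 2100.0):  # assumed range of specific heats, J/(kg*K)
        print(f"rho={oil_rho:.0f} kg/m^3, c_p={cp:.0f} J/(kg*K): "
              f"{ratio_to_air(oil_rho, cp):.0f}x air per unit volume")
    # Per kilogram the advantage is small; density is what makes the difference.
    print(f"per kilogram only: {1900.0 / AIR_CP:.1f}x air")
```

Across this assumed range the per-volume ratio lands at roughly 1,200 to 1,500, while the per-kilogram ratio stays below 2: the oil's advantage comes mostly from being about 700 times denser than air.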

All of this not only allows more efficient heat removal but also, if the cooling system stops, gives much more time for repairs before the equipment reaches a critical state due to rising temperature: the properties of the liquid (high heat capacity and density) let it absorb much more heat without overheating itself, pushing the "critical overheating" threshold further out in time.
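
The extra repair time can be estimated with a lumped heat balance: once heat removal stops, the coolant absorbs the full server load, so it takes t = m·c_p·ΔT / P to warm by ΔT. The sketch assumes a perfectly mixed, adiabatic tank and ignores the heat capacity of the hardware and tank walls. The 1 m³ volume, 20 kW load, 20 K allowable rise, and oil properties are hypothetical values chosen only for illustration.

```python
def ride_through_seconds(volume_m3: float, density: float, specific_heat: float,
                         allowed_rise_k: float, heat_load_w: float) -> float:
    """Time until the coolant warms by allowed_rise_k after heat removal stops.

    Lumped, adiabatic estimate: all server heat goes into a perfectly mixed
    coolant; hardware and tank heat capacity are ignored.
    t = m * c_p * dT / P
    """
    if heat_load_w <= 0:
        raise ValueError("heat load must be positive")
    mass = volume_m3 * density
    return mass * specific_heat * allowed_rise_k / heat_load_w


if __name__ == "__main__":
    # Hypothetical 1 m^3 of coolant, 20 kW of servers, 20 K of allowed temperature rise.
    oil = ride_through_seconds(1.0, 850.0, 1900.0, 20.0, 20_000.0)
    air = ride_through_seconds(1.0, 1.18, 1005.0, 20.0, 20_000.0)
    print(f"mineral oil: {oil:.0f} s (~{oil / 60:.0f} min)")
    print(f"air:         {air:.1f} s")
```

Under these assumptions the oil buys about 27 minutes (1615 s) before reaching the limit, while the same volume of air would heat up by 20 K in roughly a second.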

The prospects for supercomputers operating in liquid are very large and wide open, and the savings in energy and space when running high-performance equipment are colossal.

Most likely, computing and server equipment designed from the outset to operate immersed in liquid will appear in the future. The advantages are huge and there are almost no drawbacks, except that cabinets now have to be placed horizontally rather than vertically, which is somewhat unusual. This makes it possible to increase the equipment density in the data center and provides an additional level of protection: if the data center is suddenly flooded in a disaster, the water will have no effect on the servers, since they are already immersed in liquid (albeit one of a different density) inside securely sealed cabinets.

#### Liquid cooling in numbers

##### Savings of more than 60% on construction:

- There is no need to purchase expensive chillers or HVAC (heating, ventilation, and air conditioning) systems;

- No need to build hot/cold aisles or install a raised floor;

- Fewer generators, batteries, and uninterruptible power supplies (UPS) are needed per N units of equipment, because this equipment consumes less energy when operating in liquid;

- Infrastructure cost per watt is 73% lower than for an air-cooled data center, and 55% lower than for a data center that uses the environment for cooling;

- Infrastructure cost per server is lower by 86% and 70%, respectively.

##### Savings of more than 50% in operation:

- Equipment immersed in liquid consumes 10-20% less energy, depending on the fan type, because there are no fans and chip leakage currents are lower: the chips sit in the dielectric at a constant temperature;

- 90-95% of cooling energy is saved by immersing the servers in coolant and doing without large cooling systems in the data center, because heat from the enclosures holding servers in mineral oil can now be removed effectively by an evaporative cooling tower (no mechanics, only water evaporation) or by a cold-water loop;

- No amortization costs for conventional cooling systems, and power-supply costs drop significantly per N units of equipment, because fewer batteries, including UPS, are needed;

- Mineral oil lasts practically forever: unlike other coolants used in data centers, it does not need to be replaced and hardly ever needs topping up (except in case of leaks);

- If an average server consumes about 230-270 watts plus 50-170 watts for cooling, depending on the cooling method, liquid cooling reduces average consumption to 210 watts per server, while the energy required for cooling is about 10 watts!
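
The per-server figures in the last item can be checked with simple arithmetic. The sketch below pairs the low ends (230 W + 50 W) and the high ends (270 W + 170 W) of the quoted air-cooled ranges as best and worst cases; that pairing is an assumption, since only the ranges are given.

```python
def total_power(server_w: float, cooling_w: float) -> float:
    """Power attributed to one server: its own draw plus its share of cooling."""
    return server_w + cooling_w


def savings(before_w: float, after_w: float) -> float:
    """Fractional reduction in power going from before_w to after_w."""
    return 1.0 - after_w / before_w


if __name__ == "__main__":
    # Per-server figures quoted above, in watts; best/worst pairing is assumed.
    air_best = total_power(230.0, 50.0)    # most favourable air-cooled case
    air_worst = total_power(270.0, 170.0)  # least favourable air-cooled case
    immersed = total_power(210.0, 10.0)    # immersion-cooled server
    print(f"saving vs best air case:  {savings(air_best, immersed):.0%}")
    print(f"saving vs worst air case: {savings(air_worst, immersed):.0%}")
```

Under that pairing, the immersion-cooled server's 220 W total is roughly 21% below the best air-cooled case (280 W) and 50% below the worst one (440 W), so the saving depends heavily on the air-cooled baseline it is compared against.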

You can remove more than 100 kW of heat from a 42U cabinet by immersing the equipment in mineral oil! You can also significantly reduce spending on server hardware, by up to 50% on various components: because the constant operating temperature is about 20 °C lower than in air, even desktop components can be used without fear, since they run at much lower temperatures.

#### From "underwater" servers to PCs: how to build a liquid-cooled workstation at home

Of course, this idea has not found, and will not find, wide use in the PC market, simply because most users have long since switched to laptops and other gadgets; home workstations in "tower" cases are mostly used by professionals, because they need more performance and have to dissipate large amounts of heat. For them, immersing their precious hardware in liquid can be very useful!

It turns out that doing this at home is not difficult, and many hobbyists and modders have apparently been doing it for quite some time. Some companies even offer a ready-made solution for purchase, based on the mineral oil "Crystal Plus 70T", which is available in stores and, according to the experimenters, is ideally suited to the task: its heat capacity is 750 times that of air, and its density is lower than that of water.

The fluid can be stirred by passing air through the mineral oil or with an ordinary computer fan, which spins much more slowly in mineral oil than in air but still does its job. Asked what happens to the water vapor that gets into the oil when air is bubbled through it for mixing, the developers answer that, because water is denser, it would collect at the bottom of the "tank", where there should be no electrical components. However, over a long period of operation they have not seen even tiny amounts of water appear; otherwise the tank would have started to resemble a "lava lamp".

#### I want fish swimming in my servers! Put fish in there!

I must admit that seeing all kinds of fish underwater is an unforgettable experience. I am a diver, and I would not refuse a home workstation in the form of an aquarium with tropical (or not-so-tropical) fish.

But alas, genetic engineering still lags behind IT fashion: fish will die if placed in mineral oil, since its properties are very far from the water fish need. Hopefully someone will realize this idea in the future, through new technologies or genetic engineering, but for now you can use robotic fish!

I'll be the first to order a robotic boxfish!

And of course, don't forget to support the construction of our future underwater data center (an idea I have no doubt about): order dedicated servers from us, for now in the Netherlands and the United States from $49, and in the future on the Barrier Reef as well!

Source: habrahabr.ru/company/ua-hosting/blog/222669/
